Making Pre-trained Language Models Better Few-shot Learners (DeepAI)

Inspired by their findings, we study few-shot learning in a more practical scenario, where we use smaller language models for which fine-tuning is computationally efficient.

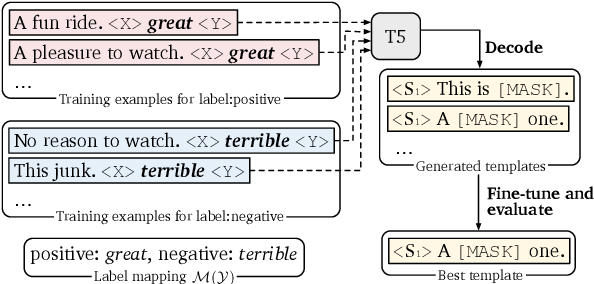

Figure 2 from Making Pre-trained Language Models Better Few-shot Learners.

In this paper, we propose a theoretical framework to explain the efficacy of prompt learning in zero/few-shot scenarios. First, we prove that the conventional pre-training and fine-tuning paradigm fails in few-shot scenarios due to overfitting the unrepresentative labelled data.

This study proposes a novel pluggable, extensible, and efficient approach named differentiable prompt (DART), which can convert small language models into better few-shot learners without any prompt engineering.

The paper describes how zero-shot and few-shot learning improve NLP by using minimal labelled data. It emphasizes pre-trained models that generalize to tasks like sentiment analysis and translation. The paper explores how pre-trained models like BERT and GPT enhance zero-shot learning and explores chain-of-thought prompting, highlighting the superiority of fine-tuned proprietary models in ...

Using separate model. Making pre-trained language models better few-shot learners, without updating any of the weights! What is a prompt? The key challenge is to construct the template and the label words; we refer to these two together as a prompt.
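To make the idea of a prompt as "template plus label words" concrete, here is a minimal Python sketch of prompt-based classification. It is an illustration under stated assumptions, not the method of any of the papers quoted above: the template string, the label words (`great`, `terrible`), the `[MASK]` token, and the `toy_mask_scorer` function are all hypothetical. In practice the scorer would be a masked language model that returns scores for candidate words at the mask position.

```python
from typing import Callable, Dict

MASK = "[MASK]"  # assumed mask token; real models define their own

# Hypothetical template and label words for a sentiment task.
TEMPLATE = "{sentence} It was {mask}."
LABEL_WORDS = {"positive": "great", "negative": "terrible"}


def build_prompt(sentence: str, template: str) -> str:
    """Insert the input sentence and a mask slot into the template."""
    return template.format(sentence=sentence, mask=MASK)


def classify(
    sentence: str,
    template: str,
    label_words: Dict[str, str],
    mask_scorer: Callable[[str], Dict[str, float]],
) -> str:
    """Return the label whose label word scores highest at the mask slot."""
    prompt = build_prompt(sentence, template)
    scores = mask_scorer(prompt)  # maps candidate word -> score
    return max(
        label_words,
        key=lambda label: scores.get(label_words[label], float("-inf")),
    )


def toy_mask_scorer(prompt: str) -> Dict[str, float]:
    """Stand-in for a masked language model (assumption, not a real model).

    It scores "great" higher when the prompt contains an obviously
    positive cue, and "terrible" higher otherwise.
    """
    positive_cues = ("loved", "delightful")
    is_positive = any(cue in prompt.lower() for cue in positive_cues)
    if is_positive:
        return {"great": 2.0, "terrible": -1.0}
    return {"great": -1.0, "terrible": 2.0}


if __name__ == "__main__":
    print(build_prompt("I loved this film.", TEMPLATE))
    print(classify("I loved this film.", TEMPLATE, LABEL_WORDS, toy_mask_scorer))
```

Swapping `toy_mask_scorer` for a function backed by a real masked language model would leave `build_prompt` and `classify` unchanged, which is why the sketch keeps the scorer as a pluggable argument.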

