Making Floating-Point Math Highly Efficient for AI Hardware

We have made radical changes to floating point to make it as much as 16 percent more efficient than int8/32 math (8-bit integer multiplies with 32-bit accumulation). Our approach is still highly accurate for convolutional neural networks, and it offers several additional benefits; for example, our technique can improve the speed of AI research and development. Recently, a new 8-bit floating-point format (FP8) has been proposed for efficient deep learning training. Because some layers in neural networks can be trained in FP8 rather than in the incumbent FP16 and FP32 formats, this format would substantially improve training efficiency.
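The paragraph above does not say which FP8 layout it means. As a minimal sketch, assuming the E4M3 variant from the OCP FP8 specification (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits, no infinities, and the pattern S.1111.111 reserved for NaN), the following decodes a single byte into a Python float. `decode_e4m3` is a hypothetical helper name, not a library API.

```python
def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 byte: 1 sign, 4 exponent (bias 7), 3 mantissa bits.

    Assumption: OCP-style E4M3, i.e. no infinities and S.1111.111 is NaN.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected an 8-bit value")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0xF  # 4 exponent bits
    mantissa = byte & 0x7         # 3 stored mantissa bits
    if exponent == 0xF and mantissa == 0x7:
        return float("nan")       # the only NaN encoding (per sign)
    if exponent == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias = -6.
        return sign * (mantissa / 8) * 2.0 ** -6
    # Normal number: implicit leading 1 in front of the 3 mantissa bits.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


print(decode_e4m3(0x38))  # 1.0
print(decode_e4m3(0x7E))  # 448.0 (largest finite E4M3 value)
```

Enumerating all 256 bit patterns with this helper yields 253 distinct finite values (two patterns are NaN, and +0 and -0 compare equal), spanning roughly ±2^-9 to ±448, which shows how little dynamic range and precision an 8-bit format leaves to work with.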

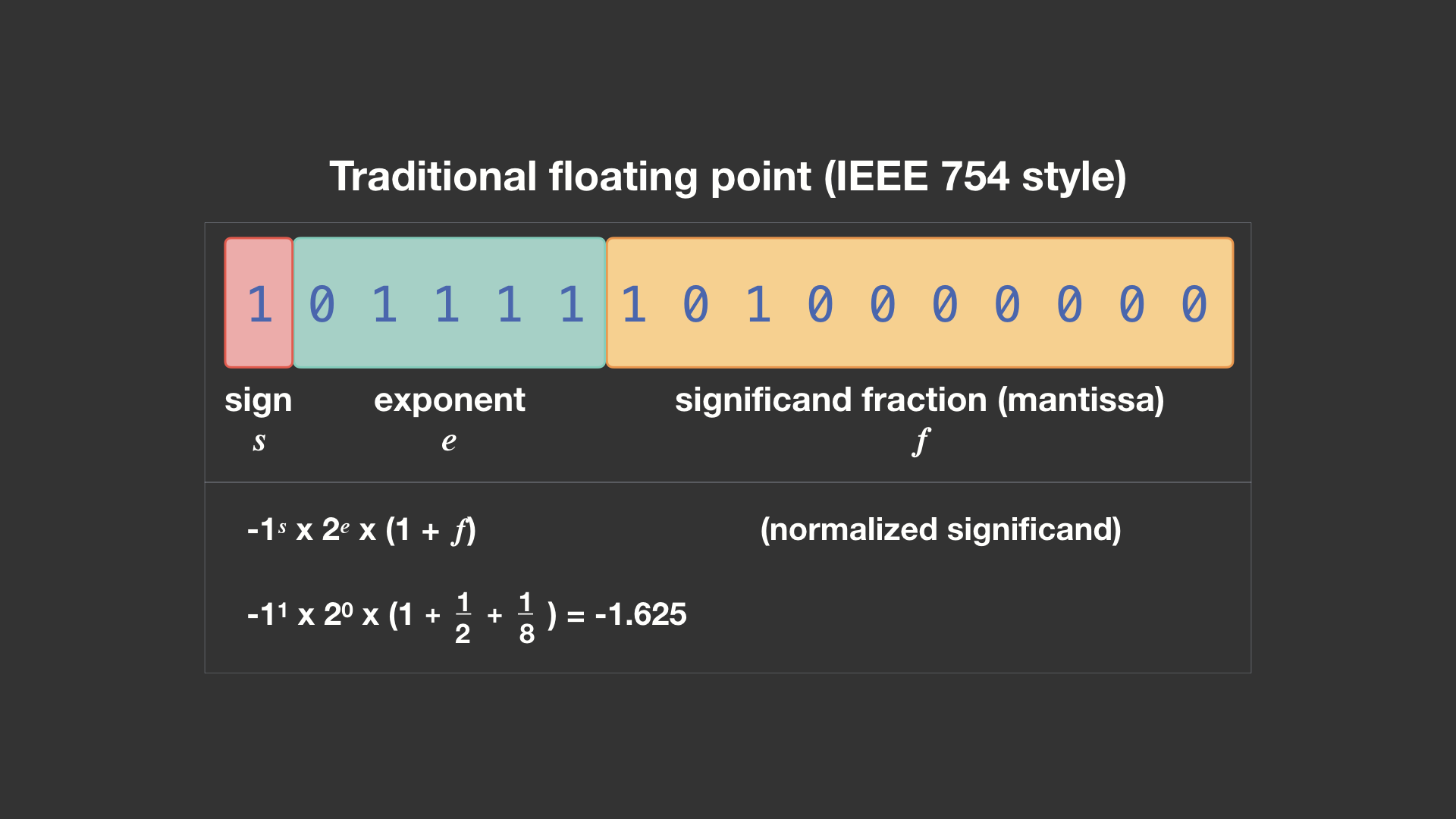

Many machine intelligence processors offer competing 16-bit floating-point representations to accelerate machine intelligence workloads, such as IEEE 754 half-precision (binary16) numbers or Google's bfloat16 format. As argued in this paper, however, using floating-point numbers to represent weights may result in significantly more efficient hardware implementations. Fused multiply-add (FMA) operations, which round only the final result of a*b + c rather than the intermediate product, may provide additional performance improvements. This paper examines the historical progression of floating-point computation in scientific applications and places recent AI-driven trends in context, particularly the adoption of reduced-precision floating-point types. It introduces hybrid-precision floating-point (HPFP) selection algorithms, designed to systematically reduce precision and implement hybrid-precision strategies, balancing layer-wise arithmetic operations against data-path precision to address the shortcomings of traditional floating-point formats.
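To illustrate why rounding only the final result matters, here is a minimal sketch that emulates an FMA in Python: it computes a*b + c exactly with `fractions.Fraction` and rounds once to float64. It demonstrates single versus double rounding only and is not a model of any particular hardware. Python 3.13 also provides `math.fma`; this sketch avoids it so that it runs on older versions. `fma_emulated` is a hypothetical name.

```python
from fractions import Fraction


def fma_emulated(a: float, b: float, c: float) -> float:
    """Compute a*b + c with a single rounding to float64 (finite inputs only).

    Fraction arithmetic is exact, and converting the exact result back to
    float rounds once to the nearest double, as a hardware FMA does.
    """
    exact = Fraction(a) * Fraction(b) + Fraction(c)
    return float(exact)


a = 1 + 2.0 ** -30
b = 1 - 2.0 ** -30  # exact product a*b is 1 - 2**-60
c = -1.0

separate = a * b + c            # product rounds to 1.0 first, then the add
fused = fma_emulated(a, b, c)   # exact product survives until the final rounding

print(separate)                 # 0.0
print(fused == -2.0 ** -60)     # True
```

The separate multiply rounds 1 - 2^-60 to 1.0 before the addition, so the small result is lost entirely; the fused version keeps it because nothing is rounded until the end.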

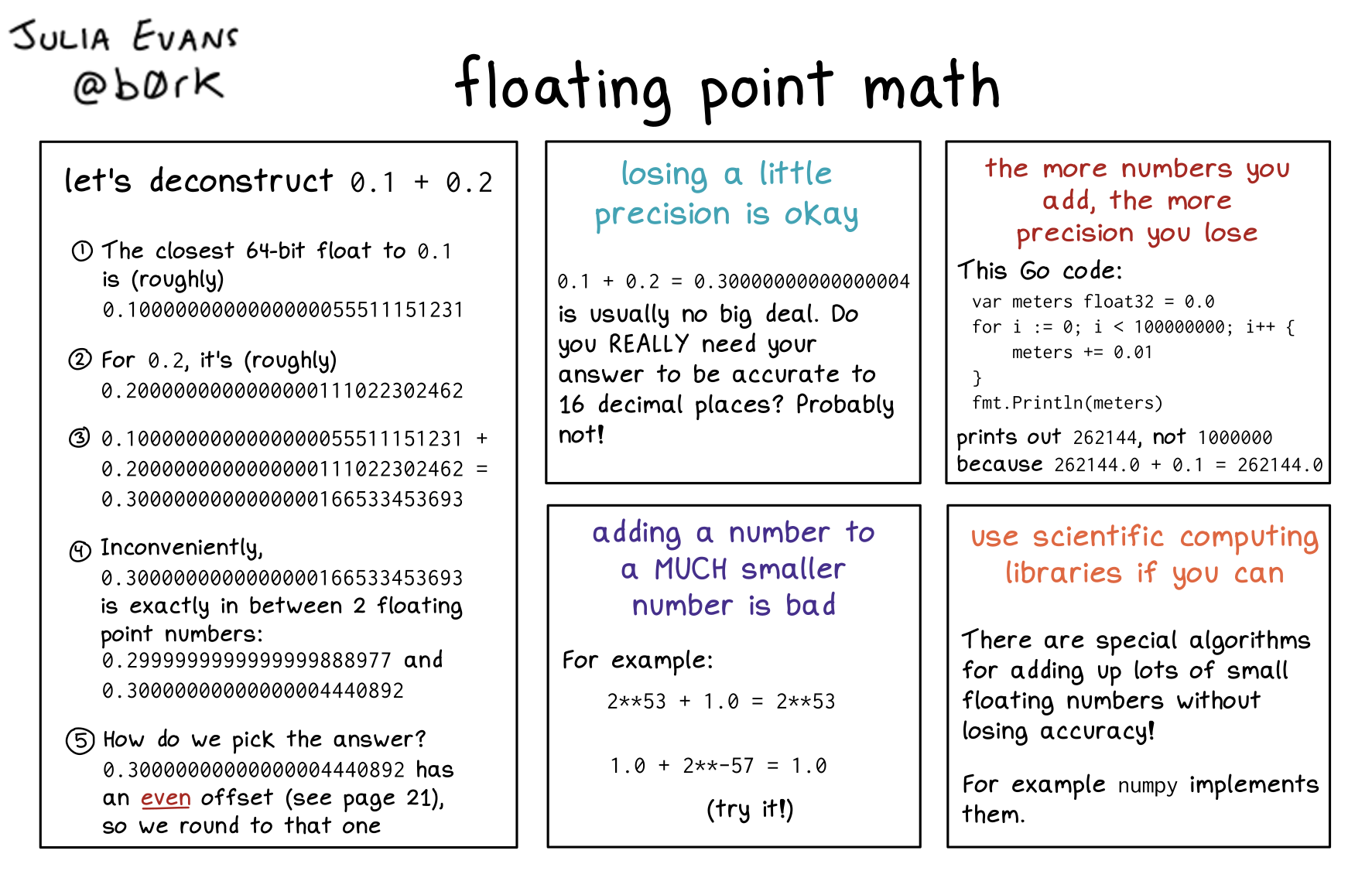

Tapered floating point, which spends more significand bits on values near 1 and fewer on very large or very small magnitudes, can be applied on top of this to help with the primary chip power problem: moving bits around. Because the encoding then matches the data distribution better, more problems can be solved with a smaller word size. The development of accurate and efficient machine learning models for predicting the structure and properties of molecular crystals has been hindered by the scarcity of publicly available datasets of structures with property labels. Floating-point operations are widely used in communication algorithms, digital signal processing, artificial intelligence, and other fields. However, the l… With the increasing need for efficient DNN training, which is crucial for applications such as self-driving cars and sophisticated security monitoring systems, we have developed the hybrid-precision floating-point (HPFP) selection method. This approach is designed to maximize both computational efficiency and precision in DNN training.
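The text describes HPFP selection only at a high level. The sketch below is a hypothetical toy of per-layer precision selection, not the published HPFP algorithm: for each layer it tries candidate formats from narrowest to widest and keeps the first whose worst-case relative rounding error on that layer's values stays within a tolerance. The format table, `round_to_bits`, and `select_precision` are illustrative assumptions, and the model ignores exponent range, overflow, and subnormals, so it measures significand precision only.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical candidate table: significant bits (stored mantissa bits plus
# the implicit leading 1), narrowest first. Exponent range is ignored.
CANDIDATE_FORMATS = [
    ("fp8_e4m3", 4),
    ("bfloat16", 8),
    ("fp16", 11),
    ("fp32", 24),
]


def round_to_bits(x: float, bits: int) -> float:
    """Round x to `bits` significant bits (round() breaks ties to even)."""
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)           # x = m * 2**e with 0.5 <= |m| < 1
    scaled = round(m * 2 ** bits)  # integer holding `bits` significant bits
    return math.ldexp(scaled, e - bits)


def max_relative_error(values: Sequence[float], bits: int) -> float:
    """Worst-case relative rounding error over the nonzero values."""
    return max(
        (abs(round_to_bits(v, bits) - v) / abs(v) for v in values if v != 0.0),
        default=0.0,
    )


def select_precision(layers: Dict[str, List[float]], tol: float) -> Dict[str, str]:
    """Pick, per layer, the narrowest candidate whose error is <= tol."""
    choice = {}
    for name, values in layers.items():
        for fmt, bits in CANDIDATE_FORMATS:
            if max_relative_error(values, bits) <= tol:
                choice[name] = fmt
                break
        else:
            # No candidate met the tolerance: fall back to the widest one.
            choice[name] = CANDIDATE_FORMATS[-1][0]
    return choice


layers = {"conv1": [0.5, 0.25, -1.0, 2.0], "fc": [0.1, 0.2, 0.3]}
print(select_precision(layers, tol=1e-3))
# {'conv1': 'fp8_e4m3', 'fc': 'fp16'}
```

Powers of two are exact at any width, so `conv1` lands in the narrowest format, while 0.3 rounded to 8 significant bits has about 0.26% relative error, which pushes `fc` past bfloat16 to FP16 at a 0.1% tolerance.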
