LLM Task Evals for Business Use Cases: What You Need to Know

LLM Evaluation: Everything You Need to Run, Benchmark LLM Evals

We put together 7 examples of how top companies like Asana and GitHub run LLM evaluations. They share how they approach the task, what methods and metrics they use, what they test for, and their learnings along the way. This 3-part series is focused on LLM evaluation techniques spanning time-series data, custom tasks, and customer feedback. Participants will learn the latest methodologies and applications of AI observability approaches in practical scenarios.

LLM Evals in Practice: LLM Task Evals for Business Use Cases (Arize AI)

In this article, I'll go through why LLM evaluation fails when it is not outcome-driven, and how to solve it. In this post, we’ll walk through some tried-and-true best practices, common pitfalls, and handy tips to help you benchmark your LLM’s performance. Whether you’re just starting out or looking for a quick refresher, these guidelines will keep your evaluation strategy on solid ground. But how do you evaluate these models for your use case? This article is a deep dive into evaluations, covering accuracy, speed, cost, customization, context window, safety, and licensing. That's where LLM evaluations come in, ensuring models are reliable, accurate, and meet business preferences. In this article, we'll dive into why evaluating LLMs is important and explore LLM evaluation metrics, frameworks, tools, and challenges.

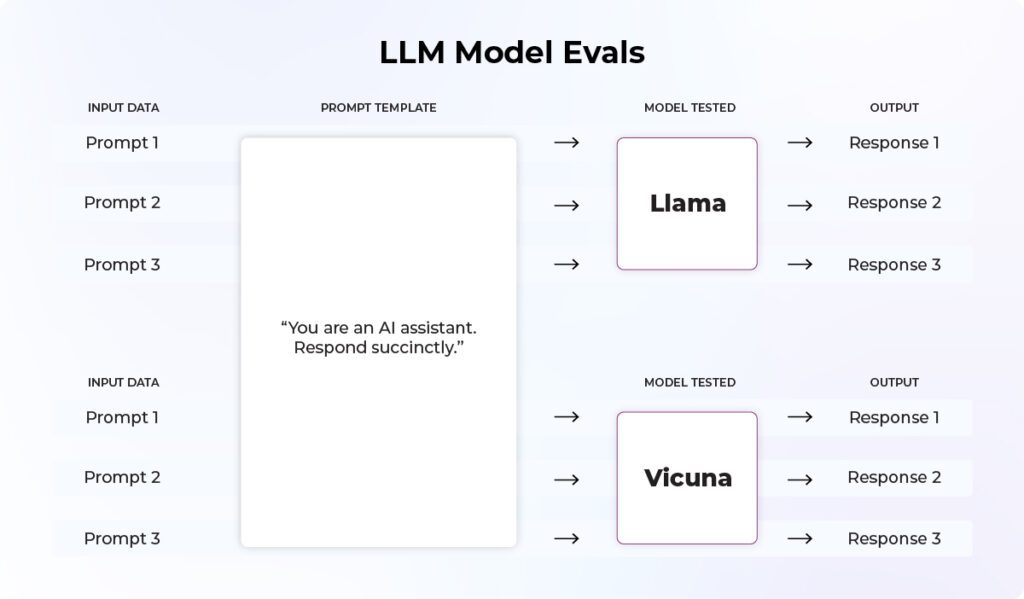

If you've ever wondered how to make sure an LLM performs well on your specific task, this guide is for you! It covers the different ways you can evaluate a model, guides on designing your own evaluations, and tips and tricks from practical experience. Master LLM evaluation with component-level and end-to-end methods. Learn metric alignment, ROI correlation, and scaling strategies for effective LLM evals. Large language models (LLMs) are an incredible tool for developers and business leaders to create new value for consumers. They make personal recommendations, translate between unstructured and. LLM evaluation is the process of assessing the performance and capabilities of large language models. Sometimes referred to simply as “LLM eval,” it entails testing these models across various tasks, datasets, and metrics to gauge their effectiveness.
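To make "testing across various tasks, datasets and metrics" concrete, here is a minimal sketch of an evaluation loop. Everything in it is an assumption made for illustration: the `Example` type, the two metrics, the sample data, and `stub_model`, which stands in for a real LLM call. None of it comes from a specific framework or from the articles quoted here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Example:
    """One labeled test case (hypothetical structure for this sketch)."""
    prompt: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Return 1.0 when the normalized output equals the expected answer."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def token_overlap(output: str, expected: str) -> float:
    """Fraction of expected tokens that also appear in the output."""
    expected_tokens = expected.lower().split()
    if not expected_tokens:
        return 0.0
    output_tokens = set(output.lower().split())
    return sum(t in output_tokens for t in expected_tokens) / len(expected_tokens)


def evaluate(
    model: Callable[[str], str],
    datasets: Dict[str, List[Example]],
    metrics: Dict[str, Callable[[str, str], float]],
) -> Dict[str, Dict[str, float]]:
    """Average every metric over every task's dataset."""
    report: Dict[str, Dict[str, float]] = {}
    for task, examples in datasets.items():
        if not examples:
            continue  # skip empty datasets to avoid dividing by zero
        outputs = [model(ex.prompt) for ex in examples]
        report[task] = {
            name: sum(metric(out, ex.expected) for out, ex in zip(outputs, examples))
            / len(examples)
            for name, metric in metrics.items()
        }
    return report


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; returns canned answers (assumption)."""
    canned = {
        "Classify the ticket: 'I was charged twice.'": "billing",
        "Classify the ticket: 'The app crashes on login.'": "billing",
        "Summarize: 'Order 12 shipped late due to weather.'": "order shipped late",
    }
    return canned.get(prompt, "")


# Illustrative data only: two business tasks, each with its own small dataset.
DATASETS = {
    "ticket_routing": [
        Example("Classify the ticket: 'I was charged twice.'", "billing"),
        Example("Classify the ticket: 'The app crashes on login.'", "technical"),
    ],
    "summarization": [
        Example(
            "Summarize: 'Order 12 shipped late due to weather.'",
            "order shipped late due to weather",
        ),
    ],
}
METRICS = {"exact_match": exact_match, "token_overlap": token_overlap}

if __name__ == "__main__":
    for task, scores in evaluate(stub_model, DATASETS, METRICS).items():
        print(task, scores)
```

Keeping the model, the datasets, and the metrics as separate arguments means any one of them can be swapped without touching the loop, for example replacing `stub_model` with a function that calls a hosted model, or adding a task-specific metric alongside the generic ones.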


