LLM in a Flash: Efficient LLM Inference with Limited Memory (r/hypeurls)

Note that by "data" we mean the weights of the neural network. However, the techniques we developed can be easily generalized to other data types transferred and used for LLM inference, such as activations or the KV cache, as suggested by (Sheng et al., 2023). Our integration of sparsity awareness, context-adaptive loading, and a hardware-oriented design paves the way for effective inference of LLMs on devices with limited memory.

Researchers have introduced a method for efficiently deploying large language models (LLMs) on devices with limited memory. In this post we dive into the "LLM in a Flash" paper by Apple, which introduces a method to run LLMs on devices that have limited memory. The paper tackles the challenge of efficiently running LLMs that exceed the available DRAM capacity by storing the model parameters in flash memory and bringing them into DRAM on demand.
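To make the "store in flash, load on demand" idea concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: a temporary file stands in for flash storage, a dictionary stands in for DRAM, and the names `write_weights`, `load_rows`, `ROW_WIDTH`, and the toy row values are all assumptions made for illustration.

```python
import os
import struct
import tempfile

ROW_WIDTH = 4                # floats per weight row (toy size, assumed)
ROW_BYTES = ROW_WIDTH * 4    # each value is stored as a 4-byte float32


def write_weights(path, rows):
    """Write every row to a file that stands in for flash storage."""
    with open(path, "wb") as f:
        for row in rows:
            f.write(struct.pack(f"<{ROW_WIDTH}f", *row))


def load_rows(path, row_ids):
    """Read only the requested rows into memory (the 'DRAM' copy)."""
    loaded = {}
    with open(path, "rb") as f:
        for i in sorted(row_ids):
            f.seek(i * ROW_BYTES)  # jump straight to row i
            loaded[i] = struct.unpack(f"<{ROW_WIDTH}f", f.read(ROW_BYTES))
    return loaded


# Toy model with 8 rows; assume only rows 1 and 6 are needed for this step.
all_rows = [[float(r * 10 + c) for c in range(ROW_WIDTH)] for r in range(8)]
fd, weights_path = tempfile.mkstemp(suffix=".bin")
os.close(fd)
write_weights(weights_path, all_rows)
in_dram = load_rows(weights_path, {1, 6})
os.remove(weights_path)
```

Only two of the eight rows are ever read back, which is the point of the approach: the full parameter set lives on the slower, larger store, and memory holds just the part the current step needs.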

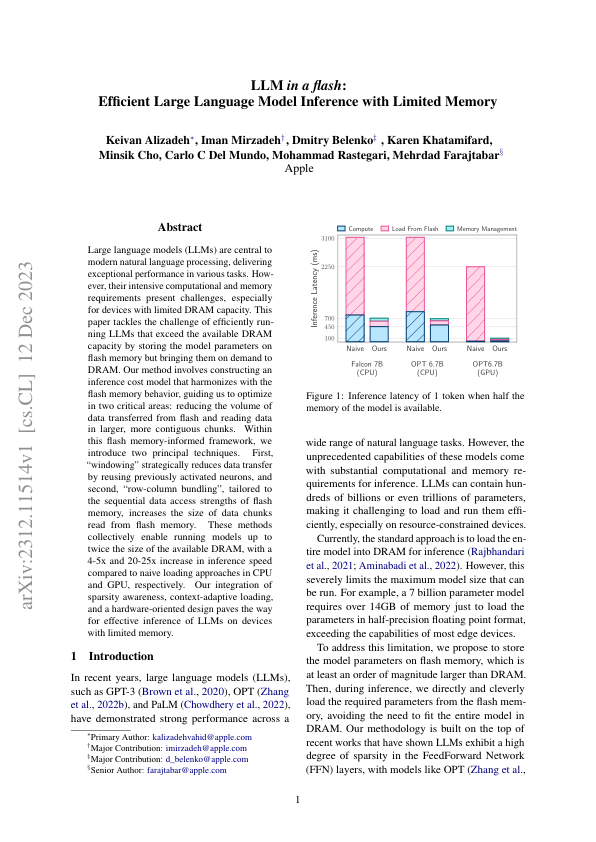

Efficient inference techniques have been developed to tackle the challenges of running large language models on devices with limited memory.

Flash-LLM, a separate project, aims to optimize the four matmuls based on a key approach called "Load as Sparse and Compute as Dense" (LSCD). It reportedly shows superior performance in both single SpMM kernels and end-to-end LLM inference.

Large language models (LLMs) are central to modern natural language processing, delivering exceptional performance in various tasks. However, their substantial computational and memory requirements present challenges, especially for devices with limited DRAM capacity. The paper addresses this with two techniques. First, "windowing" strategically reduces data transfer by reusing previously activated neurons. Second, "row-column bundling", tailored to the sequential data access strengths of flash memory, increases the size of the data chunks read from flash memory.
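The two techniques can be illustrated with a small sketch. This is a simplified reading of the ideas, not the paper's code: the class `WindowedNeuronCache`, the function `bundle`, the window size, and the toy neuron ids are hypothetical. The bundling layout shown (column i of the up projection stored next to row i of the down projection) reflects our understanding of the paper and should be treated as an assumption.

```python
from collections import deque


class WindowedNeuronCache:
    """Keep in memory the neurons used by the last `window` tokens."""

    def __init__(self, window):
        self.recent = deque(maxlen=window)  # one active-neuron set per token
        self.resident = set()               # neuron ids currently in DRAM

    def step(self, active):
        """Process one token and return the ids that must be read from flash."""
        active = set(active)
        to_load = active - self.resident    # everything else is reused
        self.recent.append(active)          # the oldest set drops out when full
        self.resident = set().union(*self.recent)
        return to_load


def bundle(up_columns, down_rows):
    """Store column i of the up projection next to row i of the down projection,
    so one neuron's weights form a single, larger contiguous chunk."""
    return [list(col) + list(row) for col, row in zip(up_columns, down_rows)]


# Four tokens, each activating a few (made-up) neuron ids.
cache = WindowedNeuronCache(window=2)
loads = [sorted(cache.step(a)) for a in ([1, 2, 3], [2, 3, 4], [3, 4, 5], [1, 5])]

# Two neurons, each with a 2-element column and a 2-element row.
bundled = bundle([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

After the first token, each step loads a single new neuron because the others are still resident from the previous tokens. Neuron 1 has to be loaded again on the last token because it fell out of the two-token window. In `bundled`, each neuron's record is twice as long as either of its parts, so one read fetches both.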
