LLM in a Flash: Efficient Large Language Model Inference with Limited Memory

Generative, agentic, and reasoning-driven AI workloads are growing exponentially, in many cases requiring 10 to 100 times more compute per query than previous Large Language Model (LLM) …

A new technical paper titled “Efficient LLM Inference: Bandwidth, Compute, Synchronization, and Capacity are all you need” was published by NVIDIA. Abstract: “This paper presents a limit study of …

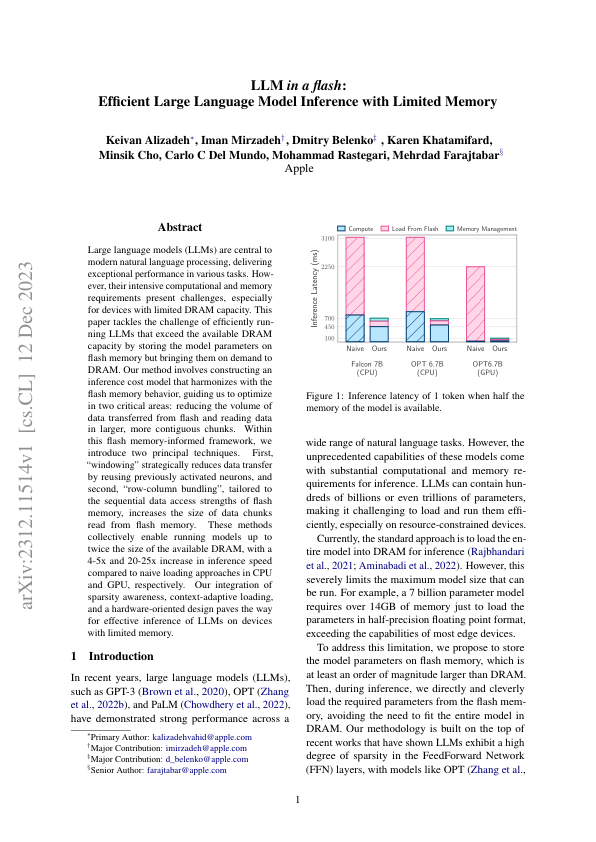

High-quality output at low latency is a critical requirement when using large language models (LLMs), especially in real-world scenarios, such as chatbots interacting with customers, or the AI …

Japanese AI lab Sakana AI has introduced a new technique that allows multiple large language models (LLMs) to cooperate on a single task, effectively creating a “dream team” of AI agents. The …

In Figure 1, examples of this are the generation of “be, with” and “you”.

Fig 1: LLM inference flow

During the prefill stage, the model needs to compute attention over all previous tokens. However, …

Mere days after releasing for free and with open-source licensing what is now the top performing non-reasoning large language model (LLM) in the world, full stop, even compared to proprietary …
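To make the prefill-stage remark above concrete, here is a minimal single-head sketch in plain Python. It assumes scaled dot-product attention with a causal mask and no learned projections. The key/value cache and the names `attend`, `prefill`, and `decode_step` are illustrative assumptions and do not come from the original text.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over the given keys/values."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def prefill(queries, keys, values):
    """Process the whole prompt: token i attends to tokens 0..i (causal mask).

    Returns the per-token outputs and a (keys, values) cache. The cache is an
    assumption of this sketch, not something the text describes.
    """
    outputs = [
        attend(queries[i], keys[: i + 1], values[: i + 1])
        for i in range(len(queries))
    ]
    kv_cache = (list(keys), list(values))
    return outputs, kv_cache


def decode_step(query, key, value, kv_cache):
    """Generate one token: extend the cache, then attend over everything in it."""
    cached_keys, cached_values = kv_cache
    cached_keys.append(key)
    cached_values.append(value)
    return attend(query, cached_keys, cached_values)


if __name__ == "__main__":
    # Toy 2-dimensional vectors standing in for three prompt tokens.
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    outputs, cache = prefill(q, k, v)
    print(outputs[0])  # the first token can only attend to itself
```

In this sketch the prefill pass evaluates n(n+1)/2 query-key pairs for an n-token prompt, while each later decode step evaluates only one pair per cached token.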


