LLM Benchmarking: How One LLM Is Tested Against Another (LLM Evaluation Benchmarks, Simplilearn)

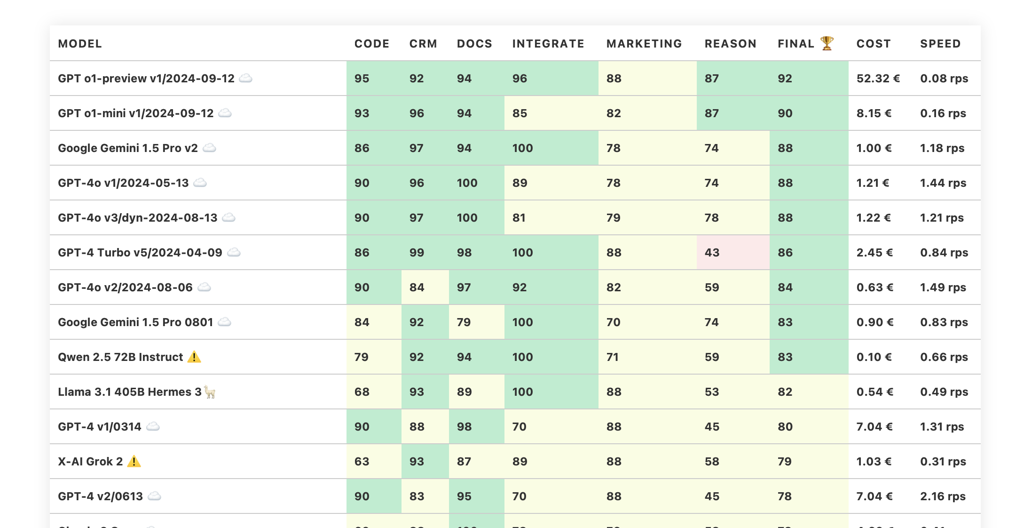

Benchmarking LLMs for Business Workloads

Whether you're curious about how AI models like GPT, Claude, or Llama are ranked, or you want to understand the benchmarking process that drives the development of cutting-edge language models, this guide explains LLM evaluation: how to define benchmarks and metrics, and how to measure progress as you optimize your LLM's performance.
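
To make "define benchmarks and metrics, and measure progress" concrete, here is a minimal Python sketch. It assumes a benchmark is simply a fixed list of (prompt, reference) pairs plus a per-item metric, and that a model is any function from prompt to text. `Benchmark`, `exact_match`, `toy_qa`, `model_v1`, and `model_v2` are hypothetical names for illustration, not part of any evaluation library.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical convention for this sketch: a model is any function prompt -> text.
Model = Callable[[str], str]


@dataclass
class Benchmark:
    """A hypothetical benchmark: fixed (prompt, reference) items plus a per-item metric."""
    name: str
    items: list[tuple[str, str]]
    metric: Callable[[str, str], float]

    def evaluate(self, model: Model) -> float:
        """Return the mean metric score of `model` across all items."""
        scores = [self.metric(model(prompt), ref) for prompt, ref in self.items]
        return sum(scores) / len(scores)


def exact_match(prediction: str, reference: str) -> float:
    """Score 1.0 if the strings match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


toy_qa = Benchmark(
    name="toy-qa",
    items=[("Capital of France?", "Paris"), ("2 + 2 =", "4"), ("Opposite of hot?", "cold")],
    metric=exact_match,
)


# Two hypothetical model versions, stubbed as lookup tables.
def model_v1(prompt: str) -> str:
    return {"Capital of France?": "Paris"}.get(prompt, "unknown")


def model_v2(prompt: str) -> str:
    return {"Capital of France?": "paris", "2 + 2 =": "4"}.get(prompt, "unknown")


# Measuring progress: score each version on the same fixed benchmark.
for version, model in [("v1", model_v1), ("v2", model_v2)]:
    print(version, round(toy_qa.evaluate(model), 3))  # prints "v1 0.333", then "v2 0.667"
```

Keeping the items and metric fixed is what makes scores from successive versions comparable. Exact match is only one possible metric; it suits short, closed-form answers and is too strict for free-form text.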

LLM Benchmarks Study Using Data Subsampling (WillowTree)

LLM benchmarks provide a starting point for evaluating generative AI models across a range of tasks. Learn where these benchmarks are useful and where they fall short. Large language models can seem like a double-edged sword. This article covers how to evaluate LLM systems using evaluation metrics and benchmark datasets, along with tried-and-true best practices, common pitfalls, and handy tips for benchmarking your LLM's performance. Whether you're just starting out or looking for a quick refresher, these guidelines will keep your evaluation strategy on solid ground. By combining insights from standardized benchmarks and emerging dynamic frameworks, LLM evaluation can balance scalability, depth, and adaptability.
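
Running a model on every item of a large benchmark can be expensive, which is one motivation for evaluating on a data subsample. The Python sketch below is an assumption about how such a setup might look, not a reconstruction of the WillowTree study's method: it draws a reproducible random subsample, scores only those items, and reports accuracy with the binomial standard error sqrt(p(1 - p)/k). The helper `subsampled_accuracy`, its `is_correct` callback, and the toy data are hypothetical.

```python
import math
import random
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def subsampled_accuracy(
    items: Sequence[T], is_correct: Callable[[T], bool], k: int, seed: int = 0
) -> tuple[float, float]:
    """Estimate accuracy from k randomly chosen items (hypothetical helper).

    Only the sampled items are passed to `is_correct`, so the expensive model
    call runs k times instead of len(items) times. Returns (accuracy, standard
    error) using the binomial approximation sqrt(p * (1 - p) / k).
    """
    if not 1 <= k <= len(items):
        raise ValueError("k must be between 1 and len(items)")
    rng = random.Random(seed)  # fixed seed keeps the subsample reproducible
    sample = rng.sample(list(items), k)  # sampling without replacement
    p = sum(1 for item in sample if is_correct(item)) / k
    stderr = math.sqrt(p * (1 - p) / k)  # ignores the finite-population correction
    return p, stderr


# Hypothetical benchmark of 10,000 items and a stand-in "model" that is correct
# whenever the item index is not divisible by 4 (true accuracy: 0.75).
items = range(10_000)
accuracy, stderr = subsampled_accuracy(items, lambda i: i % 4 != 0, k=400)
print(f"accuracy = {accuracy:.3f} +/- {1.96 * stderr:.3f} (approx. 95% interval)")
```

The standard error shrinks roughly as 1/sqrt(k), so quadrupling the subsample halves it. The sketch omits the finite-population correction, which only matters when k is a large fraction of the benchmark.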

What Are LLM Benchmarks? Types, Challenges, and Evaluators

There are several types of benchmarks used to evaluate LLMs, each focusing on a different aspect of their functionality. Below are some of the most widely recognized categories:

1. Natural language understanding (NLU). Purpose: assess how well an LLM understands and interprets human language.

Language model evaluation has evolved from simple text completion to sophisticated multimodal reasoning tasks, and this shift shapes how AI systems will be assessed in the future.

Benchmarking involves comparing a candidate LLM against a set of other LLMs using a standard metric, ideally on a well-defined task, to assess its performance. For example, we can compare a list of LLMs on text translation using a well-established machine translation metric such as BLEU [1].

LLM bias isn't a monolithic entity; it manifests in various forms, each requiring specific detection and measurement strategies. Categorizing these biases is the first critical step in developing a comprehensive benchmarking approach.
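
The translation comparison described above can be sketched in Python. This is a simplified, unsmoothed corpus-level BLEU with one reference per sentence and naive whitespace tokenization; those simplifications are assumptions of the sketch. Published scores normally come from a standardized implementation such as sacreBLEU so that tokenization is consistent across papers. The sentences and the `model_a`/`model_b`/`model_c` outputs are made up for illustration.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-gram of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: list[str], references: list[str], max_n: int = 4) -> float:
    """Unsmoothed corpus-level BLEU: one reference per sentence, whitespace tokens."""
    if len(hypotheses) != len(references):
        raise ValueError("need exactly one reference per hypothesis")
    matches = [0] * max_n  # clipped n-gram matches for orders 1..max_n
    totals = [0] * max_n   # hypothesis n-gram counts for orders 1..max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngram_counts(h, n), ngram_counts(r, n)
            # Clip each hypothesis n-gram by how often it occurs in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(matches) == 0:
        return 0.0  # geometric mean is zero if any order has no matches
    # Geometric mean of the modified n-gram precisions, with uniform weights.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return bp * math.exp(log_precision)


# Made-up references and model outputs, for illustration only.
references = ["the cat sat on the mat", "there is a book on the table"]
outputs = {
    "model_a": ["the cat sat on the mat", "there is a book on the table"],
    "model_b": ["the cat sat on a mat", "there is a book on a table"],
    "model_c": ["the cat is on the mat", "a book is on the table"],
}
ranking = sorted(outputs, key=lambda m: corpus_bleu(outputs[m], references), reverse=True)
for name in ranking:
    print(name, round(corpus_bleu(outputs[name], references), 3))
```

Unsmoothed BLEU collapses to zero whenever one n-gram order has no matches, which is why `model_c` scores 0.0 despite sharing most of its words with the references. Small-sample comparisons therefore usually apply smoothing, and it is worth remembering that BLEU rewards surface overlap rather than meaning.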
