Link-Context Learning for Multimodal LLMs

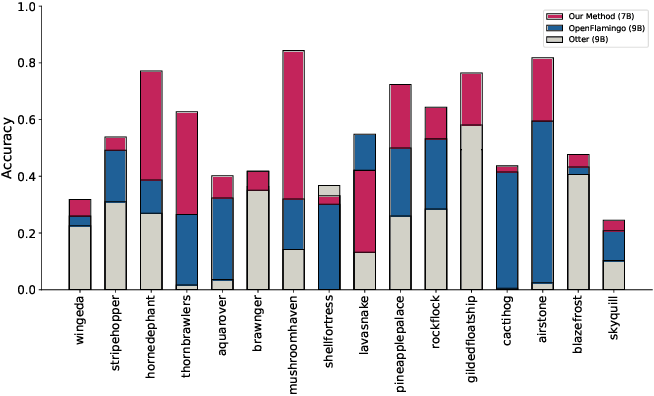

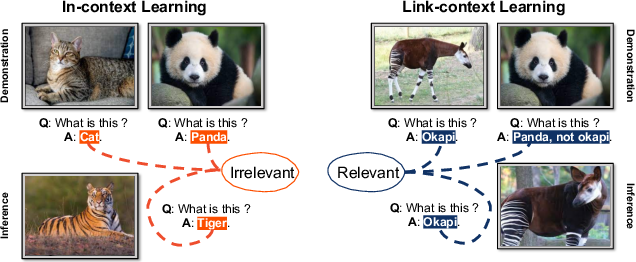

In this work, we propose link-context learning (LCL), which emphasizes "reasoning from cause and effect" to augment the learning capabilities of multimodal large language models (MLLMs). LCL goes beyond traditional in-context learning (ICL) by explicitly strengthening the causal relationship between the support set and the query set.

Inspired by in-context learning (ICL), we propose link-context learning (LCL), which requires MLLMs to acquire knowledge about new concepts from the conversation while retaining their existing knowledge for accurate question answering. By strengthening causal relationships, LCL enhances the learning capabilities of MLLMs so that they can more effectively recognize unseen images and understand new concepts. This is my meeting note for Link-Context Learning for Multimodal LLMs. It presents a demo of how to use positive and negative examples to teach an LLM to recognize a novel concept.
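The positive and negative examples mentioned in the note can be pictured as a support set that precedes the query in a single conversation. The following is a minimal sketch of that idea, not the paper's implementation: the `Example` class, the `build_link_context_prompt` function, the message dictionary layout, and the file names are all hypothetical, and no real model or image loader is called.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Example:
    """One support example (hypothetical structure for illustration)."""
    image_path: str  # placeholder path; no image is loaded in this sketch
    label: str       # concept name the model should associate with the image


def build_link_context_prompt(
    positives: List[Example],
    negatives: List[Example],
    query_image: str,
    question: str = "What is in this image?",
) -> List[Dict[str, str]]:
    """Interleave positive and negative support examples, then append the query."""
    # Alternate positive and negative examples so the contrast is explicit.
    support = [ex for pair in zip(positives, negatives) for ex in pair]
    # Keep any leftovers when the two lists differ in length.
    shorter = min(len(positives), len(negatives))
    support += positives[shorter:] + negatives[shorter:]

    messages: List[Dict[str, str]] = []
    for ex in support:
        messages.append({"role": "user", "image": ex.image_path, "text": question})
        messages.append({"role": "assistant", "text": f"This is a {ex.label}."})
    # The query repeats the same question, so its answer depends on the support set.
    messages.append({"role": "user", "image": query_image, "text": question})
    return messages


if __name__ == "__main__":
    demo = build_link_context_prompt(
        positives=[Example("novel_1.png", "mon-cat")],
        negatives=[Example("cat_1.png", "cat")],
        query_image="query.png",
    )
    for message in demo:
        print(message)
```

The resulting message list would then be passed to whatever MLLM interface is in use; the point of the sketch is only that the query's answer is meant to follow from the labelled support examples that come before it.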

The paper presents a method called link-context learning (LCL) that enhances the learning abilities of multimodal large language models (MLLMs). LCL aims to enable MLLMs to recognize new images and understand unfamiliar concepts without the need for training. A separate paper on in-context learning proposes two strategies for selecting in-context samples and systematically examines the performance of several state-of-the-art LLMs across 13 stance classification datasets using these selection strategies. It also investigates cross-domain stance classification based on in-context learning.

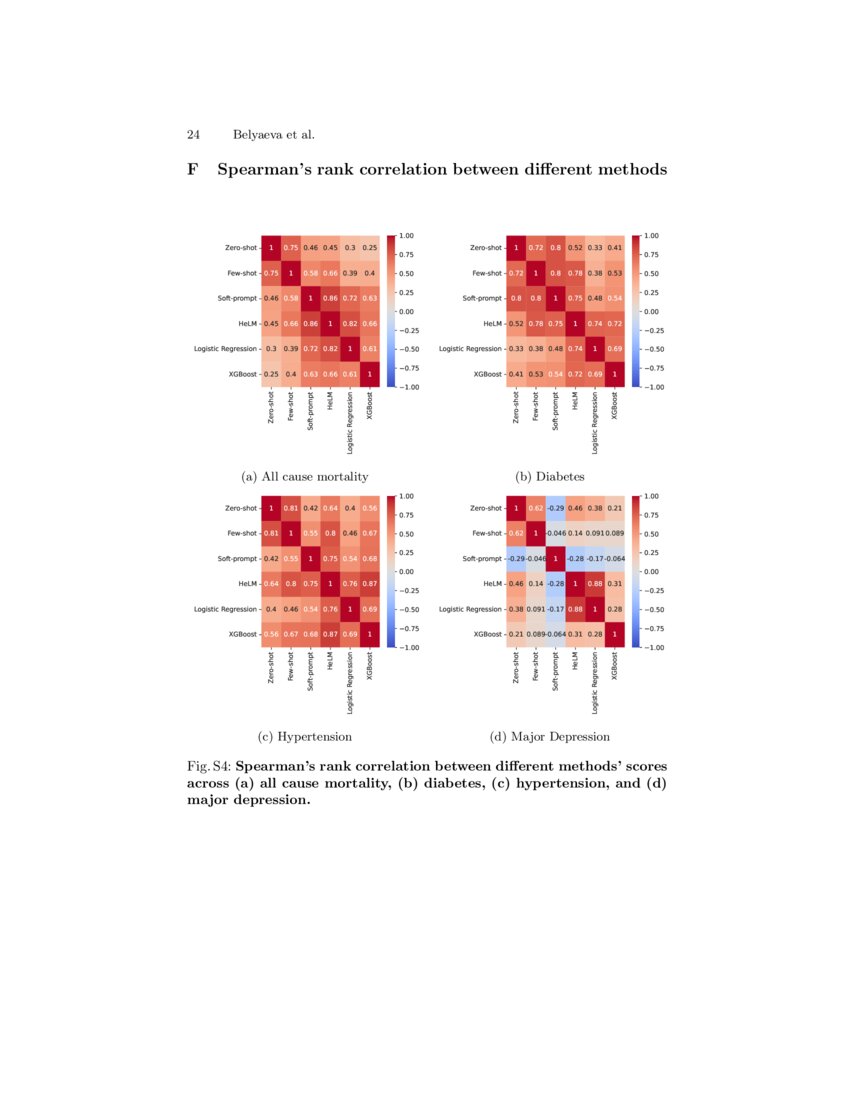
