Learning Less to Learn Better: Dropout in Deep Machine Learning

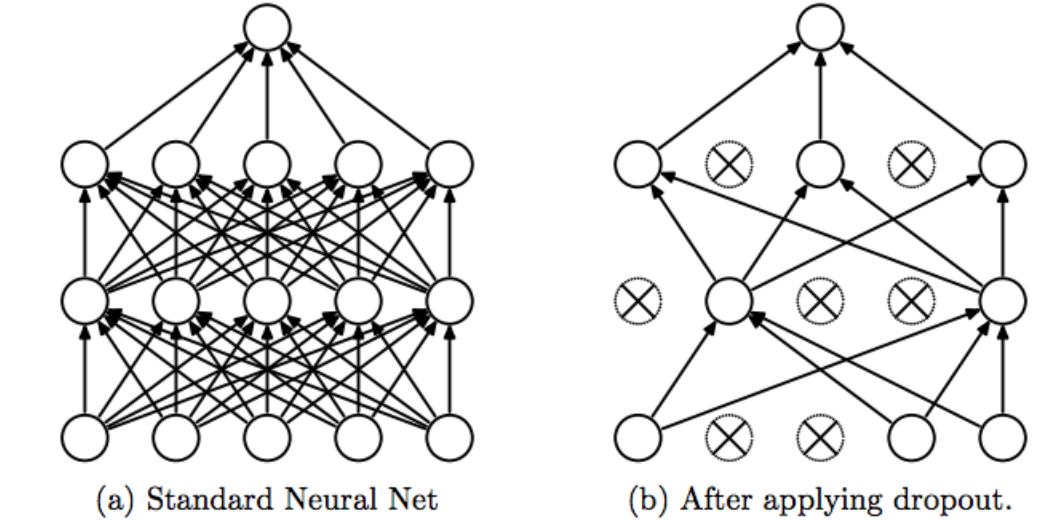

Dropout in Deep Machine Learning, by Amar Budhiraja (Medium).

Learning in many ways: the input and output of the network do not change; the only thing that changes is the mapping between them. In effect, the network learns the same thing in several different ways. Dropout is a regularization technique used in neural networks to prevent overfitting and improve generalization. Overfitting occurs when a neural network becomes too specialized to the training data, capturing noise and specific details that do not generalize to unseen data.

Dropout is a technique that randomly disables (or “drops”) a fraction of neurons during each training iteration. This prevents the network from becoming too dependent on certain nodes. It can also be viewed as a regularization method that approximates training a large number of neural networks with different architectures in parallel: during training, some number of layer outputs are randomly ignored, or “dropped out.”

Introduced by Hinton et al. in 2012, dropout has stood the test of time as a regularizer for preventing overfitting in neural networks. A later study reports that dropout can also mitigate underfitting when used at the start of training. Used effectively, dropout helps prevent overfitting and improves a model's performance on unseen data.
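The random "dropping" described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used by any particular framework: it assumes the common "inverted dropout" variant, in which the surviving activations are scaled by `1 / (1 - rate)` during training so that the expected activation stays the same and no rescaling is needed at inference time. The function name `dropout` and its parameters are hypothetical names chosen for this example.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative sketch only).

    Each activation is zeroed with probability `rate`; survivors are
    scaled by 1 / (1 - rate) so the expected value is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time dropout is a no-op.
        return list(activations)
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


# Seeded generator so the demonstration is repeatable.
rng = random.Random(0)
layer_output = [1.0] * 10_000

dropped = dropout(layer_output, rate=0.5, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)

print(f"fraction dropped: {zero_fraction:.2f}")  # close to the rate, 0.5
print(f"mean activation:  {mean_after:.2f}")     # close to the original mean, 1.0
```

Because a fresh random mask is drawn on every call, each training iteration effectively trains a different thinned sub-network, which is one way to read the "many networks in parallel" interpretation.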

In this post, I will primarily discuss the concept of dropout in neural networks, specifically deep nets, followed by an experiment to see how it influences results in practice: implementing a deep net on a standard dataset and observing the effect of dropout.

So, there you have it: the magic of dropout in deep learning. It is a powerful technique that can help prevent overfitting, improve generalization, and, by some accounts, even reduce computational cost.

This section will discuss implementing dropout in popular deep learning frameworks like TensorFlow, Keras, and PyTorch. Additionally, we'll explore an example code snippet and discuss how to tune the dropout rate for optimal performance.
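As one possible illustration of the framework usage mentioned above, here is a small PyTorch sketch. It is not taken from the original article: the class name `SmallNet`, the layer sizes, and the default `dropout_rate` of 0.5 are all assumptions chosen for the example. It relies only on `torch.nn.Dropout` and on the `train()` / `eval()` mode switch, which enable and disable dropout respectively.

```python
import torch
from torch import nn


class SmallNet(nn.Module):
    """Hypothetical two-layer classifier with dropout after the hidden layer."""

    def __init__(self, in_features=20, hidden=64, classes=3, dropout_rate=0.5):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Dropout(p=dropout_rate),  # p is the probability of zeroing a unit
            nn.Linear(hidden, classes),
        )

    def forward(self, x):
        return self.layers(x)


torch.manual_seed(0)
model = SmallNet(dropout_rate=0.5)
x = torch.randn(4, 20)  # a made-up batch of 4 inputs

# Training mode: a new random mask is sampled on every forward pass.
model.train()
train_a = model(x)
train_b = model(x)

# Evaluation mode: dropout is disabled, so outputs are deterministic.
model.eval()
with torch.no_grad():
    eval_a = model(x)
    eval_b = model(x)

print("train passes identical:", torch.equal(train_a, train_b))
print("eval passes identical: ", torch.equal(eval_a, eval_b))
```

To tune the rate, a common approach is to treat `dropout_rate` as a hyperparameter: train the same architecture with a few candidate values and keep the one with the best validation performance. Which values work best depends on the model and the dataset.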


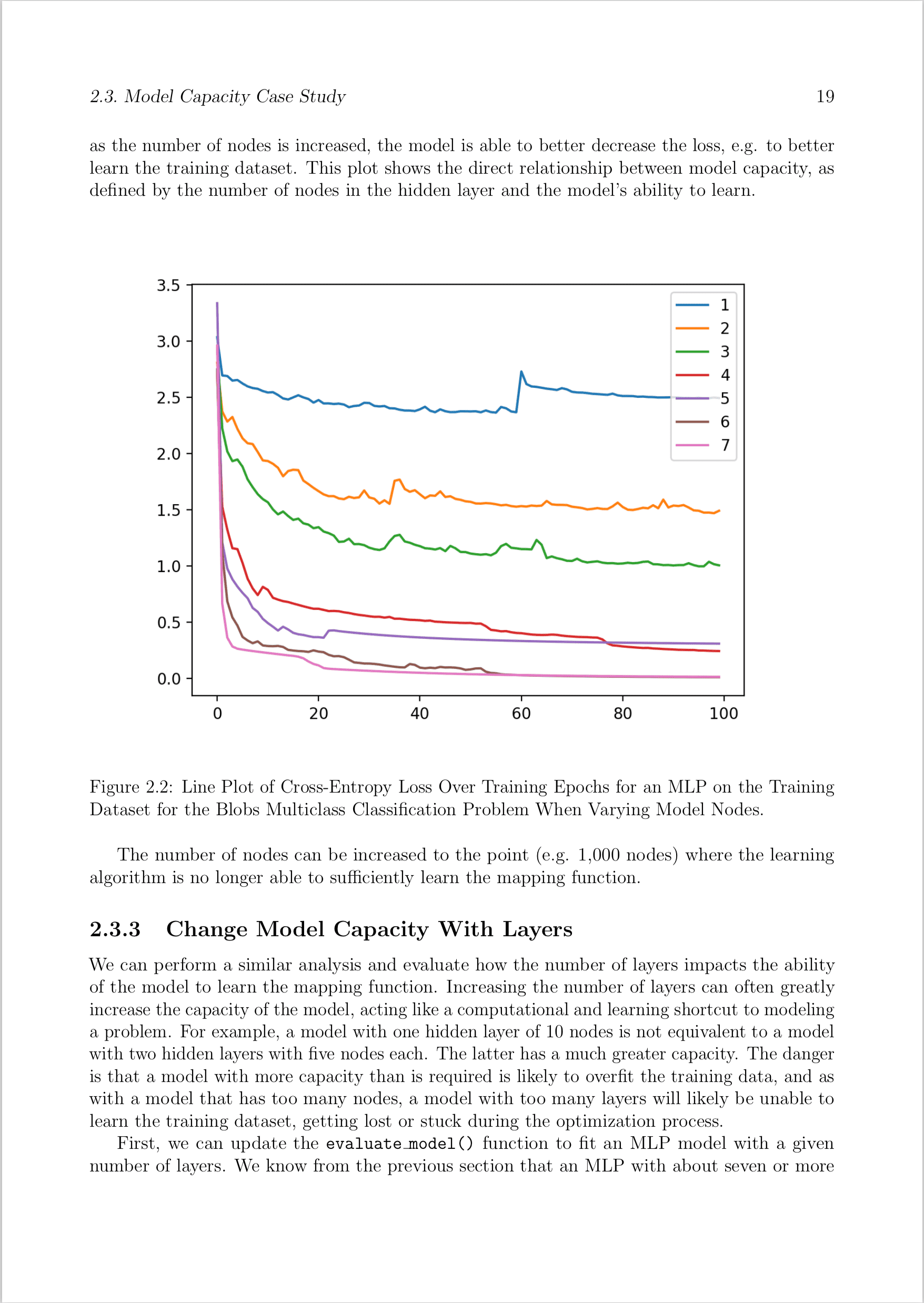

Better Deep Learning
