LangChain with Hugging Face's Inference API (No OpenAI Credits Required)

LangChain with Hugging Face's Inference API (no OpenAI credits required!): we combine LangChain with GPT-2 and Hugging Face, a platform hosting cutting-edge LLMs and other deep learning AI models. LangChain's HuggingFaceInference integration lets you call a Hugging Face-hosted model as an LLM.

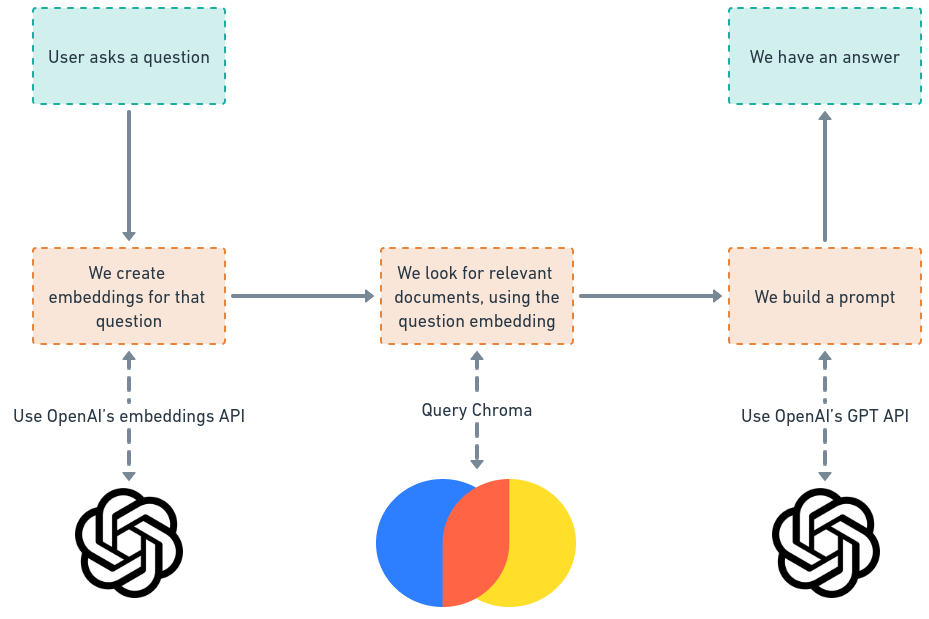

To conclude, we successfully used open-source Hugging Face models with LangChain. Using these approaches, one can easily avoid paying for OpenAI API credits. By following this tutorial, you can create text generation applications without the need for OpenAI credits, which makes them accessible to a wider range of developers.

The same question comes up in LangChain GitHub issue #2182, "Using langchain without openai api?": "I'm wondering if we can use LangChain without an LLM from OpenAI. I've tried replacing OpenAI with "bloom-7b1" and "flan-t5-xl" and used the agent from LangChain according to Visual ChatGPT (the microsoft/visual-chatgpt repository on GitHub). Here is my demo: def __init__(self, device): ..."

The first thing to do is create a free Hugging Face API token: select "New token" with token type "Read" and copy the key. Then use the LLM to predict the next words after the text in question.
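The token step and the "predict the next words" step can be sketched roughly as follows. This is a minimal sketch, not the tutorial's own code: it assumes the copied key is stored in an environment variable named `HUGGINGFACEHUB_API_TOKEN`, that the third-party `huggingface_hub` package is installed, and that the chosen model (here `gpt2`, as an illustration) is actually served by the Inference API at the time you call it. The helper names `get_hf_token` and `complete` are hypothetical.

```python
import os


def get_hf_token(env=None):
    """Return the Hugging Face token from the environment, or raise if missing.

    Assumption: the key copied from the "New token" dialog was exported as
    HUGGINGFACEHUB_API_TOKEN. `env` is only there to make the helper testable.
    """
    env = os.environ if env is None else env
    token = env.get("HUGGINGFACEHUB_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "Set HUGGINGFACEHUB_API_TOKEN to a Hugging Face token of type 'Read'."
        )
    return token


def complete(prompt, model_id="gpt2", max_new_tokens=20):
    """Ask a hosted model to continue `prompt` (performs a network call).

    Assumption: `model_id` is available on the Inference API; swap in any
    text-generation model you have access to.
    """
    # Imported lazily so the token helper works without the package installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(model=model_id, token=get_hf_token())
    return client.text_generation(prompt, max_new_tokens=max_new_tokens)
```

With the token exported, calling `complete("The capital of France is")` should return the model's continuation as a string. Keeping the token in the environment rather than in the source file avoids committing it by accident.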

This article explains how to create a retrieval-augmented generation (RAG) chatbot in LangChain using open-source models from the Hugging Face Serverless Inference API. You will see how to call large language models (LLMs) and embedding models from the Hugging Face Serverless Inference API using LangChain.

Hugging Face's API allows users to use models hosted on its servers without the need for local installations. This approach offers a wide selection of models to choose from, including StableLM from Stability AI and Dolly from Databricks.

Here is an example of how you can access the HuggingFaceEndpoint integration of the serverless Inference Providers API: `HuggingFaceEndpoint(repo_id=repo_id, max_length=128, temperature=0.5, huggingfacehub_api_token=huggingfacehub_api_token, provider="auto")`. Set your provider in the `provider` argument; the available providers are listed at hf.co/settings/inference-providers.

Explore the integration of LangChain with GPT-2 and Hugging Face in this 25-minute tutorial video. Learn how to use cutting-edge LLMs and deep learning AI models without incurring OpenAI charges.
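The HuggingFaceEndpoint call quoted above can be written out as a small sketch. The keyword arguments (`repo_id`, `max_length=128`, `temperature=0.5`, `huggingfacehub_api_token`, `provider="auto"`) are taken from that snippet; the helper names `endpoint_kwargs` and `build_llm`, the `HUGGINGFACEHUB_API_TOKEN` environment variable, and the assumption that the third-party `langchain_huggingface` package is installed are illustrative choices, not part of the original.

```python
import os


def endpoint_kwargs(repo_id, token, provider="auto"):
    """Collect the keyword arguments quoted in the text for HuggingFaceEndpoint."""
    return {
        "repo_id": repo_id,  # model repository on the Hugging Face Hub
        "max_length": 128,
        "temperature": 0.5,
        "huggingfacehub_api_token": token,
        "provider": provider,  # "auto" defers to your Inference Providers settings
    }


def build_llm(repo_id, token=None):
    """Create the LangChain LLM wrapper (needs langchain_huggingface installed).

    Assumption: if no token is passed, it is read from HUGGINGFACEHUB_API_TOKEN.
    """
    # Imported lazily so endpoint_kwargs can be inspected without the package.
    from langchain_huggingface import HuggingFaceEndpoint

    token = token or os.environ["HUGGINGFACEHUB_API_TOKEN"]
    return HuggingFaceEndpoint(**endpoint_kwargs(repo_id, token))
```

A typical use would be `llm = build_llm(repo_id)` followed by `llm.invoke("What is LangChain?")`, where `repo_id` names a model that your selected provider serves.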



