Interpretability Of Deep Neural Networks With Sparse Autoencoders Ppt

In mathematical logic, interpretability is a relation between formal theories that expresses the possibility of interpreting or translating one into the other. Assume T and S are formal theories. Roughly, T is interpretable in S if the language of T can be translated into the language of S in such a way that S proves the translation of every theorem of T. AI interpretability, by contrast, is the ability to understand and explain the decision-making processes that power artificial intelligence models.
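
The logical notion of interpretability between two theories can be written compactly. This is a simplified sketch in standard notation: the symbol τ for the translation and 𝓛(·) for the set of sentences of a theory's language are notational choices made here, not taken from any particular source. A complete definition also requires τ to be a genuine translation (it commutes with the logical connectives and relativizes quantifiers to a domain formula of S); those details are omitted.

```latex
T \text{ is interpretable in } S
\quad\Longleftrightarrow\quad
\exists\, \tau : \mathcal{L}(T) \to \mathcal{L}(S)\;
\forall \varphi \in \mathcal{L}(T):\;
\bigl( T \vdash \varphi \;\Longrightarrow\; S \vdash \tau(\varphi) \bigr)
```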

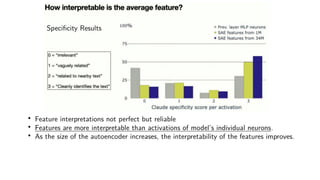

Model interpretability is about making a machine learning model's decisions understandable to humans. Instead of acting as a black box, where inputs go in and predictions come out with no indication of how one led to the other, an interpretable model shows why it made a particular choice. Models are interpretable when humans can readily understand the reasoning behind their predictions and decisions; the more interpretable a model is, the easier it is to comprehend and trust. While interpretability may seem like a common-sense term, it can have different meanings in different contexts, and it plays a specific role in the progress of science. Explainability refers to a model's ability to provide clear, understandable explanations for its predictions or decisions. Interpretability, on the other hand, concerns understanding how a model works and why it makes the predictions it does.
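
To make the idea of a model that "shows why it made a particular choice" concrete, here is a minimal Python sketch, assuming a hand-written linear scoring model. The feature names, weights, and bias are hypothetical values chosen for illustration, not learned from real data. Because a linear model's score is a sum of per-feature terms, each term can be read directly as that feature's contribution to the decision.

```python
# Hypothetical linear scoring model: the weights and bias below are
# illustrative assumptions, not fitted to any real dataset.
from typing import Dict, Tuple

WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS: float = -1.0


def predict_with_explanation(features: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the model score and each feature's additive contribution to it."""
    # In a linear model the score decomposes exactly into terms w_i * x_i,
    # so the contributions plus the bias add back up to the prediction.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return score, contributions


if __name__ == "__main__":
    applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}
    score, contribs = predict_with_explanation(applicant)
    print(f"score = {score:.2f}")
    # List features by absolute contribution, most influential first.
    for name, value in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"  {name:>15}: {value:+.2f}")
```

Running it prints the score followed by each feature's signed contribution. This exact additive decomposition is a property of linear models; deep networks do not decompose this way in general, which is one reason dedicated interpretability tools exist.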

Lipton (2016) presents a framework under which the interpretability of models can be discussed and compared. In that paper, the author outlines both what we hope to gain from interpretable ML systems and the ways in which interpretability can be achieved. Interpretability can also mean understanding the overall consequences of a model and ensuring that its predictions constitute accurate knowledge aligned with the original research goal.

One study conducted a contextual inquiry (n=11) and a survey (n=197) of data scientists to observe how they use interpretability tools to uncover common issues that arise when building and evaluating ML models. Its results indicate that data scientists over-trust and misuse interpretability tools.

Interpretability also refers to the degree to which a human can predict the outcome of a model or understand the reasons behind its decisions. It is associated with related terms such as comprehensibility, understandability, and explainability, each of which captures a different aspect of interpretability.
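
The idea that a human can predict a model's outcome (what Lipton calls simulatability) can be illustrated with an ordered rule list. The sketch below is hypothetical: the features, thresholds, and labels are invented for illustration. Because the first matching rule decides the output, a reader can trace the same steps by hand and reach the same answer, and the model reports which rule fired.

```python
# Hypothetical ordered rule list for routing messages; all features,
# thresholds, and labels are illustrative assumptions.
from typing import Callable, Dict, List, Tuple

Rule = Tuple[str, Callable[[Dict[str, float]], bool], str]

RULES: List[Rule] = [
    ("sender_known == 1", lambda x: x["sender_known"] == 1, "inbox"),
    ("num_links > 5", lambda x: x["num_links"] > 5, "spam"),
]
DEFAULT_LABEL = "inbox"


def predict(x: Dict[str, float]) -> Tuple[str, str]:
    """Return the predicted label and a description of the rule that produced it."""
    # Rules are checked in order and the first match wins, so the whole
    # decision procedure is short enough for a person to simulate.
    for description, condition, label in RULES:
        if condition(x):
            return label, description
    return DEFAULT_LABEL, "no rule matched (default)"


if __name__ == "__main__":
    print(predict({"sender_known": 0, "num_links": 8}))
```

The trade-off is expressiveness: a list this short is easy to simulate but can only capture simple decision boundaries.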