In-Context Retrieval-Augmented Language Models (DeepAI)

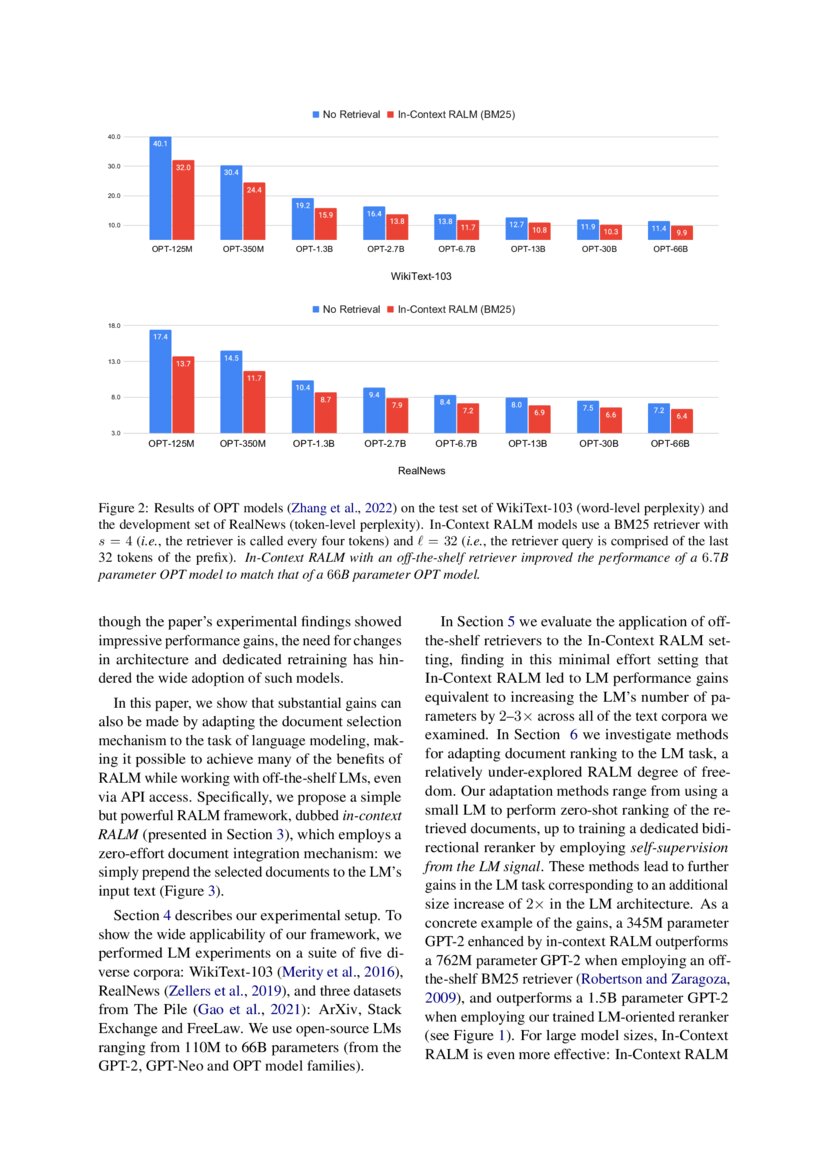

We show that In-Context RALM, which builds on off-the-shelf general-purpose retrievers, provides surprisingly large LM gains across model sizes and diverse corpora. We also demonstrate that the document retrieval and ranking mechanism can be specialized to the RALM setting to further boost performance.

Retrieval-augmented language models (RALMs) add an operation that retrieves one or more documents from an external corpus C and condition the LM's predictions on these documents. A thorough exploration of In-Context RALM gives researchers and practitioners insight into the potential of retrieval-augmented approaches.

In summary, RAG uses a retriever during model inference to fetch relevant documents, which are then concatenated with the original input. In the in-context learning setting, a few examples are placed before the user's input, and the combined text is fed to the LLM.
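The retrieve-then-concatenate step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of any particular paper: the word-overlap scorer stands in for a real off-the-shelf retriever, and the names `overlap_score`, `retrieve`, and `build_ralm_input` are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query, document):
    """Toy relevance score: number of shared tokens (assumption, not BM25)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(document))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, corpus, k=1):
    """Return the k documents from the corpus that best match the query."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_ralm_input(prefix, corpus, k=1):
    """Prepend the retrieved documents to the prefix, leaving the LM untouched."""
    docs = retrieve(prefix, corpus, k)
    return "\n\n".join(docs + [prefix])


corpus = [
    "The Eiffel Tower is located in Paris, France.",
    "Mount Everest is the highest mountain on Earth.",
    "Python is a programming language created by Guido van Rossum.",
]
prefix = "The highest mountain on Earth is"
prompt = build_ralm_input(prefix, corpus, k=1)
print(prompt)
```

The resulting `prompt` string would then be passed to any language model as ordinary input. Because the documents are only prepended to the text, the model's architecture and weights need no changes.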

Related work on retrieval-augmented encoder-decoder language models underscores their potential for in-context learning and encourages further research in this direction. Retrieval-augmented generation, or RAG, is a process applied to large language models to make their outputs more relevant for the end user.

In-context learning (ICL) is an advanced AI capability introduced in the seminal research paper "Language Models are Few-Shot Learners," which unveiled GPT-3. Unlike supervised learning, where a model undergoes a training phase with backpropagation to alter its parameters, ICL relies entirely on pretrained language models and keeps their parameters unchanged. The model uses only the prompt to guide its output.
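In-context learning, where examples are placed before the user's input, can be illustrated with a small prompt builder. This is a sketch under assumptions: the `Input:`/`Output:` template and the function name `build_few_shot_prompt` are hypothetical choices for illustration, not a format prescribed by the source.

```python
def build_few_shot_prompt(examples, user_input, instruction=""):
    """Place worked examples before the user's input; no parameters are updated."""
    parts = []
    if instruction:
        parts.append(instruction)
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block is left open so the model completes the output.
    parts.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(parts)


examples = [
    ("great movie", "positive"),
    ("boring plot", "negative"),
]
few_shot_prompt = build_few_shot_prompt(
    examples,
    "loved the soundtrack",
    instruction="Classify the sentiment of each input.",
)
print(few_shot_prompt)
```

The task is conveyed entirely by the text of the prompt, so the same pretrained model can be pointed at a different task by swapping the examples.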
