Improving Model Runtime

Apply hydraulic best practices to make the model hydraulically stable before you add sediment. A large time step that iterates can take longer to run than a short time step that is stable. Try to ...

We assume that your goal is to find a configuration that maximizes the performance of your model. Sometimes, your goal is to maximize model improvement by a fixed deadline. In other cases, ...
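To see why a shorter but stable time step can win, here is a minimal arithmetic sketch. It assumes run time is roughly proportional to the total number of solver iterations (steps multiplied by iterations per step). The function name and all numbers are hypothetical and chosen only for illustration; they do not come from any particular hydraulic model.

```python
import math


def total_solver_iterations(duration_s, time_step_s, iterations_per_step):
    """Total solver iterations needed to simulate duration_s seconds."""
    steps = math.ceil(duration_s / time_step_s)
    return steps * iterations_per_step


# Hypothetical numbers, for illustration only.
duration = 86_400  # one simulated day, in seconds

# A 60 s step that needs 20 iterations per step to converge.
large_iterating = total_solver_iterations(duration, 60, 20)

# A 10 s step that is stable and converges in a single pass.
small_stable = total_solver_iterations(duration, 10, 1)

print(large_iterating, small_stable)
```

Under these assumed numbers the large step costs 28,800 iterations and the small step 8,640, so the shorter step does less total work. Real solvers have per-step overhead that this sketch ignores, so treat it as a way to reason about the trade-off rather than a predictor of run time.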

Discover proven methods to optimize and reduce the training time of machine learning models, improving efficiency and performance. In this article, I will show you a range of techniques for optimizing the task performance of machine learning models that I've used while working on AI at Amazon. We'll start by discussing how to tell whether your model needs improving and which measures are likely to yield the biggest performance gain.

Predicting the runtime performance of a deep learning (DL) model ahead of job execution is critical for boosting development productivity and reducing resource waste. Neural networks are becoming increasingly powerful, but speed remains a crucial factor in real-world applications. Whether you're running models in the cloud, on edge devices, or on personal hardware, optimizing them for speed can lead to faster inference, lower latency, and reduced resource consumption.

Model pruning reduces the number of parameters in a neural network by identifying and removing insignificant weights, with the aim of not compromising performance. This technique can significantly decrease model size and computation time.

We'll introduce some common strategies to improve model performance, including selecting the best algorithm, tuning model settings, and adding new features (also known as feature engineering). Instead of attempting to push beyond these limitations, it is more effective to build on a single model and improve it, an approach said to address up to 99% of model performance issues. The five steps outlined in this framework are presented as effective ways to improve the performance of a model.

Machine learning models can also be optimized with advanced techniques in GPU utilization, hyperparameter tuning, and data preprocessing for faster, more cost-efficient performance and better scalability.
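The pruning idea can be sketched in a few lines. The example below assumes magnitude-based pruning, where a weight counts as "insignificant" if its absolute value is among the smallest. The text does not say which criterion is meant, so this is one common choice rather than the method. The function name `magnitude_prune` and the sample weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of weights with the smallest-magnitude fraction set to 0.0.

    sparsity is the fraction of weights to zero out, between 0 and 1.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights for a single layer, flattened into a list.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, 0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Zeroing weights only saves time when the runtime can exploit the resulting sparsity, and accuracy should be re-measured after pruning, since removing too many weights can degrade the model.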

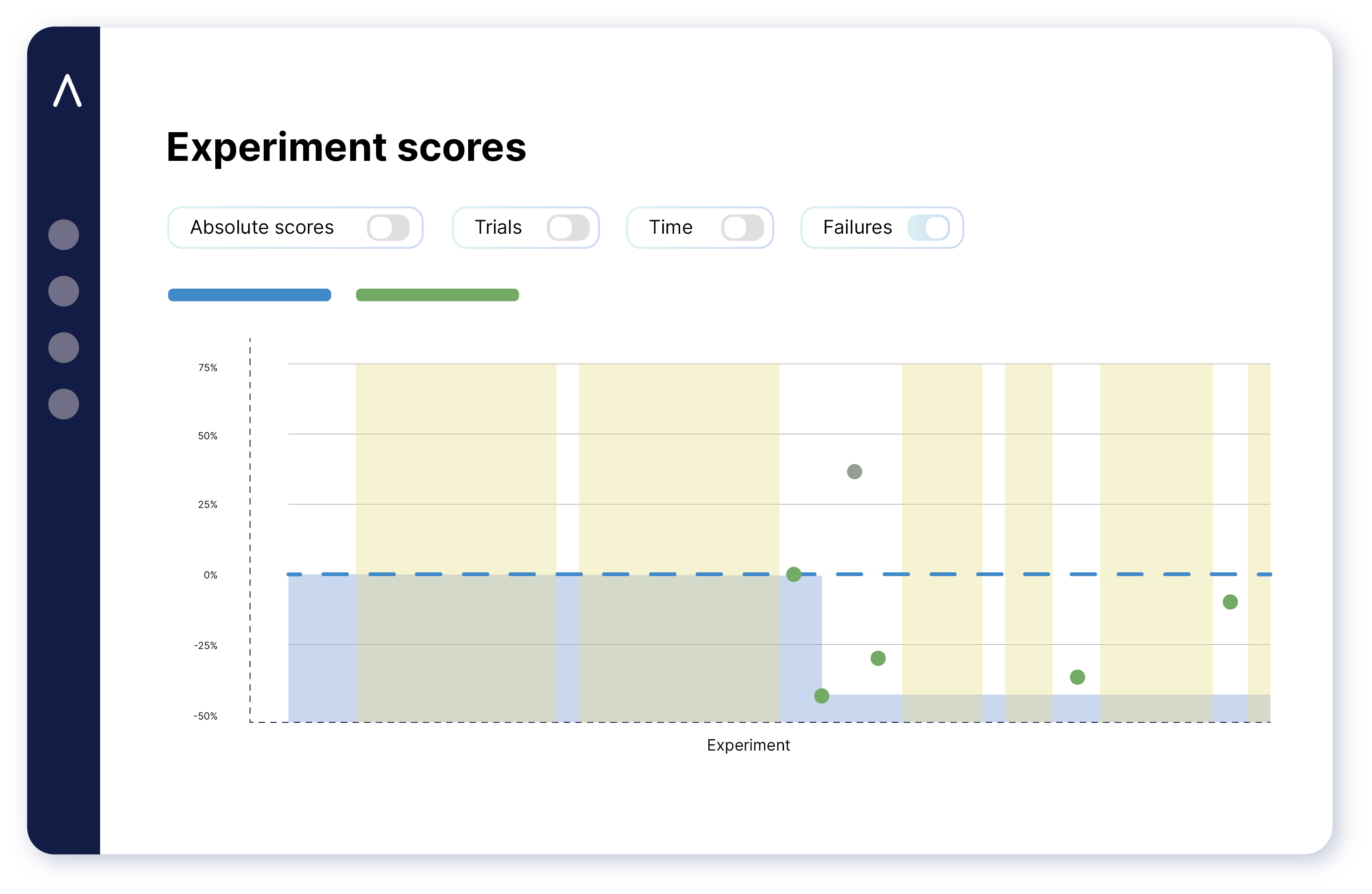

