Implicit Neural Representations With Periodic Activation Functions

Vincent Sitzmann, Julien Martel, Alexander Bergman, David Lindell

We demonstrate that periodic activation functions are ideally suited for representing complex natural signals and their derivatives using implicit neural representations. A follow-up line of work introduces sinusoidal trainable activation functions (STAF), designed to tackle the limitations of fixed activation functions by enabling networks to adaptively learn and represent complex signals with higher precision and efficiency.
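Why do sine activations suit derivatives? The derivative of a sine network is itself a sine network, because cos z = sin(z + π/2). Derivatives therefore keep the same representational form. By contrast, the derivative of a ReLU network is piecewise constant, and its second derivative is zero almost everywhere. The minimal stdlib-only sketch below builds a one-hidden-layer network f(x) = Σ aᵢ sin(ω₀(wᵢx + bᵢ)) and compares the closed-form derivative against a finite difference. The width, ω₀ = 30 and the uniform U(-1, 1) initialization are illustrative assumptions, not the authors' settings.

```python
import math
import random


def make_params(width, omega0=30.0, seed=0):
    # Toy one-hidden-layer sine network on a scalar input.
    # Weights and biases drawn from U(-1, 1): an assumption for illustration.
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0) for _ in range(width)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(width)]
    a = [rng.uniform(-1.0, 1.0) / width for _ in range(width)]  # output weights
    return w, b, a, omega0


def f(x, params):
    # f(x) = sum_i a_i * sin(omega0 * (w_i * x + b_i))
    w, b, a, omega0 = params
    return sum(ai * math.sin(omega0 * (wi * x + bi)) for wi, bi, ai in zip(w, b, a))


def df(x, params):
    # f'(x) = sum_i (a_i * omega0 * w_i) * sin(omega0 * (w_i * x + b_i) + pi/2):
    # again a sine network, with rescaled output weights and a pi/2 phase shift.
    w, b, a, omega0 = params
    return sum(ai * omega0 * wi * math.sin(omega0 * (wi * x + bi) + math.pi / 2)
               for wi, bi, ai in zip(w, b, a))


def central_difference(g, x, h=1e-5):
    # Second-order accurate numerical derivative used as a reference.
    return (g(x + h) - g(x - h)) / (2.0 * h)


params = make_params(width=16)
for x in (-0.5, 0.0, 0.3):
    numeric = central_difference(lambda t: f(t, params), x)
    print(f"x={x:+.1f}  closed form={df(x, params):+.6f}  finite diff={numeric:+.6f}")
```

The same argument applies layer by layer in deeper networks, which is why derivative-based supervision (gradients, Laplacians) remains well behaved for sine networks.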

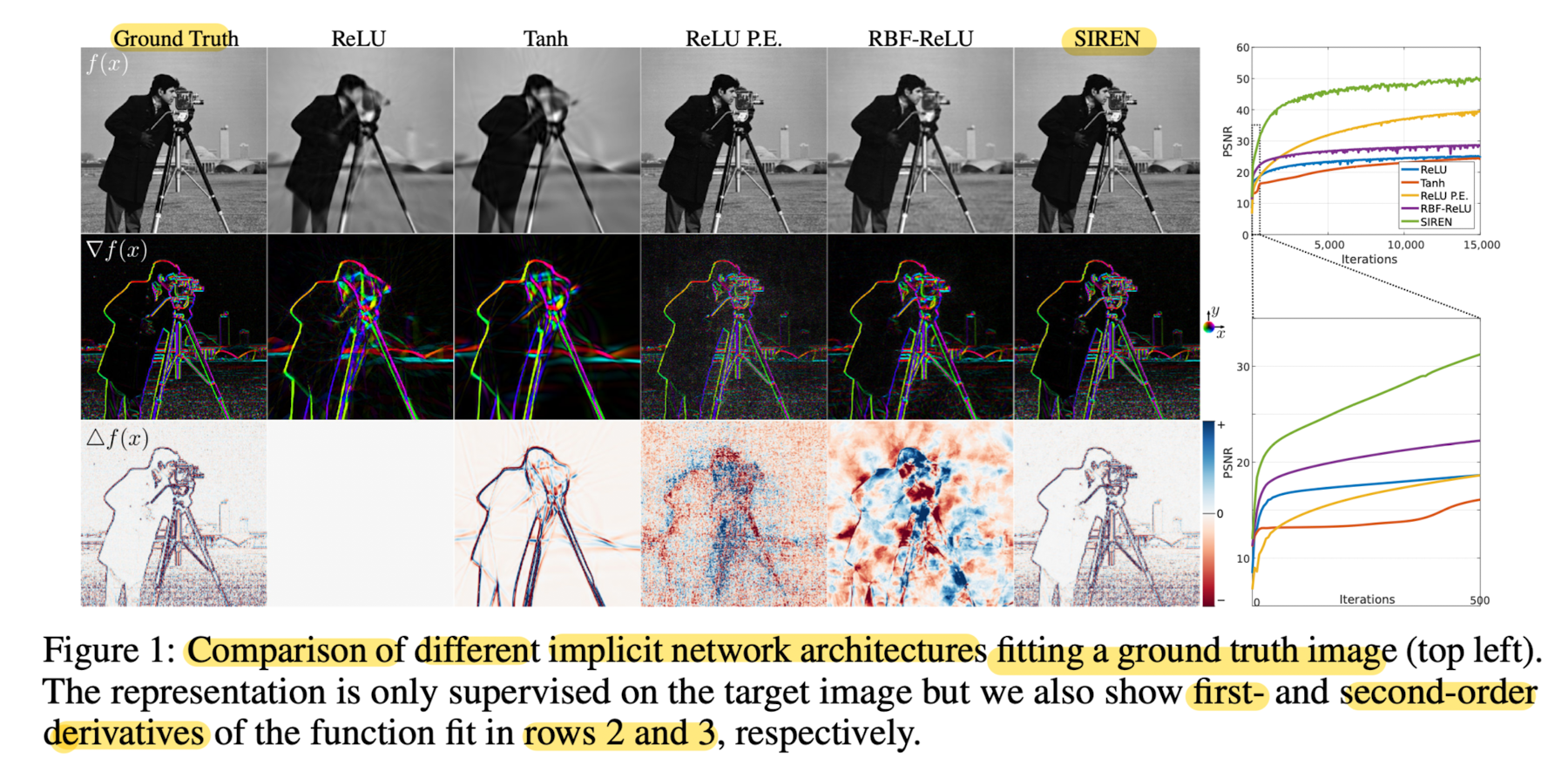

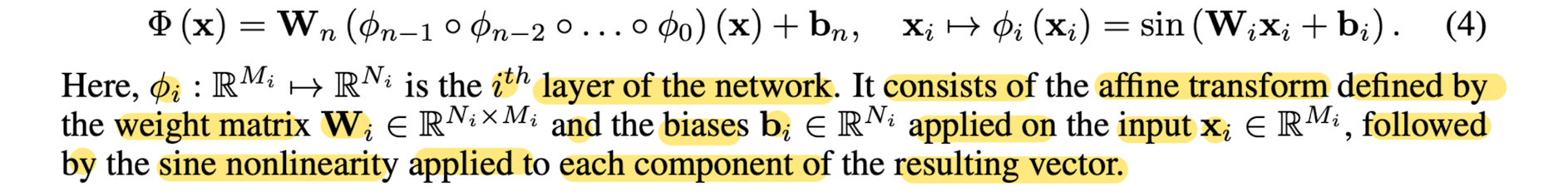

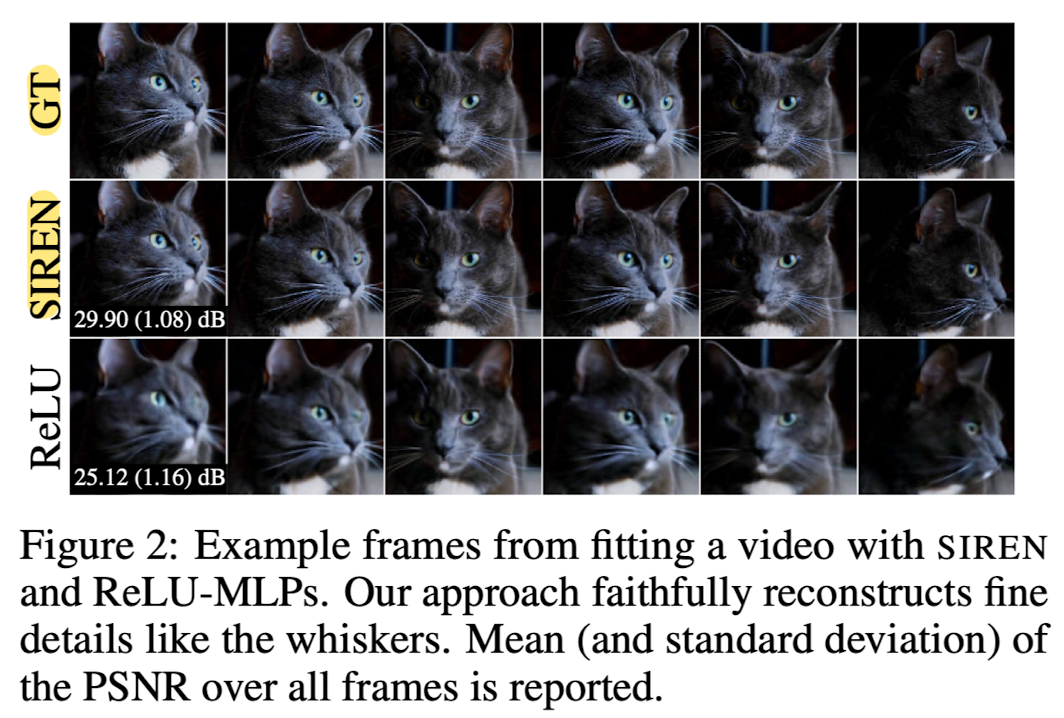

Let us explore, and attempt to get a feel for, the new paper from Stanford researchers on using periodic activation functions for implicit representations, written for readers coming from traditional ML. The authors propose leveraging periodic activation functions for implicit neural representations. They demonstrate that these networks, dubbed sinusoidal representation networks or SIRENs, are ideally suited for representing complex natural signals and their derivatives, and can solve challenging boundary value problems.

Existing INRs are limited in the set of frequencies they can support. To overcome this limitation, a novel variable periodic activation function for INRs has been proposed. It allows the supported frequency set to be tuned by adjusting the initialization range of the bias vector in the neural network.
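The sketch below contrasts the SIREN activation sin(ω₀z) with a variable-periodic activation. The latter is assumed here to take the form sin(ω₀(|z| + 1)z), where z = Wx + b. Take a neuron with unit weight and inputs in [-1, 1]: its pre-activation z sweeps [b - 1, b + 1]. Counting zero crossings over that window gives a rough measure of frequency. Shifting the bias leaves the frequency of a plain sine unchanged, but raises it for the variable-periodic form, which would explain how the bias initialization range can tune the supported frequencies. The weight initialization follows the SIREN scheme. `bias_range`, `count_zero_crossings` and ω₀ = 30 are hypothetical, illustrative choices, not the authors' code.

```python
import math
import random


def sine(z, omega0=30.0):
    # SIREN activation: sin(omega0 * z), with z = W x + b
    return math.sin(omega0 * z)


def variable_periodic(z, omega0=30.0):
    # Assumed variable-periodic form sin(omega0 * (|z| + 1) * z): the local
    # frequency omega0 * (2|z| + 1) grows with |z|, so larger biases reach
    # higher frequencies.
    return math.sin(omega0 * (abs(z) + 1.0) * z)


def init_layer(fan_in, fan_out, omega0, is_first, bias_range, rng):
    # SIREN weight init: U(-1/n, 1/n) in the first layer,
    # U(-sqrt(6/n)/omega0, sqrt(6/n)/omega0) in hidden layers (n = fan_in).
    bound = 1.0 / fan_in if is_first else math.sqrt(6.0 / fan_in) / omega0
    weights = [[rng.uniform(-bound, bound) for _ in range(fan_in)]
               for _ in range(fan_out)]
    # bias_range: hypothetical knob for the bias initialization range.
    biases = [rng.uniform(-bias_range, bias_range) for _ in range(fan_out)]
    return weights, biases


def layer_forward(x, weights, biases, act, omega0=30.0):
    # Applies act(W x + b) neuron by neuron.
    return [act(sum(w * xi for w, xi in zip(row, x)) + b, omega0)
            for row, b in zip(weights, biases)]


def count_zero_crossings(act, center, half_width=1.0, samples=20000, omega0=30.0):
    # Sign changes of act over [center - half_width, center + half_width]:
    # a rough proxy for the frequencies a neuron with bias = center can express.
    count = 0
    prev = act(center - half_width, omega0)
    for i in range(1, samples + 1):
        z = center - half_width + 2.0 * half_width * i / samples
        cur = act(z, omega0)
        if (prev < 0) != (cur < 0):
            count += 1
        prev = cur
    return count


for bias in (0.0, 5.0):
    print(f"bias={bias}: sine={count_zero_crossings(sine, bias)}, "
          f"variable={count_zero_crossings(variable_periodic, bias)}")

# Tiny two-layer forward pass on a 2-D coordinate (e.g. a pixel position).
rng = random.Random(0)
w1, b1 = init_layer(2, 8, 30.0, True, bias_range=1.0, rng=rng)
w2, b2 = init_layer(8, 8, 30.0, False, bias_range=1.0, rng=rng)
hidden = layer_forward([0.25, -0.5], w1, b1, variable_periodic)
out = layer_forward(hidden, w2, b2, variable_periodic)
print(len(out))
```

Under these assumptions, widening `bias_range` places more neurons in the high-|z| regime, where the activation oscillates faster.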

The paper proposes a novel class of implicit function representations using MLPs with sinusoidal activation functions and shows its applications in various domains. The reviewers praise the method's insight, breadth, and clarity, but also point out some weaknesses and missing related work.

We fit signed distance functions parameterized by implicit neural representations directly on point clouds. Compared to ReLU implicit representations, our periodic activations significantly improve the detail of objects (left) and the complexity of entire scenes (right).

A separate paper proposes a new activation function for implicit neural representations (INRs) based on the hyperbolic sine and cosine. It claims that this function improves INR performance on computer vision and fluid flow tasks compared to sinusoidal activation functions. Another work introduces a novel implicit neural representation that allows flexible tuning of the spectral bias, enhancing signal representation and optimization.
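Fitting an SDF to a point cloud can be illustrated with a heavily simplified loss. It has two terms. An on-surface term asks f to vanish at the points. An eikonal term asks ‖∇f‖ = 1, which a signed distance function satisfies almost everywhere but a generic function with the same zero set does not. The full loss in the SIREN paper also includes normal-alignment and off-surface terms, which are omitted here. Several choices are assumptions for illustration: the circle "point cloud", the eikonal weight 0.1, and finite-difference gradients (in place of autodiff through a SIREN).

```python
import math
import random


def circle_points(n, radius=0.5):
    # Hypothetical "point cloud": n samples on a circle of the given radius.
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]


def grad(f, x, y, h=1e-5):
    # Central-difference gradient of a scalar field f(x, y).
    return ((f(x + h, y) - f(x - h, y)) / (2 * h),
            (f(x, y + h) - f(x, y - h)) / (2 * h))


def sdf_loss(f, surface, n_random=1000, eikonal_weight=0.1, seed=0):
    # On-surface term: f should be zero on the point cloud.
    surface_term = sum(abs(f(x, y)) for x, y in surface) / len(surface)
    # Eikonal term: a true SDF has |grad f| = 1 almost everywhere.
    rng = random.Random(seed)
    eikonal = 0.0
    for _ in range(n_random):
        x, y = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        gx, gy = grad(f, x, y)
        eikonal += (math.hypot(gx, gy) - 1.0) ** 2
    return surface_term + eikonal_weight * eikonal / n_random


def exact_sdf(x, y):
    # True signed distance to the circle of radius 0.5.
    return math.hypot(x, y) - 0.5


def level_set(x, y):
    # Same zero set, but not a distance function (|grad| = 2r).
    return x * x + y * y - 0.25


surface = circle_points(64)
print("exact SDF loss:", sdf_loss(exact_sdf, surface))
print("quadratic level-set loss:", sdf_loss(level_set, surface))
```

Both functions vanish on the points, so only the eikonal term separates them. This is why such a term is needed when the only supervision is a raw point cloud.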

