ICML 2024: Decomposing Uncertainty for Large Language Models through Input Clarification Ensembling

ICML Poster: Decomposing Uncertainty for Large Language Models through Input Clarification Ensembling

In this paper, we introduce an uncertainty decomposition framework for LLMs, called input clarification ensembling, which can be applied to any pre-trained LLM. Our approach generates a set of clarifications for the input, feeds them into an LLM, and ensembles the corresponding predictions.
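The clarify, predict, and ensemble pipeline described above can be sketched in Python as follows. This is a minimal sketch, not the authors' code. `clarify` and `predict` are hypothetical stand-ins for the clarification generator and the LLM. Each prediction is assumed to be a discrete distribution over answers, and the ensemble is assumed to weight all clarifications equally. The total entropy splits into an expected-entropy term and a mutual-information term; this is the standard identity for the entropy of an averaged distribution. Reading the mutual-information term as the uncertainty caused by input ambiguity is our interpretation.

```python
import math
from typing import Callable, Dict, List

# An answer distribution: answer string -> probability.
Dist = Dict[str, float]


def entropy(dist: Dist) -> float:
    """Shannon entropy in nats; zero-probability answers contribute nothing."""
    return sum(-p * math.log(p) for p in dist.values() if p > 0)


def ensemble(dists: List[Dist]) -> Dist:
    """Uniformly average per-clarification distributions (uniform weights are an assumption)."""
    answers = set().union(*dists)
    return {a: sum(d.get(a, 0.0) for d in dists) / len(dists) for a in answers}


def clarification_ensemble(
    question: str,
    clarify: Callable[[str], List[str]],  # hypothetical clarification generator
    predict: Callable[[str], Dist],       # hypothetical LLM answer distribution
) -> Dict[str, float]:
    clarified = clarify(question)             # 1. generate clarifications of the input
    dists = [predict(c) for c in clarified]   # 2. query the LLM once per clarification
    total = entropy(ensemble(dists))          # 3. entropy of the ensembled prediction
    # Mean entropy of the LLM's answer once a clarification is fixed.
    expected = sum(entropy(d) for d in dists) / len(dists)
    return {
        "total": total,
        "expected_entropy": expected,
        "mutual_information": total - expected,  # disagreement across clarifications
    }


# Toy stand-ins for illustration only: "Georgia" is ambiguous (US state vs. country).
def toy_clarify(q: str) -> List[str]:
    return [q + " (the US state)", q + " (the country)"]


def toy_predict(q: str) -> Dist:
    return {"Atlanta": 1.0} if "US state" in q else {"Tbilisi": 1.0}


result = clarification_ensemble("What is the capital of Georgia?", toy_clarify, toy_predict)
print({k: round(v, 4) for k, v in result.items()})
# → {'total': 0.6931, 'expected_entropy': 0.0, 'mutual_information': 0.6931}
```

In the toy example, each clarified question receives a confident answer. As a result, the entire total entropy (ln 2) falls into the disagreement term, and none of it falls into the expected-entropy term.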

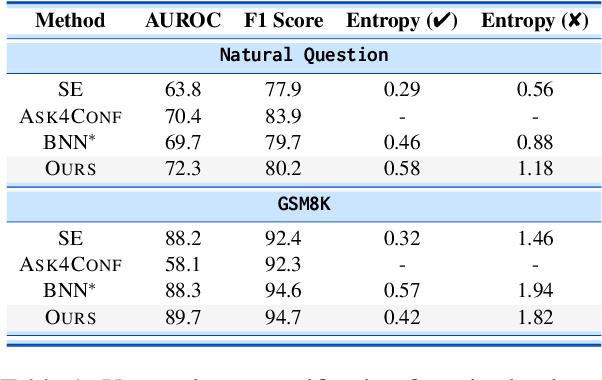

Table 1 from Decomposing Uncertainty for Large Language Models through Input Clarification Ensembling

Because the ensemble is built over clarified inputs fed to a single pre-trained LLM, input clarification ensembling bypasses the need to train new models.
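The contrast with conventional ensembling can be written out. A Bayesian or deep ensemble averages predictions over model parameters $\theta$, and this requires training several models. Averaging over clarifications $C$ of the input $X$ with one fixed LLM yields a decomposition of the same form. The notation below is ours, including $X \oplus C$ for the input paired with a clarification. Both lines apply the standard identity that the entropy of an average equals the average entropy plus a mutual-information term. This is a sketch of the idea, not the paper's exact formulation.

$$
H\big(\mathbb{E}_{\theta}[\,p(Y \mid X, \theta)\,]\big) = \mathbb{E}_{\theta}\big[H(p(Y \mid X, \theta))\big] + I(Y; \theta \mid X)
$$

$$
H\big(\mathbb{E}_{C}[\,p(Y \mid X \oplus C)\,]\big) = \mathbb{E}_{C}\big[H(p(Y \mid X \oplus C))\big] + I(Y; C \mid X)
$$

In the second line, only the input varies, so no additional model has to be trained.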
