Hugging Face documentation-images: Add AnimateDiff FreeInit Examples

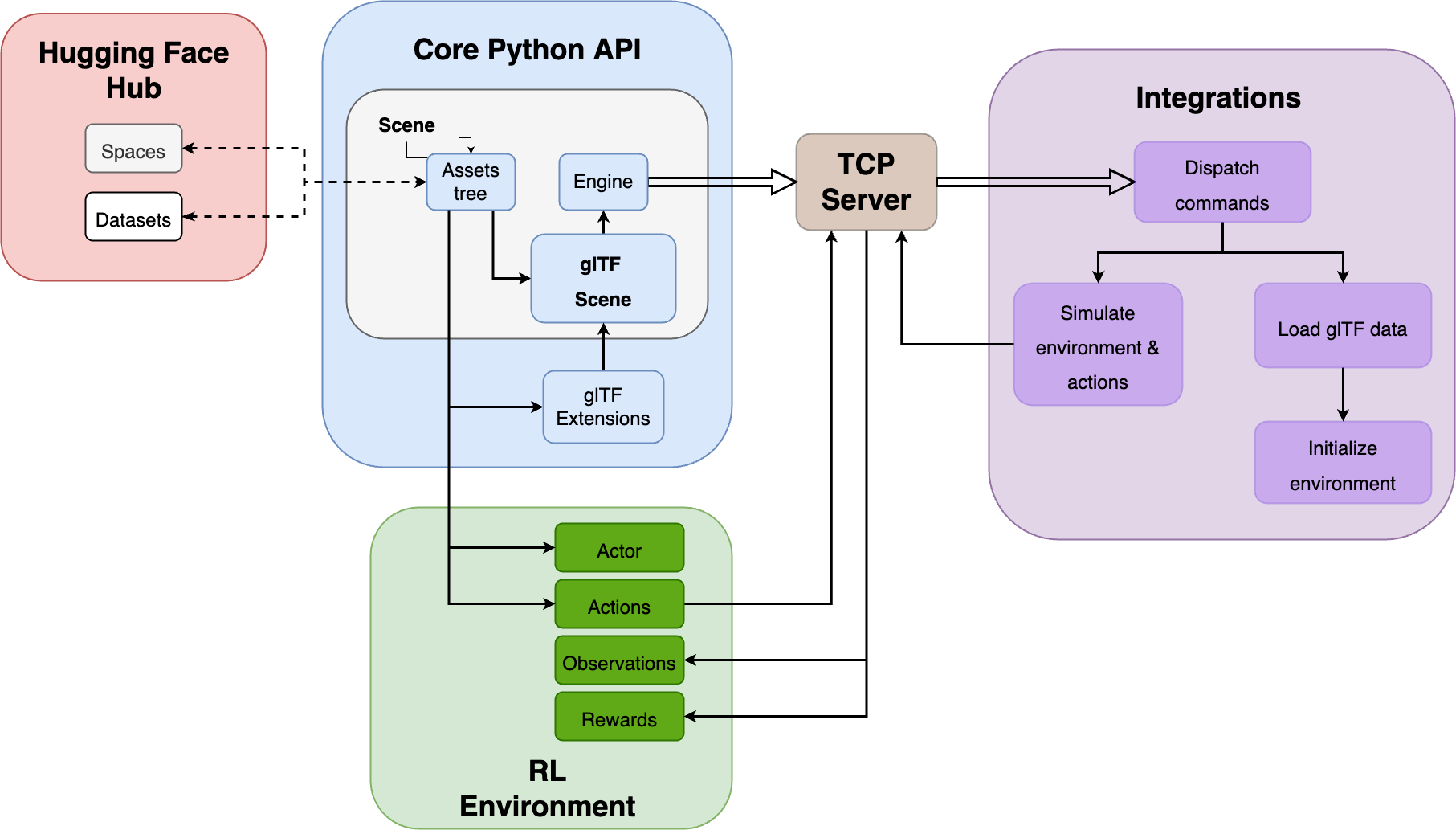

FreeInit examples for AnimateDiff pipelines. From the AnimateDiff report: in this report, we propose a practical framework to animate most of the existing personalized text-to-image models once and for all, saving effort on model-specific tuning.

Try out this demo to compare AnimateDiff with FreeInit against regular AnimateDiff.

Is anyone interested in adding an `AnimateDiffControlNetPipeline`? The expected behavior is to let the user pass a list of conditions (e.g. poses) and use them to condition the generation of each frame.

I am using `AnimateDiffPipeline` to create animations. They look really good, but as soon as I increase the number of frames from 16 to anything higher (like 32), the results are really blurry. Here is a comparison of images created with different frame counts.

🤗 Diffusers: state-of-the-art diffusion models for image and audio generation in PyTorch and FLAX. [refactor] FreeInit for AnimateDiff based pipelines · huggingface/diffusers@8834fe6.
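The comparison between regular AnimateDiff and AnimateDiff with FreeInit can be sketched roughly as below. This is a minimal sketch, not the demo's actual code: the checkpoint IDs, prompt, seed, and scheduler settings are assumptions borrowed from commonly used Diffusers examples, and `load_pipeline` and `render` are hypothetical helper names. It assumes a Diffusers version that provides `enable_free_init` on `AnimateDiffPipeline` and a CUDA device.

```python
# Assumed checkpoints; swap in whichever motion adapter and base model you use.
ADAPTER_ID = "guoyww/animatediff-motion-adapter-v1-5-2"
BASE_MODEL_ID = "SG161222/Realistic_Vision_V5.1_noVAE"

# Shared call arguments so both runs differ only in FreeInit being on or off.
CALL_KWARGS = dict(
    prompt="a panda playing a guitar, on a boat, in the ocean, high quality",
    negative_prompt="bad quality, worse quality",
    num_frames=16,  # the motion module is typically used with 16 frames
    guidance_scale=7.5,
    num_inference_steps=20,
)


def load_pipeline(device="cuda"):
    """Build an AnimateDiffPipeline (imports are lazy: they need torch and diffusers)."""
    import torch
    from diffusers import AnimateDiffPipeline, DDIMScheduler, MotionAdapter

    adapter = MotionAdapter.from_pretrained(ADAPTER_ID, torch_dtype=torch.float16)
    pipe = AnimateDiffPipeline.from_pretrained(
        BASE_MODEL_ID, motion_adapter=adapter, torch_dtype=torch.float16
    )
    pipe.scheduler = DDIMScheduler.from_pretrained(
        BASE_MODEL_ID,
        subfolder="scheduler",
        beta_schedule="linear",
        clip_sample=False,
        timestep_spacing="linspace",
        steps_offset=1,
    )
    pipe.enable_vae_slicing()  # reduces peak memory when decoding frames
    return pipe.to(device)


def render(pipe, path, use_free_init, seed=666):
    """Generate one animation and save it as a GIF, optionally with FreeInit."""
    import torch
    from diffusers.utils import export_to_gif

    if use_free_init:
        pipe.enable_free_init(method="butterworth", use_fast_sampling=True)
    try:
        # Same seed for both runs so the outputs are comparable.
        generator = torch.Generator("cpu").manual_seed(seed)
        frames = pipe(generator=generator, **CALL_KWARGS).frames[0]
    finally:
        if use_free_init:
            pipe.disable_free_init()
    export_to_gif(frames, path)
    return path
```

A possible usage is `pipe = load_pipeline()`, then `render(pipe, "no-freeinit.gif", use_free_init=False)` and `render(pipe, "freeinit.gif", use_free_init=True)`; the output file names are placeholders. Keeping the seed and call arguments identical means any visible difference should come from FreeInit alone.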

Git LFS details: SHA256: c074896ea5ab098699e46911b07e9a9da86c4811231154412e79268bf61b9468; pointer size: 132 bytes; size of remote file: 1.84 MB (diffusers animatediff no freeinit.gif).

The `DiffusionPipeline` class is a simple and generic way to load the latest trending diffusion model from the Hub. It uses the `from_pretrained()` method to automatically detect the correct pipeline class for a task from the checkpoint, downloads and caches all the required configuration and weight files, and returns a pipeline ready for inference.

Seems pretty simple: we just need to add a `prepare_latents` that initializes the latents to the encoded image. A workaround right now is to encode the images in advance and pass them in as the `latents` param.
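The encode-in-advance workaround could look something like the sketch below. It is an illustration under assumptions, not the pipeline's own `prepare_latents`: `latent_shape` and `encode_frames` are hypothetical helpers, the input is assumed to be a float tensor of frames scaled to [-1, 1], and the (batch, channels, frames, height, width) layout with a VAE downscale factor of 8 is assumed from Stable Diffusion 1.5 based AnimateDiff pipelines. The pipeline documents `latents` as pre-generated noisy latents, so whether clean image latents give useful results without added noise is not covered here.

```python
def latent_shape(num_frames, height, width, batch_size=1, channels=4, vae_scale_factor=8):
    """Expected shape of the `latents` tensor for a video pipeline (assumed layout)."""
    return (
        batch_size,
        channels,
        num_frames,
        height // vae_scale_factor,
        width // vae_scale_factor,
    )


def encode_frames(pipe, frames, generator=None):
    """Encode frames of shape (num_frames, 3, H, W) in [-1, 1] into video latents.

    Returns a tensor of shape (1, C, num_frames, H // 8, W // 8) for an SD 1.5 style VAE.
    """
    import torch  # lazy import: only needed when actually encoding

    frames = frames.to(device=pipe.vae.device, dtype=pipe.vae.dtype)
    with torch.no_grad():
        posterior = pipe.vae.encode(frames).latent_dist
        latents = posterior.sample(generator=generator)
    # Scale into the latent space the UNet was trained on.
    latents = latents * pipe.vae.config.scaling_factor
    # (num_frames, C, h, w) -> (1, C, num_frames, h, w)
    return latents.permute(1, 0, 2, 3).unsqueeze(0)
```

The result would then be passed as `pipe(..., latents=encode_frames(pipe, frames))`, with `num_frames`, `height`, and `width` chosen so that `latent_shape(...)` matches the tensor's shape.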

FreeInit: Bridging Initialization Gap in Video Diffusion Models

