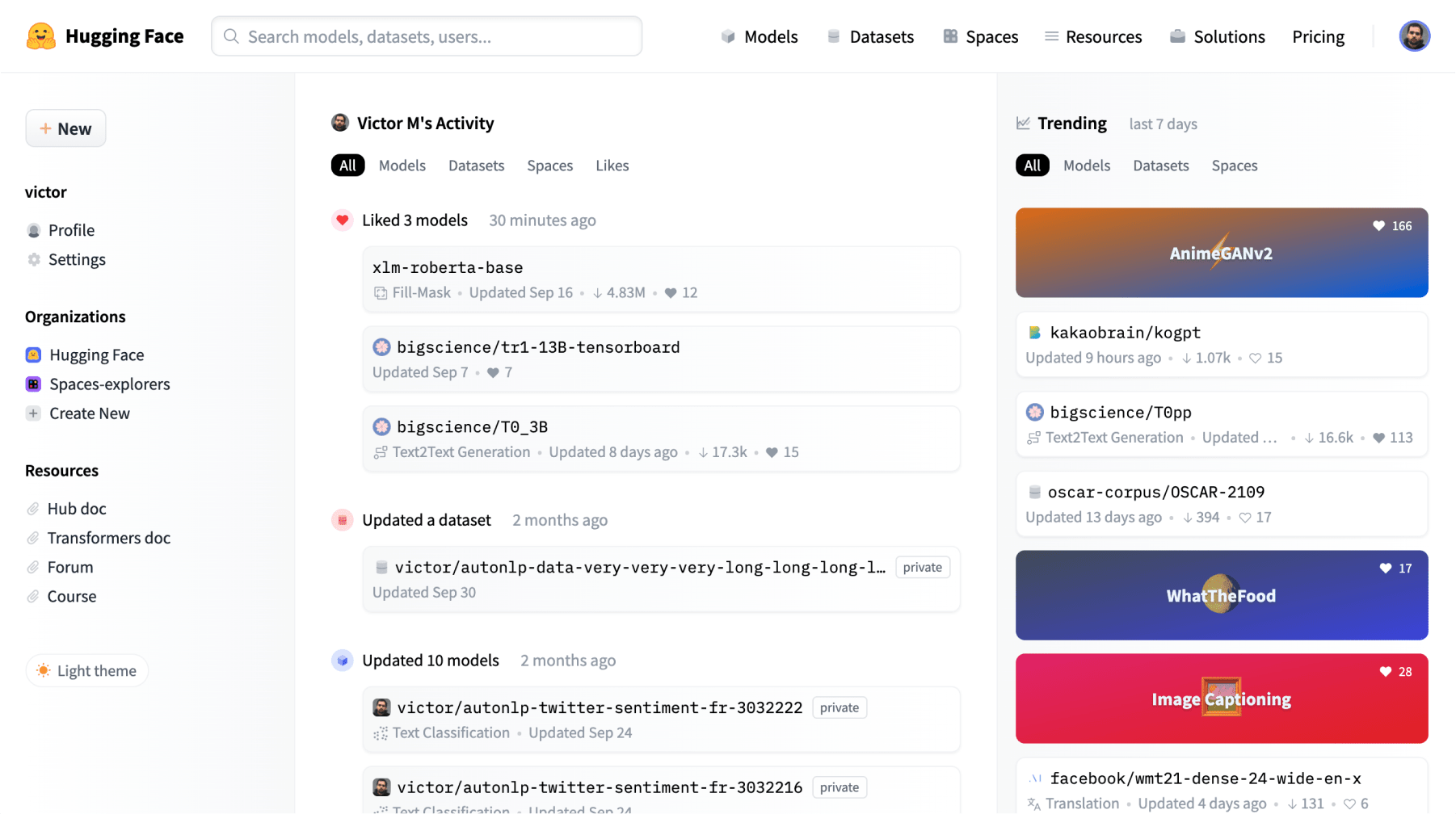

Hugging Face Explained: How to Run AI Models on Your Machine Locally in Minutes

Hugging Face: The AI Community Building the Future

The video breaks down how OpenAI's surprising release of gpt-oss, a state-of-the-art open-weight AI model, changes the AI landscape. It explains how anyone can download, test, and run it. By following the steps outlined in this guide, you can efficiently run Hugging Face models locally, whether for NLP, computer vision, or fine-tuning custom models.

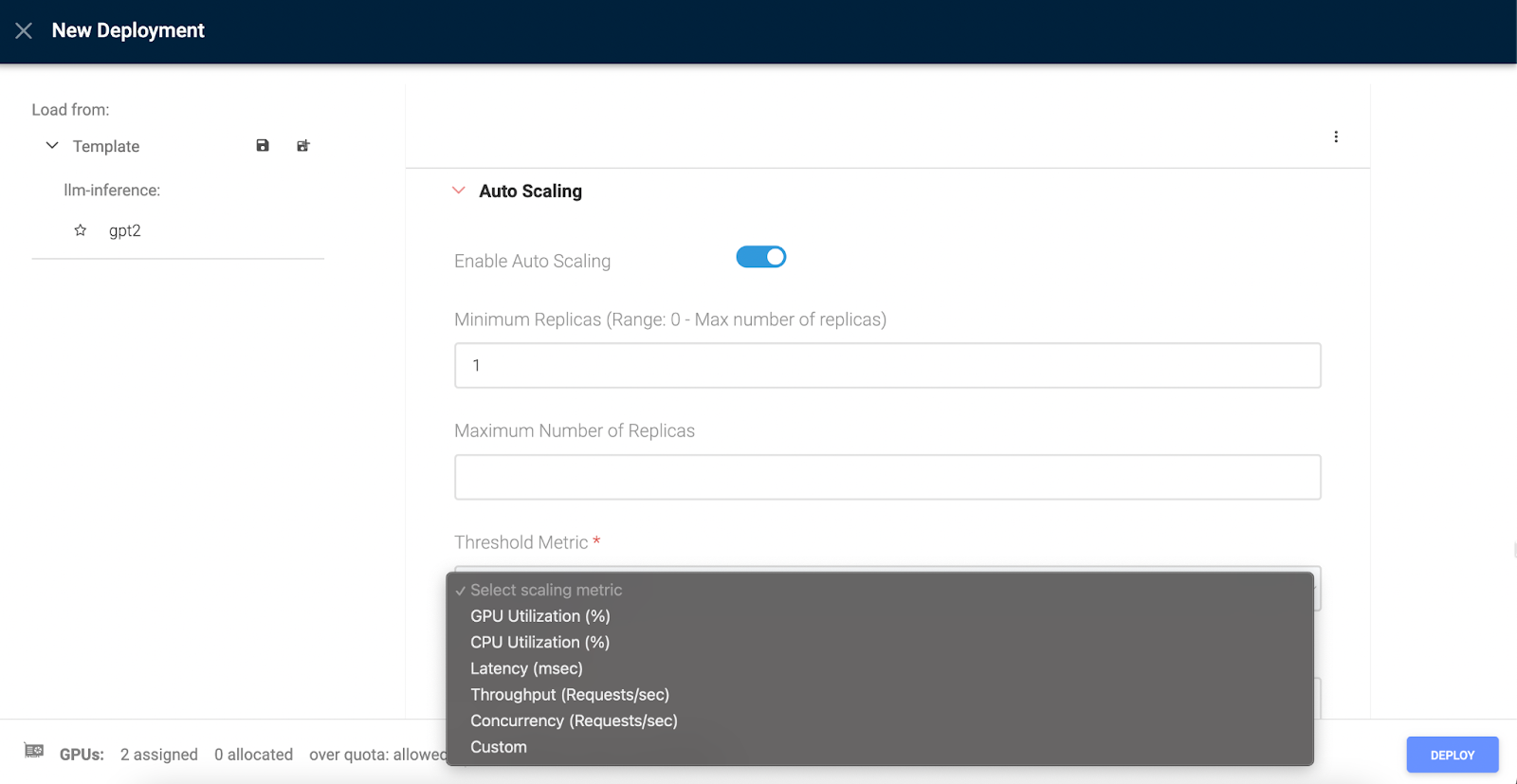

How to Deploy Hugging Face Models with Run AI

There are two ways to use an AI model from an application such as a game. I can run the model on a remote server and send API calls from the game, optionally using an API service to help deploy the model. Or I can run the model locally, on the player's machine. Both are valid solutions, but each has advantages and disadvantages.

In this guide, I'll walk you through the entire process, from requesting access to loading the model locally and generating output, even without an internet connection once the initial setup is done.

To run Hugging Face models locally on your machine, you first need to install the necessary packages: the Transformers library and the Hugging Face CLI. Transformers can be installed with pip, and a virtual environment is recommended to keep your dependencies organized.

Instead of relying on external APIs, you can run models directly on your machine. Platforms like Docker and Hugging Face make cutting-edge AI models instantly accessible without building from scratch, and running them locally means lower latency, better privacy, and faster iteration. If you take the Docker route, start by opening Docker Desktop.
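The download-then-run-offline flow described above can be sketched roughly as follows. This is a minimal sketch, not the guide's own code: it assumes `huggingface_hub`, `transformers`, and `torch` are installed with pip, and the `models` folder and the helper names `local_dir_for`, `download_once`, and `generate_offline` are hypothetical. For gated models you would also need to request access on the model page and log in with the Hugging Face CLI before downloading.

```python
from pathlib import Path

MODELS_ROOT = Path("models")  # hypothetical folder for downloaded model files


def local_dir_for(model_id: str, root: Path = MODELS_ROOT) -> Path:
    """Map a Hub id such as 'org/name' to a flat local folder."""
    return root / model_id.replace("/", "--")


def download_once(model_id: str) -> Path:
    """Run while online. Needs `pip install huggingface_hub`."""
    from huggingface_hub import snapshot_download  # imported lazily

    target = local_dir_for(model_id)
    # Copies every file of the model repository into the target folder.
    snapshot_download(repo_id=model_id, local_dir=str(target))
    return target


def generate_offline(model_id: str, prompt: str, max_new_tokens: int = 40) -> str:
    """Load only from disk and generate text. Needs `pip install transformers torch`."""
    from transformers import AutoModelForCausalLM, AutoTokenizer  # imported lazily

    source = str(local_dir_for(model_id))
    # local_files_only=True makes loading fail instead of reaching for the network.
    tokenizer = AutoTokenizer.from_pretrained(source, local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(source, local_files_only=True)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

With a small public text-generation model (for example `distilgpt2`, chosen here only for illustration), you would call `download_once("distilgpt2")` once while connected, then `generate_offline("distilgpt2", "Running models locally means")` at any later time, including offline.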

Hugging Face lets you summarize text in just a few lines of code, starting from an input such as text = "artificial intelligence is revolutionizing multiple industries ".

Ever wanted to run cutting-edge AI models on your own machine? Welcome to the world of Hugging Face, where AI magic happens right on your PC. Let's dive into the best models and how to get them running locally. Why run models locally? Local deployment means lower latency, better privacy, and faster iteration. First up: language models that aim to reason in a human-like way.

The Transformers library by Hugging Face provides a flexible and powerful framework for running large language models both locally and in production environments. Hugging Face models and apps can also run on local GPUs, which improves performance for resource-intensive tasks such as deep learning inference and training.
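A summarization call that uses a local GPU when one is present might look like the sketch below. It is an illustration under stated assumptions rather than the original example, which is truncated in the text: it assumes `transformers` and `torch` are installed, that the installed Transformers release still provides the `summarization` pipeline task, and that the model id `sshleifer/distilbart-cnn-12-6` is an acceptable choice. The helper names `pick_device` and `summarize` are hypothetical.

```python
def pick_device() -> int:
    """Return 0 for the first CUDA GPU, or -1 for CPU if none is usable."""
    try:
        import torch  # optional: without it we simply fall back to CPU
    except ImportError:
        return -1
    return 0 if torch.cuda.is_available() else -1


def summarize(text: str, model_id: str = "sshleifer/distilbart-cnn-12-6") -> str:
    """Summarize text with a Transformers pipeline (model id is an assumption)."""
    from transformers import pipeline  # imported lazily

    # device=-1 runs on CPU; device=0 runs on the first CUDA GPU.
    summarizer = pipeline("summarization", model=model_id, device=pick_device())
    result = summarizer(text, max_length=60, min_length=10, do_sample=False)
    return result[0]["summary_text"]
```

Calling `summarize(text)` with a few paragraphs of input returns a single summary string. The first call downloads the model, so it needs a connection unless the files are already cached.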



