How to Run LLMs Locally (Beginner Friendly)

Run LLMs Locally: 7 Simple Methods (DataCamp)

Here you will quickly learn about local LLM hardware, software, and models to try out first. There are many reasons to get into local large language models. One is wanting to own a fully private, personal AI assistant. Another is the need for a capable roleplay companion or story-writing helper. Whatever your goal is, this guide will walk you through the basics. It is a beginner-friendly guide for anyone who wants to try running an LLM on their own PC or laptop.

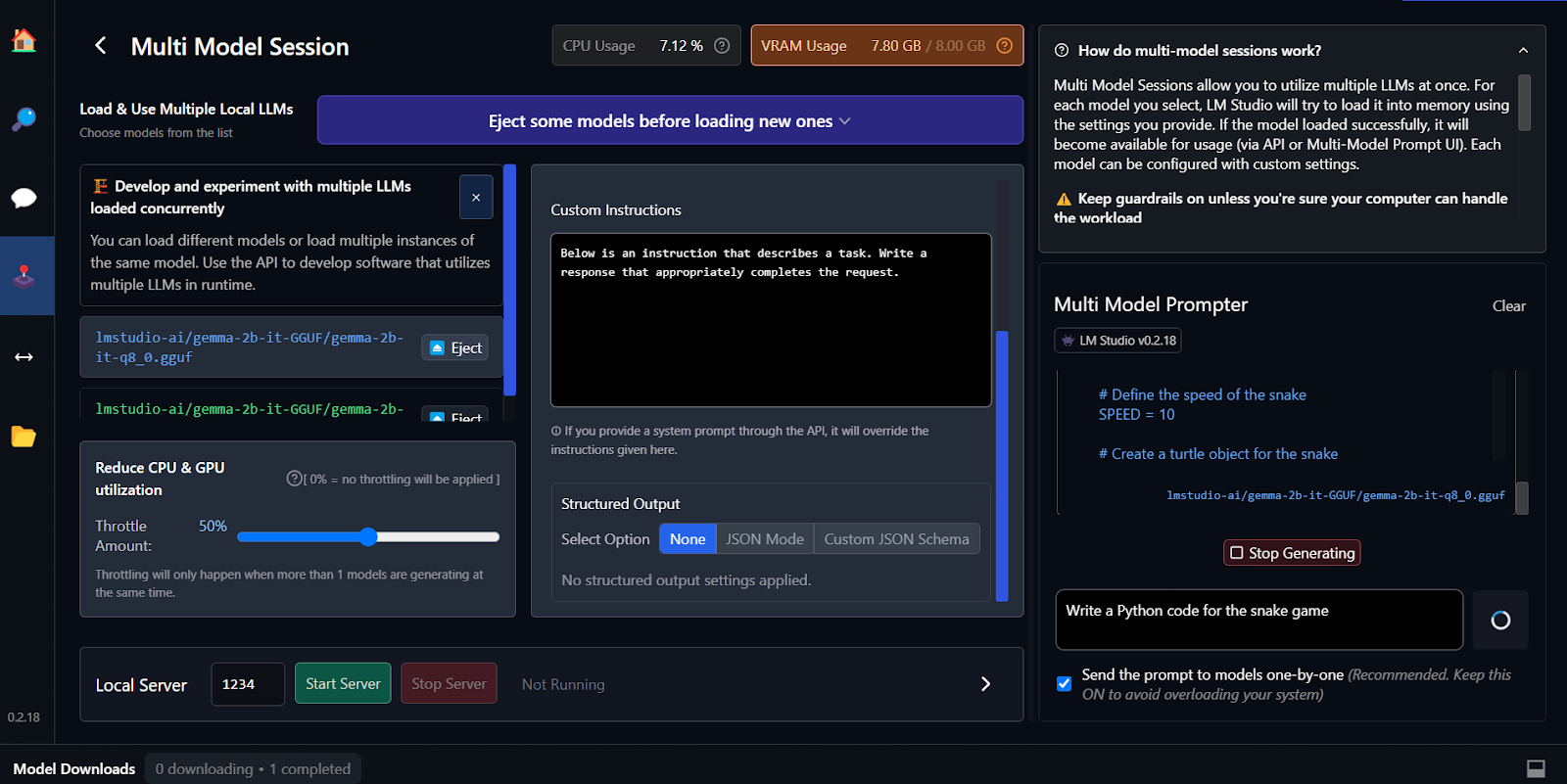

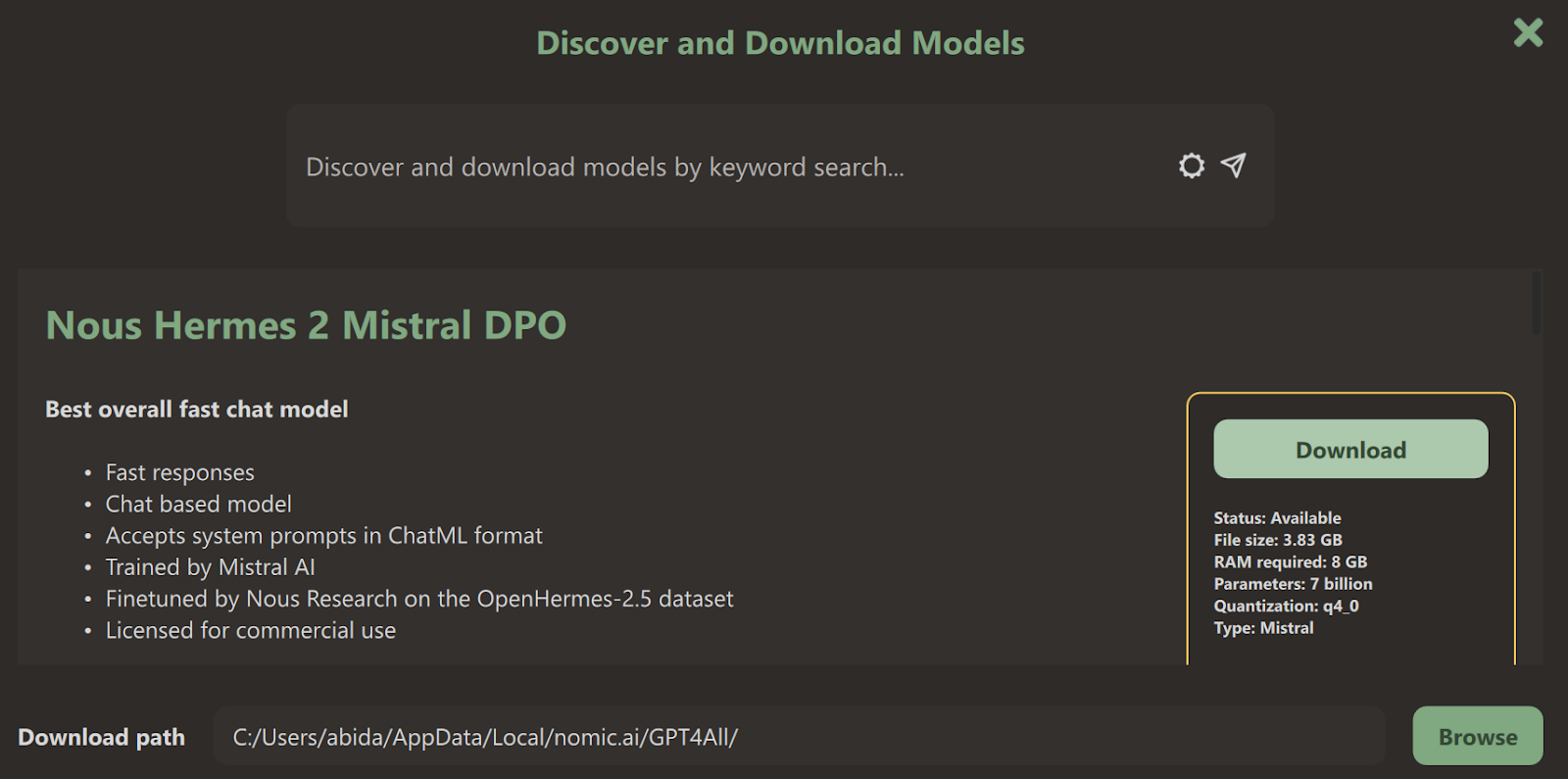

In this guide, you will learn the step-by-step process to run open-weight LLMs, such as DeepSeek and other ChatGPT-style models, locally. It covers three proven methods to install LLMs on Mac, Windows, or Linux, so choose the method that suits your workflow and hardware.

Getting started: a novice-friendly guide to running local AI with Ollama and AnythingLLM. This guide will walk you through setting up a powerful, private, and user-friendly local AI environment on your computer.

In this guide, we'll walk you through what a local LLM is, what you need to run one, and how to get started, plus some tools and model recommendations to make the journey easier.

Just starting with local LLMs in your coding workflow? You're in the right place. I remember how overwhelming it felt when I first tried running models locally: the setup seemed complex and the results unpredictable. This guide will walk you through everything step by step, just like I wish someone had done for me.
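For readers who want to use a local model from a coding workflow, here is a minimal sketch of sending a prompt to Ollama from Python. It assumes Ollama is installed and running on its default local address, and that a model has already been downloaded; the model name `llama3.2` and the helper names `build_request` and `ask` are illustrative choices, not something this guide prescribes.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.2"):
    """Build the HTTP request for a single, non-streaming completion.

    The model name is an assumption; use whichever model you have pulled.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3.2"):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read())
    return body["response"]
```

With the Ollama server running, calling `ask("Explain what a local LLM is in one sentence.")` should return the model's reply as a string. The request only goes to `localhost`, so the prompt stays on your machine.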

How do you run an LLM locally without coding? What is a local LLM? A local LLM is a large language model that runs right on your own device: your laptop, desktop, or even a home server. No cloud. No internet connection needed once the model is downloaded. No outside company involved. It's kind of like downloading Spotify music to your phone.

Learn how to set up, run, and fine-tune a self-hosted LLM: cut API costs, keep data private, and optimize models locally without enterprise GPUs.

Discover how to run powerful large language models (LLMs) locally on your device for enhanced privacy, unlimited access, and total control.

By running an LLM locally, you have the freedom to experiment with, customize, and fine-tune the model for your specific needs without external dependencies. You can choose from a wide range of open-source models, tailor them to your tasks, and try different configurations to optimize performance.
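When running without enterprise GPUs, a useful first check is whether a model's weights will fit in your memory at all. The sketch below is a rough back-of-the-envelope estimate, assuming memory is dominated by the weights (parameter count times bits per weight); real usage is higher because of the context cache and runtime overhead. The function name and the 7-billion-parameter example are illustrative assumptions.

```python
def estimate_weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory needed for the model weights alone, in gigabytes.

    This ignores the context cache and runtime overhead, so treat the
    result as a lower bound rather than an exact requirement.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare a hypothetical 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    size = estimate_weight_memory_gb(7, bits)
    print(f"7B model at {bits}-bit: ~{size:.1f} GB")
```

The comparison shows why lower-precision (quantized) versions of a model are a common starting point on laptops: halving the bits per weight halves the memory the weights need.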
