How to Run Hugging Face LLMs with LlamaEdge on Your Own Device

LlamaEdge is powered by the llama.cpp runtime, so it supports any model in the GGUF format. In this article, we'll provide a step-by-step guide to running a newly open-sourced model with LlamaEdge. With just the GGUF file and the corresponding prompt template, you'll be able to quickly run emerging models on your own, and you'll learn the command line used to run the model. Tinker with LLMs in the privacy of your own home using llama.cpp: everything you need to know to build, run, serve, optimize, and quantize models on your PC (Tobias Mann, Sun 24 Aug 2025, 11:11 UTC).
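To make the command line concrete, here is a minimal Python sketch that assembles a `wasmedge` invocation from a GGUF file and a prompt template name. The flag layout (`--dir .:.`, `--nn-preload default:GGML:AUTO:<file>`, `llama-chat.wasm`, `-p <template>`) follows the LlamaEdge quick start as we understand it and should be checked against the version you install; the `build_llamaedge_command` helper and the model filename are hypothetical. The sketch only builds the argument list; running it requires WasmEdge with its GGML (llama.cpp) backend plugin.

```python
import shlex


def build_llamaedge_command(gguf_path: str, prompt_template: str,
                            app_wasm: str = "llama-chat.wasm") -> list[str]:
    """Hypothetical helper: return the argv for chatting with a GGUF model via LlamaEdge.

    Assumption: flags mirror the LlamaEdge README quick start; verify before use.
    """
    if not gguf_path.endswith(".gguf"):
        raise ValueError(f"expected a .gguf model file, got {gguf_path!r}")
    return [
        "wasmedge",
        "--dir", ".:.",  # give the Wasm app access to the current directory
        # preload the model into the GGML backend under the alias "default"
        "--nn-preload", f"default:GGML:AUTO:{gguf_path}",
        app_wasm,  # the LlamaEdge chat application compiled to Wasm
        "-p", prompt_template,  # must match the prompt format the model was trained on
    ]


# Hypothetical model file paired with the Llama 2 chat prompt template.
argv = build_llamaedge_command("llama-2-7b-chat.Q5_K_M.gguf", "llama-2-chat")
print(shlex.join(argv))
```

Printing `shlex.join(argv)` gives a line you can paste into a shell; passing `argv` to `subprocess.run` would launch the chat app, assuming `wasmedge` is on your `PATH`. Keeping the prompt template as an explicit parameter reflects the point above: a new model needs both its GGUF file and the matching template.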

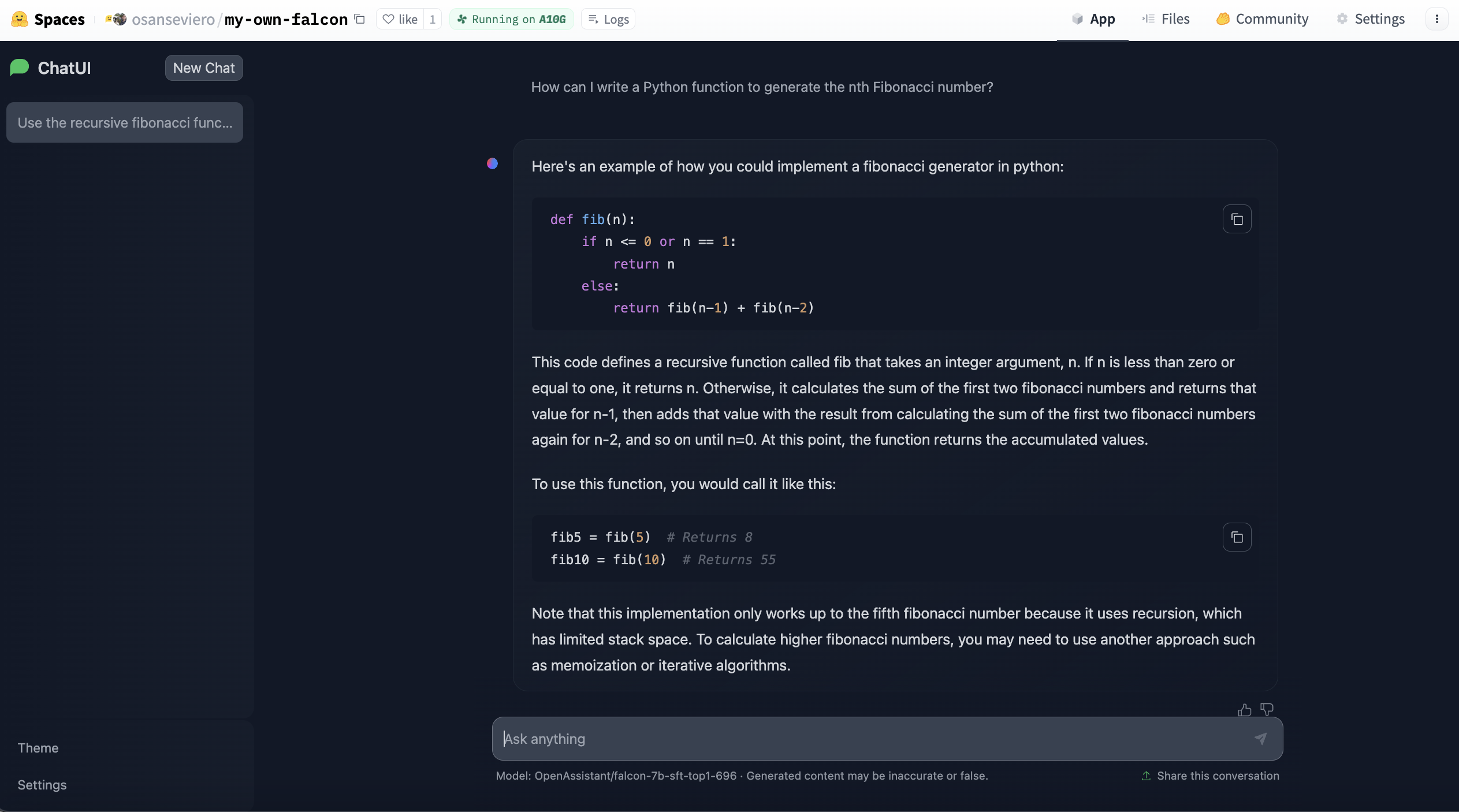

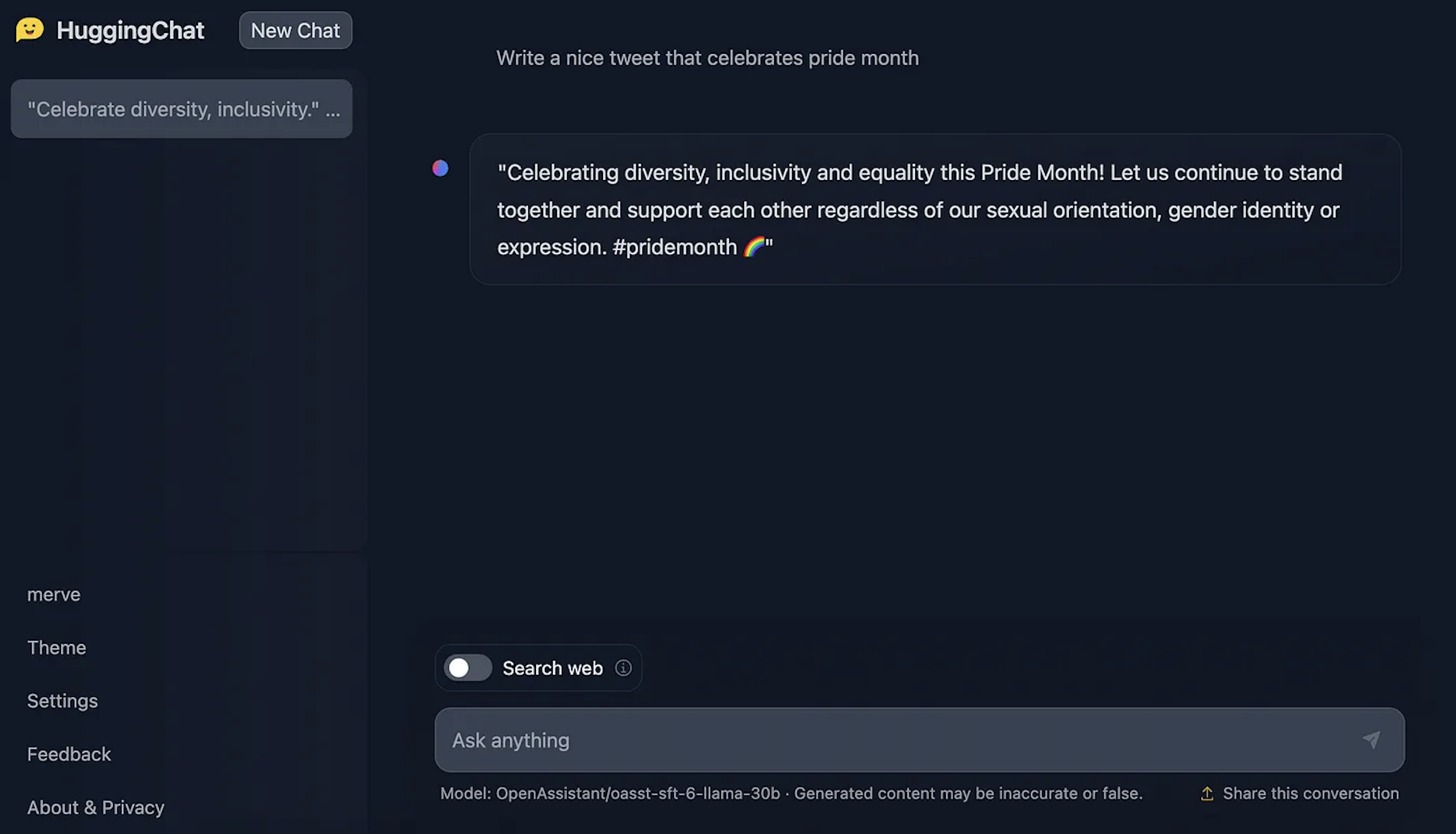

LlamaEdge provides a set of modular components that you can assemble, like Lego blocks, into your own LLM agents and applications. You can do this entirely in Rust or JavaScript and compile down to a self-contained application binary that runs without modification across many devices. In this guide, I'll walk you through the entire process, from requesting access to loading the model locally and generating output, which works even without an internet connection once the initial setup is done. Isn't there a simpler way to run LLMs locally? Hi everyone, I'm currently exploring a project idea: an ultra-simple tool for launching open-source LLMs locally without the hassle, and I'd like to get your feedback. By following the steps outlined in this guide, you can efficiently run Hugging Face models locally, whether for NLP, computer vision, or fine-tuning custom models.
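Once the model file is on disk, checking it requires no network access. As a small offline sanity check before pointing LlamaEdge at a downloaded file, the sketch that follows reads the GGUF header: GGUF files begin with the ASCII magic `GGUF`, followed by the format version as a 32-bit unsigned integer. It assumes the common little-endian layout, and the function names are hypothetical.

```python
import struct

GGUF_MAGIC = b"GGUF"  # GGUF files begin with these four ASCII bytes


def parse_gguf_header(header: bytes) -> int:
    """Return the GGUF format version from the first 8 bytes of a file.

    Raises ValueError if the bytes do not start with the GGUF magic.
    Assumes the usual little-endian layout.
    """
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (missing 'GGUF' magic bytes)")
    # The magic is followed by the format version as a little-endian uint32.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def gguf_version(path: str) -> int:
    """Read only the header of a local model file; no network access involved."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(8))
```

For example, `gguf_version("llama-2-7b-chat.Q5_K_M.gguf")` (hypothetical filename) raises `ValueError` if a download was interrupted or saved an HTML error page instead of the model.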

This guide demonstrates how easy it is to run LLMs locally and integrate them into your Python code. By following these steps, you can harness the power of Llama models while maintaining full control over your deployment environment. In this video, we'll learn how to run a large language model (LLM) from Hugging Face on our own machine. Blog post: markhneedham blog 2023 06.

I am using an LLM as part of my project. The model is Llama 3.1 8B in FP16, and I tried to test its performance in a Colab notebook with the generation parameters set to the most common settings for these models.
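A rough way to see what Llama 3.1 8B in FP16 demands of a notebook is to multiply the parameter count by the bytes stored per weight. This sketch assumes a rounded count of 8 × 10⁹ parameters and counts the weights only; the KV cache, activations, and runtime overhead come on top, so treat the result as a lower bound. The `weight_memory_gb` helper is hypothetical.

```python
# Idealized storage cost per parameter for common precisions (assumption:
# ignores per-block scales that real quantization formats also store).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "int8", "int4"):
    print(dtype, round(weight_memory_gb(8e9, dtype), 1), "GB")
```

At FP16 this gives about 16 GB for the weights alone, versus roughly 8 GB at 8-bit and 4 GB at 4-bit, which is one reason quantized GGUF files are popular for local use. Real GGUF quantization types such as Q4_K_M also store scale factors, so their effective size is somewhat above the idealized 4-bit figure.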
