How to Install the Enterprise-Grade AI Playground from Hugging Face: Text Generation Inference (TGI)

Want an AI playground that is open source and backed by a top-tier company? Hugging Face's Text Generation Inference (TGI) platform fits the bill and lets you host your own AI playground. TGI is a toolkit for deploying and serving large language models (LLMs). It enables high-performance text generation for the most popular open-source LLMs, including Llama, Falcon, StarCoder, BLOOM, GPT-NeoX, and T5.

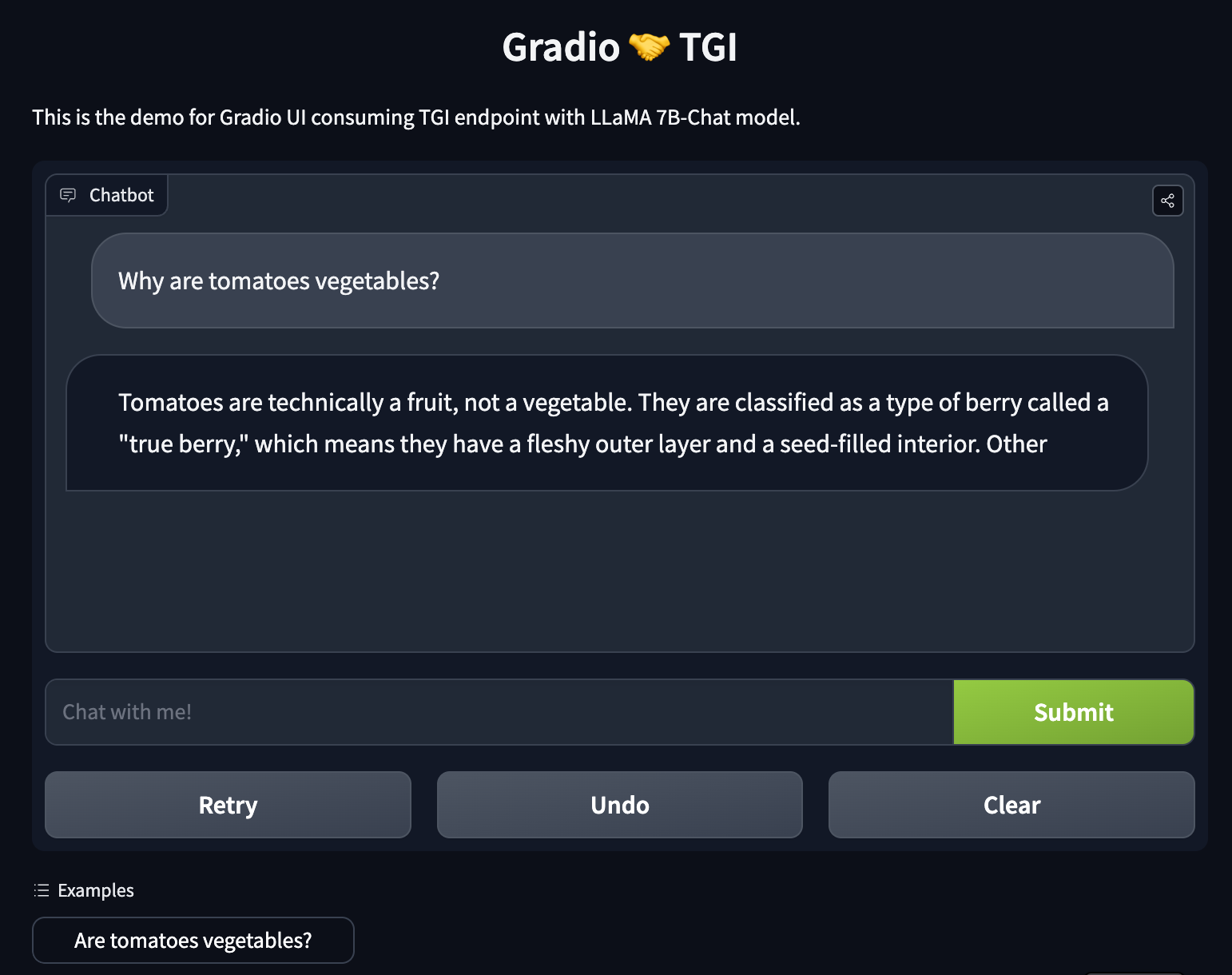

Once TGI is running, you can send requests to the generate endpoint or to the Messages API, which is compatible with the OpenAI Chat Completion API. To learn more about querying the endpoints, see the Consuming TGI section of the TGI documentation, which shows examples with utility libraries and UIs.

This guide covers how to install and use the AI playground for text generation with Hugging Face's Text Generation Inference (TGI). The accompanying how-to video explains what Text Generation Inference is used for and walks through a detailed step-by-step installation. It also shows how to run TGI 100% privately, with scripts you can use to do so safely and securely.

One documented setup installs TGI on virtual servers with V100 GPUs on IBM Cloud. In the simplest case, you can use containers that host specific models and provide REST endpoints. Previous posts described how to provision V100 GPUs in IBM Cloud and how to fine-tune your own models. The virtual server used here runs Ubuntu.
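The container approach can be sketched as follows. This is a minimal sketch, not the exact procedure from the posts referenced above: it assumes Docker with NVIDIA GPU support is already installed on the server, and the image tag, model ID, host port, and data directory are illustrative placeholders that do not come from the original text.

```python
import os
import shlex

# Assumption: the public TGI container image; pin a specific tag in practice.
TGI_IMAGE = "ghcr.io/huggingface/text-generation-inference:latest"


def build_tgi_docker_command(model_id, host_port=8080, data_dir="data", shm_size="1g"):
    """Return the argv list for a `docker run` that serves `model_id` with TGI.

    All defaults are illustrative assumptions, not values from the article.
    """
    return [
        "docker", "run",
        "--gpus", "all",                            # expose the host GPUs to the container
        "--shm-size", shm_size,                     # shared memory for multi-GPU communication
        "-p", f"{host_port}:80",                    # the container serves HTTP on port 80
        "-v", f"{os.path.abspath(data_dir)}:/data", # cache downloaded weights on the host
        TGI_IMAGE,
        "--model-id", model_id,
    ]


if __name__ == "__main__":
    # Placeholder model ID; substitute the model you want to host.
    command = build_tgi_docker_command("HuggingFaceH4/zephyr-7b-beta")
    print(shlex.join(command))
```

The function only assembles the command; printing it lets you review the flags before running it yourself, for example through `subprocess.run(command, check=True)`.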

Beyond NVIDIA V100 GPUs, TGI can also be configured and run on AMD Instinct™ GPUs, using the ROCm software stack for accelerated performance. That workflow consists of setting up your environment, containerizing your workflow, and testing your inference server by sending customized queries.

Other step-by-step tutorials show how to deploy Hugging Face TGI on Azure Container Instances and how to use this open-source solution to host large language models safely and securely.
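Testing a running server with customized queries can be sketched as follows. This is a minimal sketch using only the Python standard library. It assumes a TGI server is reachable at `http://localhost:8080` (a placeholder address), and the helper names `build_generate_request`, `build_chat_request`, and `send` are hypothetical, introduced here for illustration.

```python
import json
import urllib.request

# Assumption: address of a running TGI server; adjust host and port as needed.
BASE_URL = "http://localhost:8080"


def _post_json(url, payload):
    """Build a POST request carrying `payload` as a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_generate_request(prompt, max_new_tokens=20, base_url=BASE_URL):
    """Request for the generate endpoint: a raw prompt plus generation parameters."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return _post_json(f"{base_url}/generate", payload)


def build_chat_request(user_message, max_tokens=20, base_url=BASE_URL):
    """Request for the OpenAI-compatible Messages API (chat completions)."""
    payload = {
        "model": "tgi",  # placeholder name; the server answers with the model it hosts
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return _post_json(f"{base_url}/v1/chat/completions", payload)


def send(request, timeout=60):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage against a running server:
#   print(send(build_generate_request("What is deep learning?"))["generated_text"])
#   reply = send(build_chat_request("What is deep learning?"))
#   print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect or log the exact payload before it reaches the server.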

