How to Install an LLM on macOS (and Why You Should) | ZDNet

If you like the idea of AI but don't want to share your content or information with a third party, you can always install an LLM on your Mac. The Ollama team just released a native GUI for Mac and Windows, making it easy to run AI on your own computer and pull whichever LLM you prefer.
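
Which LLM you can comfortably pull depends mostly on how much memory your Mac has. A common rule of thumb, which is an approximation and not an Ollama formula, is that a model's weights take about (parameter count × bits per weight ÷ 8) bytes. The sketch below applies it to an 8-billion and a 70-billion-parameter model at 4-bit quantization, a common choice for locally run models. The helper names and the 75% `headroom` budget are hypothetical illustrations, and real usage runs higher because of the context cache and runtime overhead.

```python
# Rough rule of thumb (an assumption, not an Ollama formula): model weights need
# about (parameter count x bits per weight / 8) bytes. Actual memory use is
# higher because of the context (KV) cache and runtime overhead.


def weights_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_memory(params_billions: float, bits_per_weight: float,
                   ram_gib: float, headroom: float = 0.75) -> bool:
    """True if the weights fit in a fraction `headroom` of total RAM.

    `headroom` is a hypothetical safety margin: macOS and your other apps
    need memory too, so only part of the total is budgeted for the model.
    """
    return weights_gib(params_billions, bits_per_weight) <= ram_gib * headroom


if __name__ == "__main__":
    for params in (8, 70):
        size = weights_gib(params, 4)  # 4-bit quantization
        ok = fits_in_memory(params, 4, ram_gib=16)
        print(f"{params}B @ 4-bit: ~{size:.1f} GiB of weights, fits in 16 GiB: {ok}")
```

With these example values, the 8B model comes out at roughly 3.7 GiB and fits a 16 GiB Mac's budget, while the 70B model needs roughly 32.6 GiB for its weights alone.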

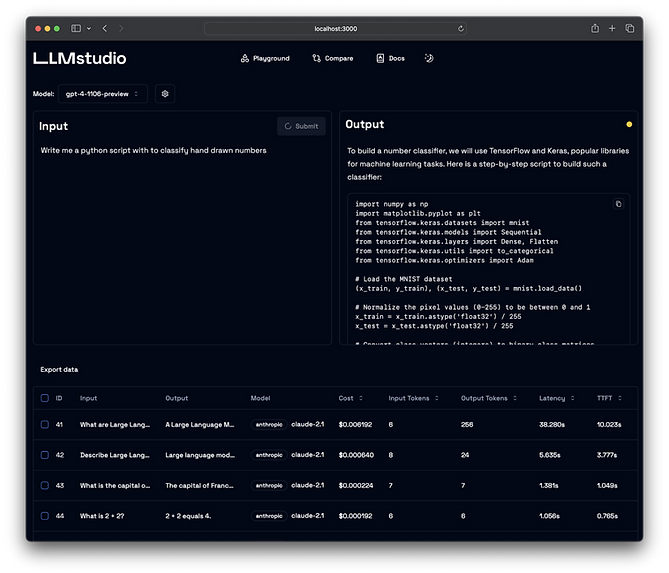

macOS does not lack AI apps and services. My go-to on my MacBook Pro is a one-two combination of Ollama and Msty: because the LLM is installed locally, I do not have to worry that my …

From automating workflows to enhancing creativity, installing an LLM on your Mac puts a cutting-edge tool at your disposal. While some believe it's a complicated endeavor, the process is surprisingly straightforward. Here's everything you need to know, in simple, actionable steps.

Whether you're a seasoned LLM expert or just a little curious about LLMs and generative AI, this is a great product to try out. Heck, it's free, so why not?

Local AI models now run directly on Mac devices, letting users process sensitive data without relying on cloud services. Ollama brings local large language model (LLM) capabilities to macOS users, allowing them to use AI while keeping their data private.
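
Because everything runs locally, you can also script a model from your own code. While Ollama is running, it serves an HTTP API on your machine, by default at `http://localhost:11434`. The minimal sketch below uses only Python's standard library to send a prompt to its `/api/generate` endpoint with streaming turned off. The model tag `llama3.1` is just an example and assumes you have already pulled that model; the helper names (`build_request`, `parse_reply`, `ask`) are hypothetical.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default, so the prompt is
# sent to this machine only, not to a cloud service.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(raw: bytes) -> str:
    """Extract the generated text from a non-streaming reply."""
    return json.loads(raw)["response"]


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a prompt to the local model and return its answer."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return parse_reply(resp.read())


if __name__ == "__main__":
    try:
        # "llama3.1" is an example tag; pull it first with Ollama.
        print(ask("llama3.1", "In one sentence, why do local LLMs help privacy?"))
    except OSError as err:  # urllib.error.URLError is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

Since the request goes to `localhost`, the prompt and the reply stay on your Mac. If Ollama isn't running or the model hasn't been pulled, the script prints the error instead of an answer.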

Locally installed LLMs are particularly useful for those who want to explore the capabilities of AI without compromising their personal data or information. By installing an LLM on your Apple device, you get a high degree of autonomy and control over how and when you use these models.

Download the GUI app from Ollama's download page and install the CLI tool from Homebrew, then find a model you want to run. Llama 3.1 is considered the best open-source model currently available, but the 8B version struggles with tool usage, and the 70B version is too big for a lot of computers.

I will say this: what you will end up with is an AI that you access via the command line. There is a GUI that can be installed, but it's web-based, and most of the other GUIs are either quite challenging to install or shouldn't be trusted.

The article explains how to install Ollama, a tool for running large language models (LLMs) locally on macOS, so you can use AI without sharing your data with third parties. It provides a step-by-step guide to the installation, which requires macOS 11 or later and is done through a terminal command.
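
Before you run that terminal command, a small script can confirm the basics. The sketch below is a hypothetical helper, not part of Ollama: it compares the macOS version reported by Python's `platform.mac_ver()` with the macOS 11 minimum and looks for the `ollama` command on your `PATH`. The `brew install ollama`, `ollama pull`, and `ollama run` commands it suggests are standard, but `llama3.1` is only an example model tag.

```python
import platform
import shutil


def macos_major(version: str) -> int:
    """Return the major number of a macOS version string such as '14.5'."""
    major, _, rest = version.partition(".")
    # Python builds linked against older SDKs report Big Sur and later as "10.16".
    if major == "10" and rest.startswith("16"):
        return 11
    return int(major)


def check_prerequisites() -> list[str]:
    """Return a list of problems; an empty list means the basics look ready."""
    problems = []
    version = platform.mac_ver()[0]  # empty string when not running on macOS
    if not version:
        problems.append("This does not appear to be macOS.")
    elif macos_major(version) < 11:
        problems.append(f"macOS {version} found; macOS 11 or later is required.")
    if shutil.which("ollama") is None:
        problems.append("The ollama CLI is not on PATH (try: brew install ollama).")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    for issue in issues:
        print("-", issue)
    if not issues:
        # Example next steps in Terminal; llama3.1 is only an example model tag.
        print("Ready. Try: ollama pull llama3.1 && ollama run llama3.1")
```

Some older Python builds report Big Sur and later as version 10.16 for compatibility reasons, which is why `macos_major` treats that value as 11.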
