How to Improve AI Apps With Automated Evals

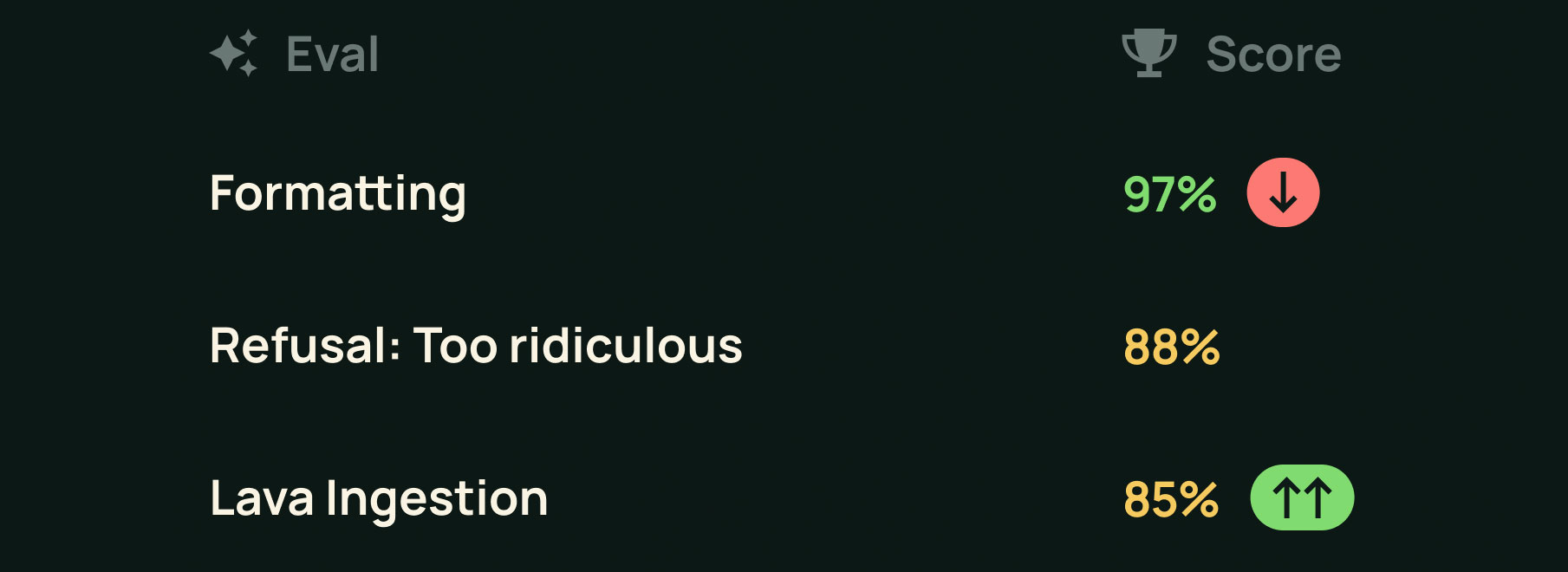

Evals (“evaluations”) offer a systematic way to measure, compare, and refine the responses an AI application produces. In this guide, we’ll cover: why evals matter in modern AI applications.

Although LLMs can perform arbitrary tasks, evaluating the qua.

One thing is certain: AI evals are becoming an increasingly important topic in building AI products. Many people across the industry mention AI evals as a crucial skill for building great AI products.

Here, I provide practical guidance, based on my research, hands-on work building applications, and lessons from fellow practitioners, for setting up an effective, iterative evaluation process. This type of process drives rapid progress and sets the organization up for GenAI app success.

Effective AI evals typically include four key components: setting the role, providing the context, defining the goal, and establishing terminology and labels. Let's examine each through a customer support AI assistant example:

1. Setting the role: establishing the context for the evaluating system.

You've built a shiny new AI-powered application: maybe a translation service, a summarizer, a chatbot, or a sentiment analyzer. It seems to work in your tests, but here’s the critical question: how do you really know if it's consistently effective, accurate, and meeting user needs?
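The four components named above (role, context, goal, and labels) can be sketched as a prompt template for an evaluating model. This is a minimal illustration, not a prescribed format: `JudgePromptSpec`, `build_judge_prompt`, `parse_label`, and the wording and labels in `SUPPORT_SPEC` are all hypothetical names and values chosen for the customer support example, and no particular model API is assumed.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class JudgePromptSpec:
    """Hypothetical container for the four components of an eval prompt."""
    role: str                # who the evaluating system is asked to be
    context: str             # what the evaluator needs to know about the app
    goal: str                # what the evaluator should decide
    labels: Dict[str, str]   # allowed labels and what each one means


def build_judge_prompt(spec: JudgePromptSpec, user_message: str, assistant_reply: str) -> str:
    """Assemble one evaluation prompt from the four components plus the exchange to grade."""
    label_lines = "\n".join(f"- {name}: {meaning}" for name, meaning in spec.labels.items())
    return (
        f"{spec.role}\n\n"
        f"Context: {spec.context}\n\n"
        f"Goal: {spec.goal}\n\n"
        f"Labels (answer with exactly one):\n{label_lines}\n\n"
        f"Customer message:\n{user_message}\n\n"
        f"Assistant reply:\n{assistant_reply}\n\n"
        "Label:"
    )


def parse_label(raw_output: str, spec: JudgePromptSpec) -> Optional[str]:
    """Return the matching label, or None if the evaluator answered off-script."""
    cleaned = raw_output.strip().lower()
    for name in spec.labels:
        if cleaned == name.lower():
            return name
    return None


# Illustrative values only; a real project would write its own.
SUPPORT_SPEC = JudgePromptSpec(
    role="You are reviewing replies written by a customer support AI assistant.",
    context="Customers contact support about orders, billing, and account access.",
    goal="Decide whether the reply resolves the customer's question.",
    labels={
        "resolved": "the reply fully answers the question",
        "unresolved": "the reply is wrong, incomplete, or off-topic",
    },
)

prompt = build_judge_prompt(SUPPORT_SPEC, "Where is my order?", "It ships tomorrow.")
```

Restricting the evaluator to a fixed label set and rejecting anything else (`parse_label` returns `None`) keeps the results easy to count and makes off-script answers visible instead of silently miscategorized.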

Today, we’re diving deep into AI evals: what they are, why they’re broken, who’s trying to fix them, and how you should be thinking about evaluation as a core product discipline in the AI era.

By the end of this post, you’ll have a clear playbook to systematically improve your AI app using evals, from setup to robust, automated eval loops that exceed user expectations.

To break through this plateau, we created a systematic approach to improving Lucy centered on evaluation. Our approach is illustrated by the diagram below. This diagram is a best-faith effort to illustrate my mental model for improving AI systems.

Learn how to scale up the evaluation of AI applications through automated evaluation techniques in this comprehensive tutorial. Explore the challenges of evaluating open-ended LLM tasks that typically require human assessment and discover practical solutions using automated evals.
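As a rough sketch of what an automated eval loop might look like, the example below runs a fixed set of test cases through an app, grades each output, and reports a pass rate that can be tracked across changes. Every name here (`EvalCase`, `run_evals`, `keyword_grader`, `toy_app`) is hypothetical, and the keyword check is only a stand-in: open-ended tasks usually need a stronger grader, such as human review or a model-based evaluator, plugged in through the same `grader` parameter.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected_keywords: List[str]  # simple proxy for "a good answer mentions these"


@dataclass(frozen=True)
class EvalResult:
    case: EvalCase
    output: str
    passed: bool


def keyword_grader(output: str, case: EvalCase) -> bool:
    """Pass only if every expected keyword appears in the output (case-insensitive)."""
    lowered = output.lower()
    return all(keyword.lower() in lowered for keyword in case.expected_keywords)


def run_evals(
    app: Callable[[str], str],
    cases: List[EvalCase],
    grader: Callable[[str, EvalCase], bool] = keyword_grader,
) -> List[EvalResult]:
    """Run every case through the app and grade the output."""
    results = []
    for case in cases:
        output = app(case.prompt)
        results.append(EvalResult(case=case, output=output, passed=grader(output, case)))
    return results


def pass_rate(results: List[EvalResult]) -> float:
    """Fraction of cases that passed; 0.0 for an empty run."""
    if not results:
        return 0.0
    return sum(result.passed for result in results) / len(results)


def toy_app(prompt: str) -> str:
    """Stand-in for a real AI app so the loop can run without any model call."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days."
    return "Sorry, I am not sure."


cases = [
    EvalCase("How do I get a refund?", ["refund", "30 days"]),
    EvalCase("How do I reset my password?", ["reset link"]),
]
results = run_evals(toy_app, cases)
failures = [result.case.prompt for result in results if not result.passed]
```

Keeping the failing prompts alongside the score matters as much as the score itself: the failures are what tell you which behavior to fix before the next run.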
