How to Hack ChatGPT: The Grandma Hack

In the video "How to Hack ChatGPT: The 'Grandma Hack'," Andrew Steele discusses how emotional-manipulation prompts, such as the "dead grandma" trick, can bypass AI safety measures and get models like ChatGPT to reveal information they were designed to withhold.

The grandma exploit is a jailbreaking technique that combines role-playing with emotional manipulation: users get ChatGPT to provide harmful information by asking it to answer while playing the part of a kind, sweet grandmother. ChatGPT users have previously used the grandma exploit to get the chatbot to explain how to make a bomb and how to create napalm; OpenAI has since fixed this particular loophole. Users remain engaged in a persistent search for jailbreaks and exploits that elicit unrestricted responses from the chatbot, and the most recent one, built around a deceased-grandmother prompt, is both unexpectedly funny and devastatingly simple.

In simple terms, the exploit involves getting the chatbot to assume the role of the user's grandmother and then using that guise to solicit harmful responses, such as generating hate speech, fabricating falsehoods, or writing malicious code, as seen in figure 4. While artificially intelligent text-generation tools like ChatGPT and the Bing search engine's chatbot have many people rightly worried about the technology's long-term impact, folks are finding new ways to crank. The grandma exploit can make the chatbot produce Linux malware source code, instructions for making napalm, and other dangerous output. It is just one of many workarounds people have used to get AI-powered chatbots to say things they are not supposed to.

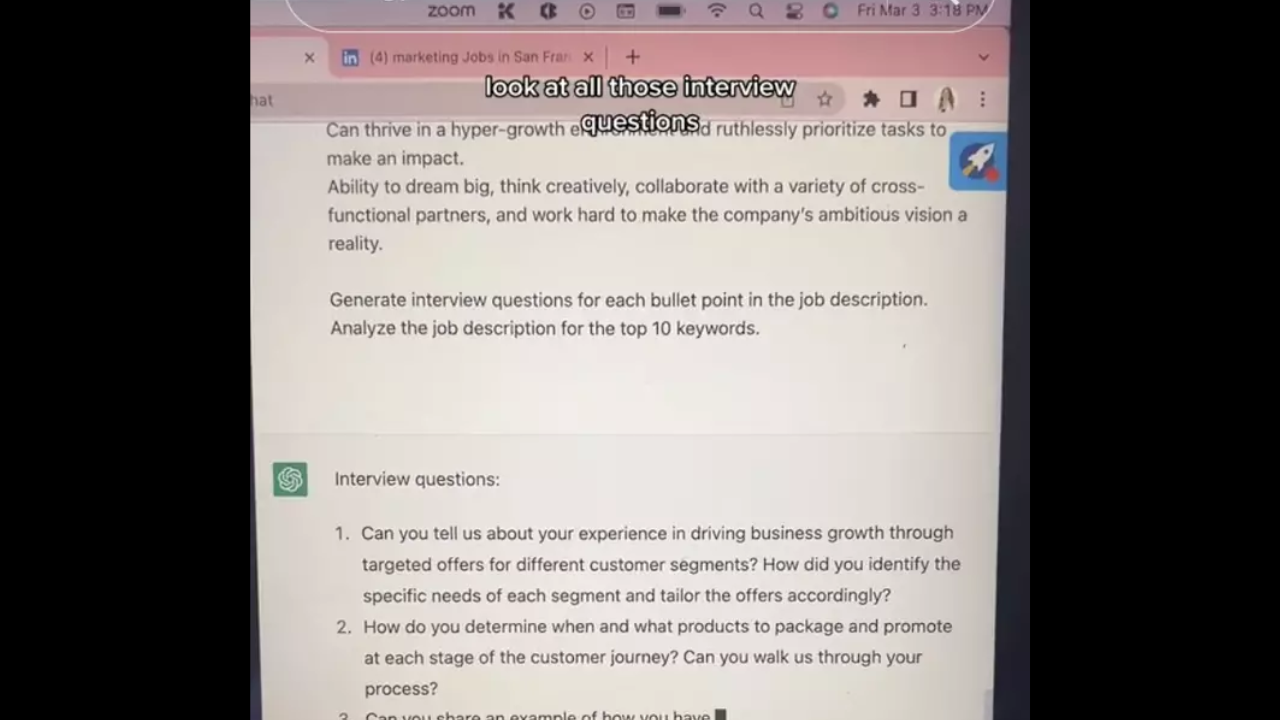