How to Get the Best Deep Learning Performance with OpenVINO

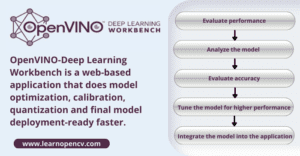

Post-training quantization is designed to optimize the inference of deep learning models by applying 8-bit integer (INT8) quantization after training, without requiring model retraining or fine-tuning. Without significant re-architecting or rewriting of the source code, you can now speed up the inference step using the inference engine provided by Intel's OpenVINO toolkit.
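
To make the idea of post-training INT8 quantization concrete, here is a minimal pure-Python sketch of the simplest variant: symmetric, per-tensor quantization whose scale is derived from values observed on a small calibration set. The function names (`calibrate_scale`, `quantize`, `dequantize`) are hypothetical and are not part of the OpenVINO API. OpenVINO's own tooling uses more elaborate calibration schemes, so treat this only as an illustration of the principle: no retraining, just a scale computed from data.

```python
from typing import List


def calibrate_scale(calibration_values: List[float]) -> float:
    """Derive a symmetric INT8 scale from the largest magnitude seen during calibration."""
    max_abs = max(abs(v) for v in calibration_values)
    # Map the range [-max_abs, max_abs] onto the signed 8-bit range [-127, 127].
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: List[float], scale: float) -> List[int]:
    """Round each value to the nearest step and clamp it into [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q_values: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate floating-point values."""
    return [q * scale for q in q_values]


if __name__ == "__main__":
    tensor = [0.5, -1.27, 0.01, 1.0]       # illustrative values only
    scale = calibrate_scale(tensor)         # no retraining: the scale comes from observed data
    codes = quantize(tensor, scale)
    print(codes)
    print(dequantize(codes, scale))
```

Within the calibrated range, each reconstructed value differs from the original by at most half a quantization step (`scale / 2`). Values outside that range are clamped, which is why a representative calibration set matters.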

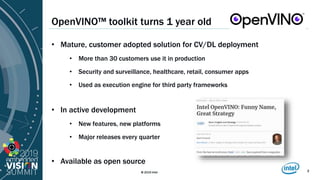

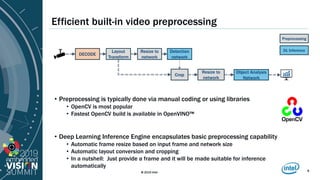

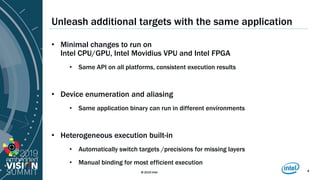

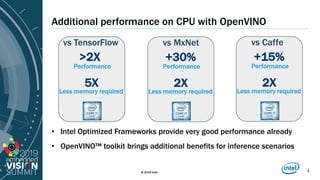

When deploying deep learning models, particularly object detection models such as Ultralytics YOLO, achieving optimal performance is crucial. This guide covers how to use Intel's OpenVINO toolkit to optimize inference, with a focus on latency and throughput. In this blog, we give an overview of how to use OpenVINO to optimize the inference performance of a trained deep learning model. The OpenVINO™ Deep Learning Workbench provides throughput-versus-latency charts for different numbers of streams, requests, and batch sizes, which help you find the performance sweet spot. OpenVINO makes it easy to move deep learning algorithms from research to deployment.
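
Reading a throughput-versus-latency chart usually comes down to one question: which configuration delivers the most throughput while staying within a latency budget? The sketch below encodes that selection rule in plain Python. It assumes you have already collected measurements for each combination of streams, requests, and batch size, for example from benchmark runs. `BenchmarkPoint` and `find_sweet_spot` are hypothetical names used only for this illustration, not OpenVINO APIs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BenchmarkPoint:
    """One measured configuration (hypothetical record; fill it from your own benchmarks)."""
    streams: int
    requests: int
    batch_size: int
    throughput_fps: float
    latency_ms: float


def find_sweet_spot(points: List[BenchmarkPoint],
                    latency_budget_ms: float) -> Optional[BenchmarkPoint]:
    """Return the highest-throughput configuration whose latency fits the budget."""
    feasible = [p for p in points if p.latency_ms <= latency_budget_ms]
    if not feasible:
        return None  # no configuration meets the latency requirement
    # Prefer higher throughput; break ties in favour of the lower-latency configuration.
    return max(feasible, key=lambda p: (p.throughput_fps, -p.latency_ms))
```

The latency budget is the design knob here. A real-time application (one camera, one user) typically needs a tight budget, which favours few streams and small batches. An offline batch job can accept a loose budget and pick the configuration at the top of the throughput curve.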

Did you know that with just one extra line of code you can get a boost of more than 50% in the performance of your deep learning model? We saw a jump from 30 fps to 47 fps when running the ONNX Tiny YOLOv2 object detection model on an i7 CPU¹.

OpenVINO is an open-source software toolkit for optimizing and deploying deep learning models. Its inference optimizations boost deep learning performance in computer vision, automatic speech recognition, generative AI, natural language processing with large and small language models, and many other common tasks.

After going through this final post in the OpenVINO series, you should be able to use the Deep Learning Workbench (DL Workbench) for your own projects and optimize your deep learning models as required. In this article, we'll guide you through the installation, optimization, and deployment of models using OpenVINO so you can use it to its full potential. First, let's understand what exactly the DL Workbench is and why it's important.
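
The 30 fps and 47 fps figures quoted for Tiny YOLOv2 can be turned into a relative speedup and a per-frame time with simple arithmetic. The helper names below are illustrative only.

```python
def speedup_percent(baseline_fps: float, optimized_fps: float) -> float:
    """Relative throughput gain of the optimized run over the baseline, in percent."""
    return (optimized_fps - baseline_fps) / baseline_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time spent per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    print(f"{speedup_percent(30, 47):.1f}%")                             # 56.7%
    print(f"{frame_time_ms(30):.1f} ms -> {frame_time_ms(47):.1f} ms")   # 33.3 ms -> 21.3 ms
```

Frame time is the reciprocal of fps only when frames are processed one at a time. With several parallel streams or requests, throughput can rise without each individual frame finishing any sooner.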