How to Evaluate Large Language Models with Hugging Face

In machine learning, model evaluation assesses how well a model performs the specific task it was designed for. Evaluating large language models (LLMs) is essential: you need to understand how well they perform and make sure they meet your standards. The Hugging Face Evaluate library offers a helpful set of tools for this task. This guide shows you how to use the Evaluate library to assess LLMs, with practical code examples.
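To make reference-based scoring concrete, here is a minimal plain-Python sketch of two common metrics: exact match and token-level F1 between model outputs and reference answers. The Evaluate library provides ready-made versions, for example `evaluate.load("exact_match")` followed by `.compute(predictions=..., references=...)`. This sketch reimplements the idea so it runs offline. Its normalization (lowercasing and collapsing whitespace) is a simplifying assumption, not the library's exact preprocessing.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace (a simplified, assumed normalization)."""
    return " ".join(text.lower().split())


def exact_match(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions equal to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(predictions)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall over overlapping tokens."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a perfect match; only one empty counts as zero.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it
    # appears in both strings.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    preds = ["Paris", "the capital is Berlin", "4"]
    refs = ["paris", "Berlin", "5"]
    print(exact_match(preds, refs))  # 1 of 3 predictions match exactly
    print([round(token_f1(p, r), 3) for p, r in zip(preds, refs)])
```

Exact match is strict, so the second prediction scores zero even though it contains the right answer. Token F1 gives it partial credit, which is why the two are often reported together.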

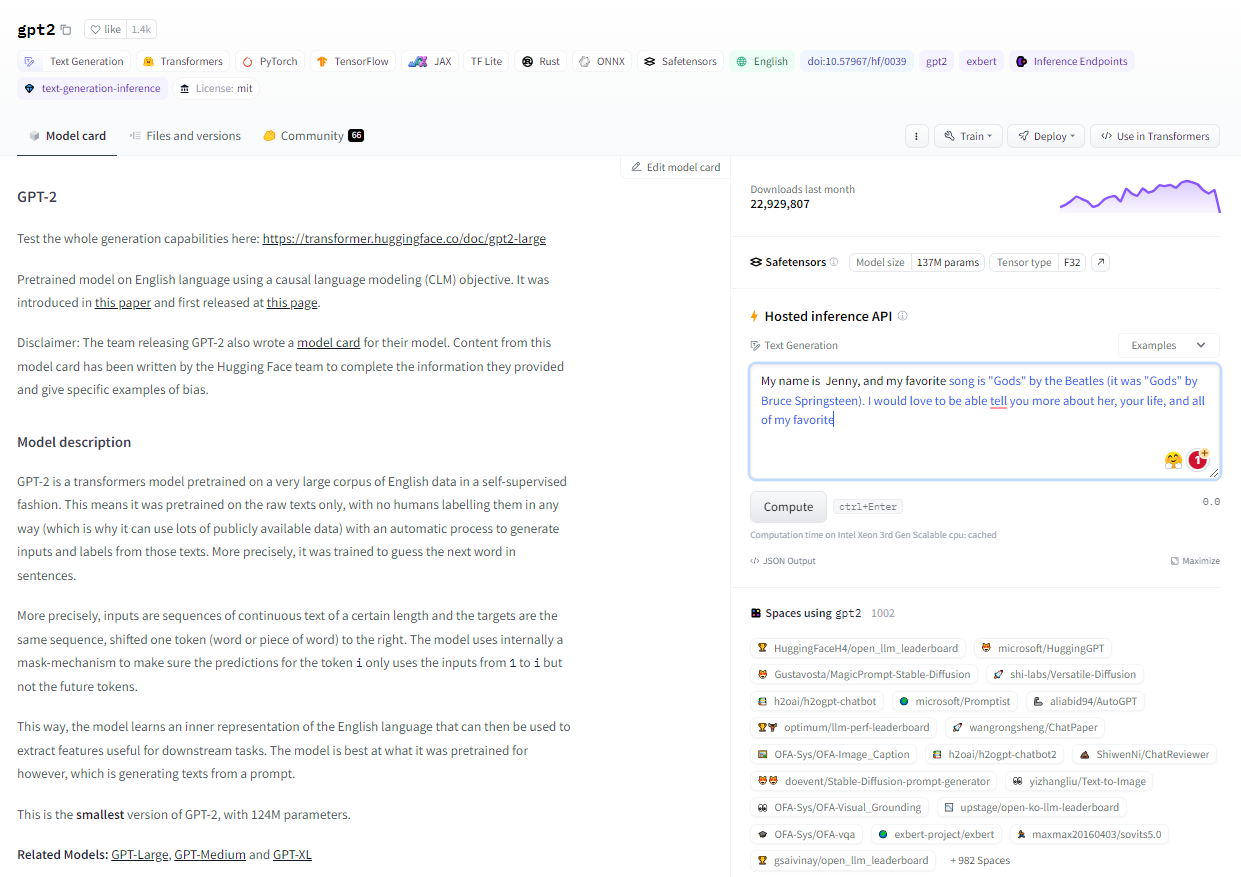

Let's work through bias evaluation in three prompt-based tasks focused on harmful language: toxicity, polarity, and hurtfulness. The workflow introduced here demonstrates how to use Hugging Face libraries for bias analysis and does not depend on the specific prompt-based dataset used.

LLM-as-a-judge uses other language models to evaluate a model's outputs. This approach bridges automated and human evaluation: it scales like an automated metric while capturing more nuance than standard metrics. Careful design is still needed to mitigate the judge's own biases and keep its verdicts aligned with human judgment.

After testing dozens of LLM evaluation methods across client projects, I've found the Hugging Face Evaluate library to be an indispensable toolkit, one I'll unpack step by step in this guide. Let's cut through the abstraction and look at concrete methods for assessing whether an LLM truly meets your project's needs.

For fine-tuning, Hugging Face AutoTrain removes the need to write training code by offering a zero-code interface. Its visual workflow covers everything from data preparation to model deployment.
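For the toxicity task in the bias evaluation described above, one approach is to generate completions for prompts that mention different groups, score each completion with a toxicity classifier, and summarize the scores per group. The Evaluate library ships a `toxicity` measurement (`evaluate.load("toxicity", module_type="measurement")`) that can aggregate scores as a maximum or as a ratio above a threshold. The plain-Python sketch below reproduces only that summarizing step, so it runs without downloading a model. The scores, group names, and the 0.5 threshold are hypothetical placeholders.

```python
def max_toxicity(scores: list[float]) -> float:
    """Highest toxicity score among all scored completions."""
    if not scores:
        raise ValueError("need at least one score")
    return max(scores)


def toxicity_ratio(scores: list[float], threshold: float = 0.5) -> float:
    """Fraction of completions scoring at or above the (assumed) threshold."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(score >= threshold for score in scores) / len(scores)


def summarize_by_group(
    scores_by_group: dict[str, list[float]], threshold: float = 0.5
) -> dict[str, dict[str, float]]:
    """Per-group summaries, so toxicity can be compared across groups."""
    return {
        group: {
            "max_toxicity": max_toxicity(scores),
            "toxicity_ratio": toxicity_ratio(scores, threshold),
        }
        for group, scores in scores_by_group.items()
    }


if __name__ == "__main__":
    # Hypothetical classifier scores for completions of prompts that mention
    # two different groups; real scores would come from a toxicity model.
    scores = {
        "group_a": [0.02, 0.10, 0.71, 0.05],
        "group_b": [0.01, 0.03, 0.04, 0.02],
    }
    for group, summary in summarize_by_group(scores).items():
        print(group, summary)
```

A large gap between groups in either statistic would be worth investigating as a possible bias signal. The same per-group structure could hold polarity or hurtfulness scores instead of toxicity.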

In this blog, I want to give you a comprehensive understanding of the application development process with large language models (LLMs), including key techniques for using pretrained models.

Hugging Face is a company that provides a wide range of open-source Transformer code, particularly for large language models. It is best known for its `transformers` Python package, which offers implementations of Transformer architectures and pretrained models for a variety of tasks.

Hugging Face has also introduced AI Sheets, a free, open-source, no-code platform designed to simplify the use of LLMs for dataset creation, transformation, and analysis.

AlpacaEval is an automated evaluation framework designed to assess how well language models follow instructions. It uses a GPT-4-based judge to compare each model output against a reference output and reports the resulting win rate.
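A win rate like the one AlpacaEval reports can be computed from pairwise judge verdicts. The plain-Python sketch below also shows one common way to reduce a judge's position bias: ask for a verdict twice, with the two outputs presented in both orders, and treat disagreement as a tie. The verdict labels (`"model"`, `"reference"`, `"tie"`), the tie-as-half-a-win convention, and the hard-coded verdicts are assumptions for illustration, not AlpacaEval's exact scoring. In practice each verdict would come from prompting a judge model.

```python
from typing import Literal

# Hypothetical verdict labels, already mapped back to which output won.
Verdict = Literal["model", "reference", "tie"]


def combine_swapped(verdict_ab: Verdict, verdict_ba: Verdict) -> Verdict:
    """Combine verdicts collected with the two outputs shown in both orders.

    A position-consistent judge returns the same label twice; disagreement
    suggests position bias, so it is treated as a tie.
    """
    if verdict_ab == verdict_ba:
        return verdict_ab
    return "tie"


def win_rate(verdicts: list[Verdict]) -> float:
    """Percentage of comparisons won by the model; a tie counts as half a win."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    points = {"model": 1.0, "tie": 0.5, "reference": 0.0}
    return 100 * sum(points[v] for v in verdicts) / len(verdicts)


if __name__ == "__main__":
    # Hypothetical judge outputs for four instructions, each judged in both orders.
    pairs = [
        ("model", "model"),
        ("model", "reference"),
        ("reference", "reference"),
        ("tie", "model"),
    ]
    verdicts = [combine_swapped(a, b) for a, b in pairs]
    print(verdicts)
    print(win_rate(verdicts))
```

Judging each pair twice doubles the cost of evaluation, which is the price of the extra robustness against the judge favoring whichever answer it sees first.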
