How to Align Large Language Models (LLMs) Through Data

Aligning large language models (LLMs) effectively is crucial for their performance and relevance. This guide highlights the importance of high-quality, well-curated datasets, precise data filtering, and comprehensive benchmarking in that process. In this post, we'll look at the various approaches available for adapting LLMs to domain data.

In this section, we aim to understand how alignment performance scales with the amount of data used, and whether a smaller subset can provide enough signal to align the model comparably to the full sample.

To address these challenges, we propose aligning LLMs through representation editing. The core of our method is to view a pre-trained autoregressive LLM as a discrete-time stochastic dynamical system. To achieve alignment for specific objectives, we introduce external control signals into the state space of this language dynamical system.

Discover how to enhance the performance of large language models (LLMs) with effective data alignment strategies. Learn practical techniques for better results.

We first discuss traditional RL-based alignment methods and then pivot to non-RL methods like KTO (Kahneman-Tversky Optimization). 🤔 Why align? TL;DR: to prevent LLMs from generating illegal, factually incorrect, or redundant responses.
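To make the representation-editing idea above more concrete, here is a toy sketch in plain Python. It is not the method from the quoted work: the hidden state is just a list of floats, the scorer `value` is a hypothetical linear function standing in for whatever objective is being aligned to, and `edit_state`, `step_size`, and `n_steps` are illustrative names. It only shows the general shape of the idea, which is adding a control signal to a state so that a score improves.

```python
# Toy illustration only. A real LLM hidden state is a high-dimensional tensor;
# here it is a short list of floats, and the "value function" is a
# hypothetical linear scorer v(h) = w . h.

def value(h, w):
    """Linear score of a hidden state; higher means 'more aligned' in this toy."""
    return sum(hi * wi for hi, wi in zip(h, w))


def edit_state(h, w, step_size=0.1, n_steps=5):
    """Build a control signal u by gradient ascent on the linear value, then add it to h.

    For a linear value the gradient with respect to h is simply w, so each
    step grows the control signal by step_size * w.
    """
    u = [0.0] * len(h)
    for _ in range(n_steps):
        u = [ui + step_size * wi for ui, wi in zip(u, w)]
    edited = [hi + ui for hi, ui in zip(h, u)]
    return edited, u


h = [0.5, -1.0, 2.0]   # hypothetical hidden state
w = [1.0, 0.0, -0.5]   # hypothetical value-function weights
h_edited, u = edit_state(h, w)
print(value(h, w), value(h_edited, w))
```

The edited state scores higher than the original under the toy scorer, while the model weights (absent here) would stay untouched. That is the appeal of intervening on the state at inference time instead of fine-tuning.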

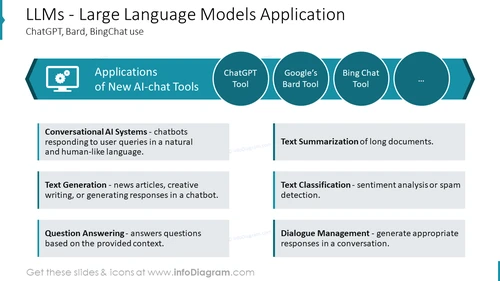

In our experiments, we simulate and evaluate populations of unique individuals in LLMs using these two inference-time techniques. Post-training alignment techniques such as RLHF and RLAIF are now standard parts of LLM development.

Training a large language model (LLM) requires three phases: pre-training, fine-tuning, and alignment. Every step of this process demands data, and a lot of it. Alignment requires data in the form of prompts, the model's responses to those prompts, and some form of human feedback for each prompt-response pairing (whether direct or by proxy).

Our comprehensive analysis of alignment between large language models (LLMs) and geometric deep models (GDMs) reveals critical insights that can guide more principled protein-focused MLLM development.

Learn how to align large language models (LLMs) with human intentions, ethical standards, and societal norms to prevent bias and harmful outputs.
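The alignment data described above (a prompt, the model's response, and a feedback signal for that pairing) can be sketched as a small record type. This is a minimal illustration under assumptions, not a standard schema: the class `FeedbackRecord`, its field names, and the helpers `keep_valid` and `label_counts` are all hypothetical. The feedback is modeled as a binary desirable/undesirable label, which is the kind of signal KTO-style methods consume; pairwise preference methods would instead store a chosen and a rejected response per prompt.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackRecord:
    """One alignment example: prompt, model response, and a feedback label."""
    prompt: str
    response: str
    desirable: bool                 # True = good response, False = bad response
    feedback_source: str = "human"  # "human" (direct) or "proxy" (e.g. a reward model)


def keep_valid(records):
    """Drop records whose prompt or response is empty or whitespace-only."""
    return [r for r in records if r.prompt.strip() and r.response.strip()]


def label_counts(records):
    """Count desirable vs. undesirable examples to check label balance."""
    return Counter(r.desirable for r in records)


records = [
    FeedbackRecord("What is 2 + 2?", "4", True),
    FeedbackRecord("What is 2 + 2?", "5", False, feedback_source="proxy"),
    FeedbackRecord("", "orphan response", True),  # malformed: no prompt
]

clean = keep_valid(records)
counts = label_counts(clean)
print(len(clean), dict(counts))
```

Checking validity and label balance before training is a cheap first filter; real pipelines typically add deduplication and quality scoring on top.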
