Handling Imbalanced Datasets in Machine Learning and Deep Learning

A key component of machine learning classification tasks is handling imbalanced data, which is characterized by a skewed class distribution in which one class is considerably overrepresented relative to the others. Let’s consider undersampling, oversampling, SMOTE, and ensemble methods for combating imbalanced datasets in deep learning.
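To make the SMOTE idea mentioned above more concrete, here is a minimal NumPy sketch of SMOTE-style interpolation: each synthetic point is placed on the line segment between a minority example and one of its nearest minority-class neighbours. The function name `smote_like_oversample`, its parameters, and the toy data are illustrative assumptions, not the API of any library; in practice a maintained implementation such as the one in imbalanced-learn is the safer choice.

```python
import numpy as np

def smote_like_oversample(X_min, n_new, k=5, seed=0):
    """Create n_new synthetic minority samples by interpolating between a
    randomly chosen minority sample and one of its k nearest minority
    neighbours. Simplified sketch; assumes at least two minority samples."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot use more neighbours than there are other points

    # Pairwise Euclidean distances within the minority class.
    diffs = X_min[:, None, :] - X_min[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_new)   # starting sample for each new point
    pick = rng.integers(0, k, size=n_new)   # which of its k neighbours to use
    nbr = neighbours[base, pick]
    gap = rng.random((n_new, 1))            # position along the segment, in [0, 1)
    return X_min[base] + gap * (X_min[nbr] - X_min[base])

# Toy minority class with four 2-D points (made-up values).
X_minority = np.array([[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]])
synthetic = smote_like_oversample(X_minority, n_new=6, k=2)
```

Because every synthetic point is a convex combination of two real minority points, the new samples stay inside the region already occupied by the minority class rather than being exact duplicates.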

In this post you will discover the tactics that you can use to deliver great results on machine learning datasets with imbalanced data. Kick-start your project with the book Imbalanced Classification with Python, which includes step-by-step tutorials and the Python source code files for all examples.

Handling imbalanced datasets in deep learning is a challenge, but it's not impossible. With the right strategies and a bit of experimentation, you can build models that catch those rare, important cases.

One way to handle an imbalanced dataset is to downsample and upweight the majority class. Downsampling (in this context) means training on a ...

Take your machine learning expertise to the next level with this essential guide, utilizing libraries like imbalanced-learn, PyTorch, scikit-learn, pandas, and NumPy to maximize model performance and tackle imbalanced data.
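The downsample-and-upweight idea can be sketched in a few lines of NumPy. This sketch assumes that downsampling keeps roughly one in every `factor` majority examples and that upweighting gives each kept majority example a sample weight equal to that same factor; the function name, the `factor` parameter, and the toy data are hypothetical and chosen for illustration only.

```python
import numpy as np

def downsample_and_upweight(X, y, majority_label, factor, seed=0):
    """Keep about 1/factor of the majority class and return per-example
    weights that compensate for the examples that were dropped."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X)
    y = np.asarray(y)
    maj_idx = np.flatnonzero(y == majority_label)
    rest_idx = np.flatnonzero(y != majority_label)

    # Downsample: draw a subset of the majority class without replacement.
    n_keep = max(1, len(maj_idx) // factor)
    kept = rng.choice(maj_idx, size=n_keep, replace=False)

    idx = np.concatenate([kept, rest_idx])
    # Upweight: each kept majority example stands in for `factor` originals.
    weights = np.where(y[idx] == majority_label, float(factor), 1.0)
    return X[idx], y[idx], weights

# Toy data: 90 majority (label 0) and 10 minority (label 1) examples.
y = np.array([0] * 90 + [1] * 10)
X = np.arange(100).reshape(-1, 1)
X_ds, y_ds, w = downsample_and_upweight(X, y, majority_label=0, factor=9)
```

The returned weights can be passed to any estimator or loss function that accepts per-example weights, so that the model trains on a balanced batch while the total weight of the majority class still reflects its original size.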

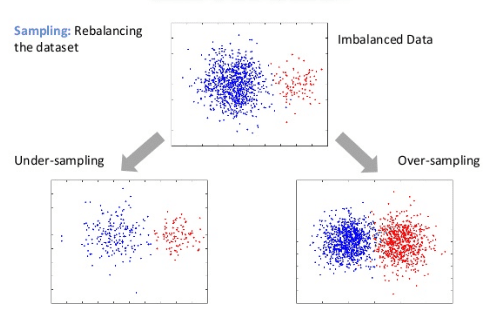

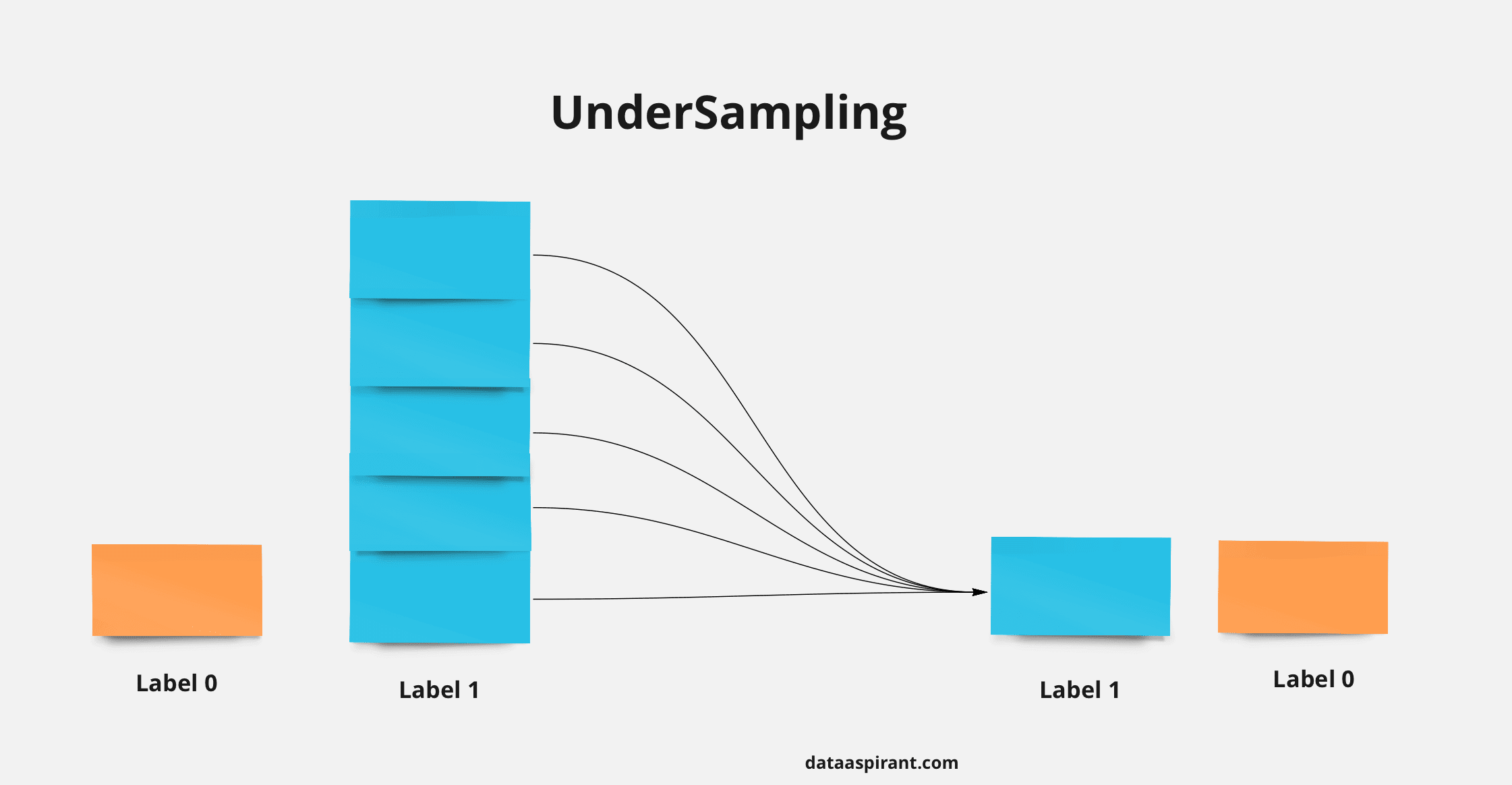

In this guide, we’ll try out different approaches to solving the imbalance issue for classification tasks. That isn’t the only issue on our hands: our dataset is real, and we’ll have to deal with multiple problems, including imputing missing data and handling categorical features.

In this detailed post, we’ll go into the depth of imbalanced datasets, explore the impacts on training, and demonstrate effective strategies for handling such datasets.

Resampling, which modifies the sample distribution, is a frequently used technique for handling very unbalanced datasets. This can be accomplished by either oversampling, which adds more examples from the minority class, or undersampling, which removes samples from the majority class.

Handling imbalanced data is a crucial step in many machine learning workflows. In this article, we have taken a look at five different ways of going about this: resampling methods, ensemble strategies, class weighting, correct evaluation measures, and generating artificial samples.
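The random oversampling and undersampling described above can be sketched with plain NumPy. The helper `random_resample` and its `strategy` argument are assumptions made for this example rather than part of any library; it balances all classes either up to the largest class size or down to the smallest.

```python
import numpy as np

def random_resample(X, y, strategy="over", seed=0):
    """Balance classes by random oversampling ("over") or random
    undersampling ("under"). Illustrative sketch only."""
    if strategy not in ("over", "under"):
        raise ValueError("strategy must be 'over' or 'under'")
    rng = np.random.default_rng(seed)
    X = np.asarray(X)
    y = np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max() if strategy == "over" else counts.min()

    chosen = []
    for cls in classes:
        idx = np.flatnonzero(y == cls)
        if strategy == "over":
            # Keep every original example and add random duplicates.
            extra = rng.choice(idx, size=target - len(idx), replace=True)
            idx = np.concatenate([idx, extra])
        else:
            # Keep a random subset and discard the rest.
            idx = rng.choice(idx, size=target, replace=False)
        chosen.append(idx)

    chosen = np.concatenate(chosen)
    return X[chosen], y[chosen]

# Toy data: 90 examples of class 0 and 10 examples of class 1.
y = np.array([0] * 90 + [1] * 10)
X = np.arange(100).reshape(-1, 1)
X_over, y_over = random_resample(X, y, strategy="over")
X_under, y_under = random_resample(X, y, strategy="under")
```

Resampling is normally applied to the training split only, so that validation and test data keep the original class distribution and the evaluation reflects real-world conditions.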

