Hadoop Batch Processing Simplified 101 Learn Hevo

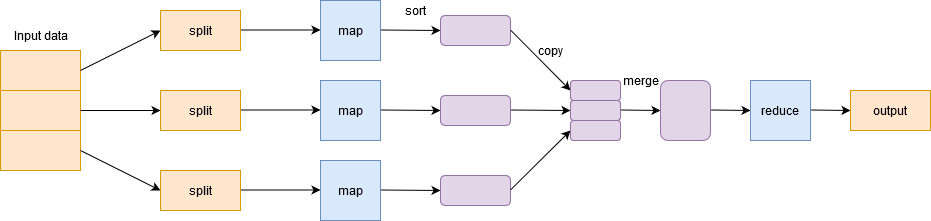

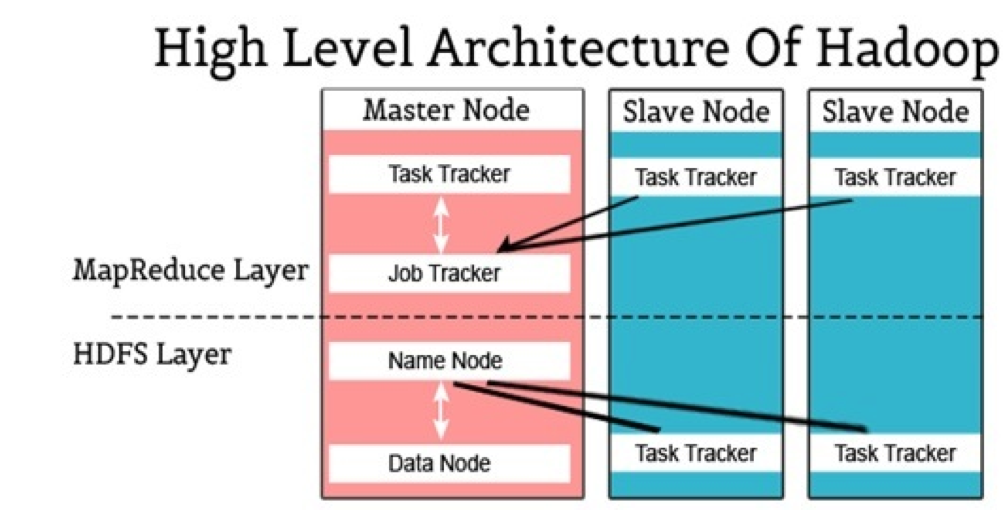

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. Apache Hadoop (/həˈduːp/) is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model.
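To make the MapReduce model more concrete, here is a minimal, single-process Python sketch of the classic word-count example. It only simulates the three phases (map, shuffle/sort, reduce) in memory. It does not use Hadoop's actual Java API, and the function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Hypothetical mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Simulated shuffle/sort: group intermediate values by key, sorted by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Hypothetical reducer: sum all counts collected for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce over the input lines in one process."""
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["Hadoop stores data", "Hadoop processes data in batches"]
    print(word_count(docs))
    # {'batches': 1, 'data': 2, 'hadoop': 2, 'in': 1, 'processes': 1, 'stores': 1}
```

In a real Hadoop job the mapper and reducer run as separate tasks on different machines and the framework performs the shuffle over the network; the sketch keeps everything in one process so the data flow is easy to follow.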

Hadoop is designed to scale up from a single computer to thousands of clustered computers, with each machine offering local computation and storage. In this way, Hadoop can efficiently store and process large datasets in a distributed computing environment. Because it is based on the MapReduce programming model, it can process those datasets in parallel.

Apache Hadoop runs distributed applications and stores large amounts of structured, semi-structured, and unstructured data on clusters of inexpensive commodity hardware, and it is credited with democratizing big data analytics. It makes it easier to use all the storage and processing capacity of the servers in a cluster and to execute distributed processes against huge amounts of data. Hadoop also provides the building blocks on which other services and applications can be built.
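The idea that each machine offers local storage and computation can be illustrated with a simplified model of how HDFS, Hadoop's distributed file system, splits a file into fixed-size blocks and keeps several copies of each block on different nodes. This Python sketch assumes the common HDFS defaults of a 128 MB block size and a replication factor of 3 (both configurable through `dfs.blocksize` and `dfs.replication`). The round-robin placement in the hypothetical `place_replicas` function is a deliberate simplification, not HDFS's actual rack-aware placement policy.

```python
from typing import Dict, List

# Assumed values: common HDFS defaults, configurable via dfs.blocksize / dfs.replication.
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, in bytes
REPLICATION = 3


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> List[int]:
    """Return the size in bytes of each block; only the last block may be smaller."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks: int, nodes: List[str],
                   replication: int = REPLICATION) -> Dict[int, List[str]]:
    """Hypothetical round-robin placement: store each block on `replication`
    distinct nodes. Real HDFS uses a rack-aware policy instead."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


if __name__ == "__main__":
    MB = 1024 * 1024
    blocks = split_into_blocks(300 * MB)
    print([size // MB for size in blocks])  # [128, 128, 44]
    cluster = ["node1", "node2", "node3", "node4"]
    for block_id, holders in place_replicas(len(blocks), cluster).items():
        print(block_id, holders)
```

Because every block lives on several nodes, a scheduler can run the task for a block on one of the machines that already holds it, moving computation to the data instead of copying data across the network, and the extra copies let the cluster tolerate the loss of a node.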

In short, Hadoop is an open-source distributed processing framework, written in Java, that manages data processing and storage for big data applications in scalable clusters of commodity servers. It provides massive storage for any kind of data, enormous processing power, and the ability to run a very large number of concurrent tasks or jobs. Before Hadoop, traditional systems were limited mainly to processing structured data in an RDBMS and could not cope with the volume and variety of big data.

The Hadoop documentation includes the information you need to get started. Begin with the single-node setup, which shows how to set up a single-node Hadoop installation.
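Hadoop's ability to run many tasks concurrently rests on splitting the input into independent pieces and processing each piece in its own task. The Python sketch below imitates that pattern on a single machine with a thread pool: each hypothetical `map_task` counts the words in its own split, and the partial results are merged at the end, similar in spirit to a combiner followed by a reducer. The names `make_splits`, `map_task`, and `run_job` are illustrative assumptions, and threads merely stand in for the separate task processes a real cluster would run on many nodes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def make_splits(lines: List[str], lines_per_split: int) -> List[List[str]]:
    """Cut the input into independent splits of at most `lines_per_split` lines."""
    if lines_per_split < 1:
        raise ValueError("lines_per_split must be at least 1")
    return [lines[i:i + lines_per_split] for i in range(0, len(lines), lines_per_split)]


def map_task(split: List[str]) -> Counter:
    """Hypothetical map task: count words in one split, independently of the others."""
    counts: Counter = Counter()
    for line in split:
        counts.update(line.lower().split())
    return counts


def run_job(lines: List[str], lines_per_split: int = 2, max_workers: int = 4) -> Counter:
    """Run one task per split concurrently, then merge the partial counts."""
    splits = make_splits(lines, lines_per_split)
    total: Counter = Counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for partial in pool.map(map_task, splits):
            total.update(partial)  # the merge step plays the role of the reducer
    return total


if __name__ == "__main__":
    text = ["Hadoop splits input", "each split is a task", "tasks run in parallel"]
    print(sorted(run_job(text, lines_per_split=1).items()))
```

Because the splits share no state, adding workers (or, in Hadoop, nodes) lets more of them run at once. In CPython, a `ProcessPoolExecutor` would give true parallelism for CPU-heavy work; threads are used here only to keep the sketch short.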
