Hadoop And Big Data Pdf Apache Hadoop Map Reduce

Big Data Analysis Using Hadoop MapReduce

In the initial MapReduce implementation, all keys and values were strings. Users were expected to convert the types, if required, inside their map and reduce functions. Hadoop MapReduce is a software framework for easily writing applications that process vast amounts of data (multi-terabyte data sets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.
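The Python sketch below shows what string-typed keys and values mean in practice. It simulates one map function and one reduce function over tab-separated text records, and an in-memory grouping step stands in for the framework's shuffle. The record layout (`station<TAB>temperature`), the sample data, and the function names are hypothetical. The point is that the reduce function must convert the string values to integers before comparing them.

```python
from collections import defaultdict

# Hypothetical input: each record is a text line "station<TAB>temperature".
RECORDS = [
    "s1\t12",
    "s2\t-3",
    "s1\t25",
    "s2\t7",
    "s2\t10",
]


def map_fn(line: str) -> list[tuple[str, str]]:
    """Map: emit (station, temperature) with both key and value as strings."""
    station, temp = line.split("\t")
    return [(station, temp)]


def reduce_fn(key: str, values: list[str]) -> tuple[str, str]:
    """Reduce: values arrive as strings, so convert them to int before comparing."""
    # Comparing the raw strings would be lexicographic ("7" > "10"), which is wrong here.
    max_temp = max(int(v) for v in values)
    return key, str(max_temp)


def run_job(records: list[str]) -> dict[str, str]:
    # Simulated shuffle: group every mapped value under its key.
    groups: dict[str, list[str]] = defaultdict(list)
    for record in records:
        for k, v in map_fn(record):
            groups[k].append(v)
    # Reduce each key group; the output pairs are strings again.
    return dict(reduce_fn(k, vs) for k, vs in sorted(groups.items()))


if __name__ == "__main__":
    print(run_job(RECORDS))  # {'s1': '25', 's2': '10'}
```

Without the `int(...)` conversion, station `s2` would report `"7"`, because string comparison orders `"7"` after `"10"`.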

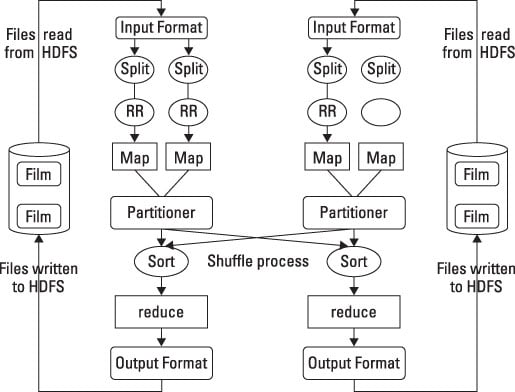

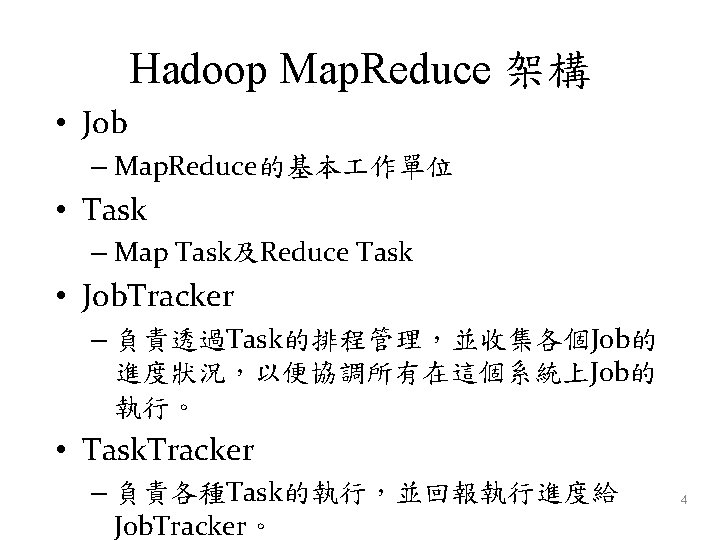

Hadoop MapReduce for Big Data Dummies

The term MapReduce (MR) can refer to the programming model, the execution framework that runs it, or a specific implementation. The intended usage is usually clear from context. In classic Hadoop MapReduce (version 1.x), the JobTracker enqueues jobs and schedules individual tasks. It also attempts to assign tasks to nodes that already store their input data, which is known as data locality.

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage.

To take advantage of the parallel processing that Hadoop provides when analyzing data, we need to express our query as a MapReduce job. Such a job breaks the processing into two phases: map and reduce.

We will compare this with the existing MapReduce technique on Hadoop to achieve high performance and efficiency for large volumes of data. The Hadoop distributed architecture and the MapReduce programming model are analyzed here. Keywords: MapReduce, Hadoop, distributed computing.
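The data-locality preference described above can be illustrated with a toy scheduler. When a worker node asks for work, the scheduler first looks for a pending map task whose input block has a replica on that node. Only if no such task exists does it hand out a non-local task, whose input must then be read over the network. The class names, the replica layout, and the simple FIFO fallback rule are illustrative assumptions, not Hadoop's actual scheduler code. Real Hadoop schedulers also weigh rack locality, available task slots or containers, and fairness between jobs.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MapTask:
    task_id: str
    # Nodes holding a replica of this task's input block (hypothetical layout).
    replica_nodes: set[str]


@dataclass
class ToyScheduler:
    """Hypothetical FIFO scheduler that prefers node-local map tasks."""
    pending: list[MapTask] = field(default_factory=list)

    def submit(self, tasks: list[MapTask]) -> None:
        """Enqueue a job's map tasks in submission order."""
        self.pending.extend(tasks)

    def assign(self, node: str) -> Optional[tuple[str, bool]]:
        """Hand one task to `node`; return (task_id, is_local), or None if idle."""
        # First choice: the earliest pending task whose input is stored on this node.
        for i, task in enumerate(self.pending):
            if node in task.replica_nodes:
                self.pending.pop(i)
                return task.task_id, True
        # Fallback: the earliest remaining task; its input must be read remotely.
        if self.pending:
            return self.pending.pop(0).task_id, False
        return None


if __name__ == "__main__":
    sched = ToyScheduler()
    sched.submit([
        MapTask("m0", {"nodeA", "nodeB"}),
        MapTask("m1", {"nodeB", "nodeC"}),
        MapTask("m2", {"nodeC"}),
    ])
    print(sched.assign("nodeC"))  # ('m1', True)
    print(sched.assign("nodeA"))  # ('m0', True)
    print(sched.assign("nodeA"))  # ('m2', False)
    print(sched.assign("nodeA"))  # None
```

Notice that `nodeC` skips `m0`, the oldest task, in favor of `m1`, whose data it already holds. Locality is traded against strict submission order to avoid network transfers.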

Hadoop MapReduce

MapReduce is a software framework for processing large data sets in a distributed computing environment. It is a parallel programming model developed by Google as a mechanism for processing large amounts of raw data, e.g., web pages the search engine has crawled. This data is so large that it must be distributed across thousands of machines in order to be processed in a reasonable time.

Hadoop MapReduce suffered from severe performance problems. Today, this is becoming history: there are many techniques that can be used with Hadoop MapReduce jobs to boost performance by orders of magnitude. In this tutorial we teach such techniques. First, we will briefly familiarize the audience with Hadoop MapReduce.

The "Unit 2 Big Data" document provides an overview of Hadoop, an open-source framework for distributed processing of large datasets. It covers Hadoop's requirements and design principles and compares it with SQL and RDBMS.
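The web-crawl use case mentioned above can be sketched with an inverted index, one of the classic MapReduce examples. The code maps each word to the sorted list of page URLs that contain it. It uses a map phase, a simulated shuffle that groups intermediate pairs by key, and a reduce phase. The URLs, page texts, and function names are made up for illustration, and everything runs in a single process. On a real cluster, the framework would run many mappers and reducers in parallel and perform the shuffle across machines.

```python
import re
from collections import defaultdict
from typing import Iterator

# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "http://example.com/a": "Hadoop runs MapReduce jobs",
    "http://example.com/b": "MapReduce splits work into map and reduce",
    "http://example.com/c": "Hadoop stores data in HDFS",
}


def map_page(url: str, text: str) -> Iterator[tuple[str, str]]:
    """Map: emit (word, url) once per distinct lowercase word on the page."""
    for word in set(re.findall(r"[a-z0-9]+", text.lower())):
        yield word, url


def reduce_word(word: str, urls: list[str]) -> tuple[str, list[str]]:
    """Reduce: collect the pages containing `word` into a sorted posting list."""
    return word, sorted(set(urls))


def build_index(pages: dict[str, str]) -> dict[str, list[str]]:
    # Map phase: every page is processed independently.
    intermediate = [pair for url, text in pages.items() for pair in map_page(url, text)]
    # Shuffle phase (simulated): group intermediate values by key.
    groups: dict[str, list[str]] = defaultdict(list)
    for word, url in intermediate:
        groups[word].append(url)
    # Reduce phase: one call per distinct key.
    return dict(reduce_word(w, us) for w, us in groups.items())


if __name__ == "__main__":
    index = build_index(PAGES)
    print(index["hadoop"])     # ['http://example.com/a', 'http://example.com/c']
    print(index["mapreduce"])  # ['http://example.com/a', 'http://example.com/b']
```

Because each `map_page` call depends only on its own page and each `reduce_word` call only on its own key, both phases can be spread across many machines without coordination beyond the shuffle.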
