Gradient Descent Explained

Gradient descent is the core optimization algorithm for machine learning and deep learning models. Almost all modern AI architectures, including GPT-4, ResNet, and AlphaGo, rely on gradient descent or one of its variants to adjust their weights and improve prediction accuracy. In linear regression, for example, gradient descent minimizes the mean squared error (MSE), which serves as the loss function, to find the best-fit line. It iteratively updates the weights (coefficients) and bias by computing the gradient of the MSE with respect to these parameters and stepping in the opposite direction.
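The linear regression case above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `fit_line`, the learning rate, the step count, and the toy data are all assumptions chosen for the example.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start from an arbitrary initial guess
    n = len(xs)
    for _ in range(steps):
        # Prediction error of the current line on every data point.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) w.r.t. w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move each parameter a small step against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1 with no noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

On this noise-free data the fitted slope and intercept should end up very close to 2 and 1, the values used to generate the points.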

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate function: it repeatedly takes small steps in the direction of steepest descent, which is the negative of the gradient.

What is gradient descent? Gradient descent is an optimization algorithm commonly used to train machine learning models and neural networks. It trains them by minimizing the error between predicted and actual results, which amounts to finding a minimum of a cost function. In data science, gradient descent is used to refine the parameters (coefficients) of a model to minimize error.
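Written as a formula, one step of steepest descent looks like the following. The notation is an assumption introduced here for illustration: $\theta_t$ stands for the parameters after $t$ steps, $J$ for the cost function, and $\eta > 0$ for the step size (often called the learning rate).

$$
\theta_{t+1} = \theta_t - \eta \, \nabla J(\theta_t)
$$

The minus sign is what makes this a descent: the gradient $\nabla J(\theta_t)$ points in the direction of steepest increase, so subtracting a small multiple of it moves the parameters toward lower cost.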

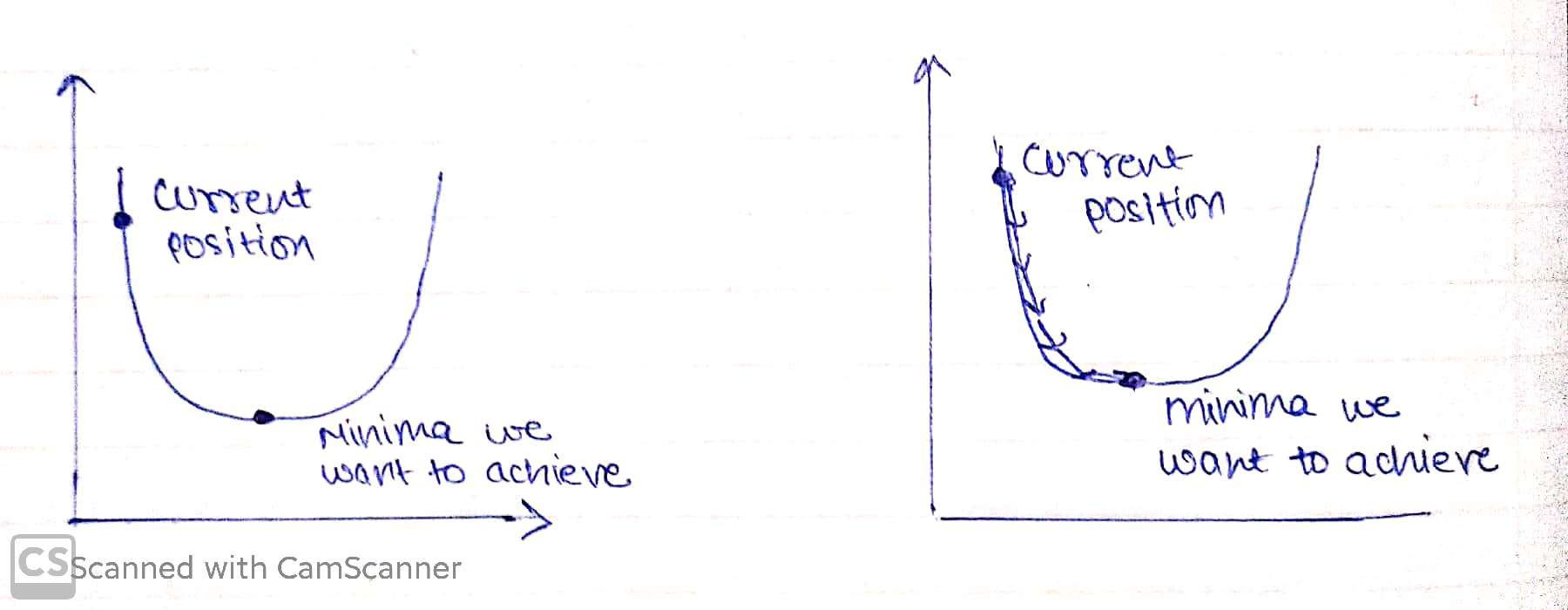

Gradient descent is an iterative optimization algorithm used to minimize a cost (or loss) function. It adjusts model parameters (weights and biases) step by step, moving in the direction of the negative gradient, to reduce the error in predictions and approach the optimal set of parameters. The cost function represents the discrepancy between the model's predicted output and the actual output.

In this article you'll learn what gradient descent is and which components make it up, and then explore a few modifications of it, from simple to advanced, to solidify your understanding.

Gradient descent is easiest to reason about on a convex function, where any local minimum is also the global minimum. In general, it tweaks the parameters iteratively to drive a given function toward a local minimum.
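To make the iterative procedure concrete, here is a small sketch of gradient descent on a convex function of two variables. The function `f`, its hand-derived gradient `grad_f`, the helper name `gradient_descent`, the starting point, and the step size are all assumptions picked for the example, not part of any particular library.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=200):
    """Repeatedly step against the gradient, starting from `start`."""
    point = list(start)
    for _ in range(steps):
        g = grad(point)
        # Update every coordinate: new = old - learning_rate * gradient.
        point = [p - learning_rate * gi for p, gi in zip(point, g)]
    return point


def f(p):
    """Convex bowl with its single minimum at (3, -1)."""
    x, y = p
    return (x - 3.0) ** 2 + 2.0 * (y + 1.0) ** 2


def grad_f(p):
    """Gradient of f, derived by hand."""
    x, y = p
    return [2.0 * (x - 3.0), 4.0 * (y + 1.0)]


minimum = gradient_descent(grad_f, start=[0.0, 0.0])
print(minimum, f(minimum))
```

Because `f` is convex, the iterates head toward its only minimum at (3, -1) regardless of the starting point, provided the step size is small enough. For this particular function a learning rate of 0.5 or more no longer converges, because the update along `y` overshoots the minimum on every step.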

