GPU Memory Understanding When Hosting a Model with TGI (Issue #955)

For example, will extra model instances be hosted if memory allows, and is there a way to get that information? You can use --cuda-memory-fraction to limit an instance's memory usage, and use --num-shard and CUDA_VISIBLE_DEVICES to control how an instance uses multiple cards.

I found that the easiest way to run the 34B model across both GPUs is to use TGI (Text Generation Inference) from Hugging Face.
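As a rough illustration of how those three settings fit together, the sketch below assembles a `docker run` command for a single TGI instance sharded across two GPUs. The helper name `build_tgi_command`, the model ID `your-org/your-34b-model`, the `latest` image tag, the port mapping, and the shared-memory size are all placeholders chosen for this example, not values taken from the discussion; only the flag and variable names come from the advice above.

```python
import shlex

def build_tgi_command(model_id, gpu_ids, cuda_memory_fraction=1.0, port=8080,
                      image="ghcr.io/huggingface/text-generation-inference:latest"):
    """Return a `docker run` argument list for one TGI instance (hypothetical helper)."""
    if not 0.0 < cuda_memory_fraction <= 1.0:
        raise ValueError("cuda_memory_fraction must be in (0, 1]")
    devices = ",".join(str(i) for i in gpu_ids)
    return [
        "docker", "run", "--gpus", "all", "--shm-size", "1g",
        # Restrict which cards this instance can see.
        "-e", f"CUDA_VISIBLE_DEVICES={devices}",
        "-p", f"{port}:80",
        image,
        "--model-id", model_id,
        # One shard per visible GPU.
        "--num-shard", str(len(gpu_ids)),
        # Cap the share of each visible GPU's memory the instance may claim.
        "--cuda-memory-fraction", str(cuda_memory_fraction),
    ]

# Placeholder model ID; substitute the model you actually serve.
cmd = build_tgi_command("your-org/your-34b-model", gpu_ids=[0, 1],
                        cuda_memory_fraction=0.9)
print(shlex.join(cmd))
```

Building the command as a list rather than a single string keeps quoting explicit, and the same helper can be called twice with different `gpu_ids` and `port` values if two instances need to share a machine.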

Running such a model with TGI's quantization flag really helps: quantize=bitsandbytes. The question is, is it possible to run the full, unquantized model using both GPU and CPU memory (48 + 32 = 80 GB)?

I'm trying to load the google/gemma-3-27b-it model using Hugging Face's Text Generation Inference (TGI) with Docker on a Windows Server machine equipped with 3 x NVIDIA RTX 3090 GPUs (24 GB VRAM each).

Large language models present unique memory challenges during inference, and TGI implements several specialized memory management strategies to address them. The KV cache is a critical component that stores the intermediate key and value tensors generated during the attention process.

I explore quantization considerations, GPU selection, and the popular inference toolkits TGI and vLLM, and include the Python code I use for querying models. I also evaluate performance, particularly tokens per second, and discuss other metrics, including latency and throughput.
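A back-of-the-envelope calculation helps explain why quantization matters on cards like these. The sketch below estimates weight memory from the parameter count and the KV cache cost per token; the layer count, KV head count, and head dimension are illustrative placeholders rather than the real configuration of any model named above, and the estimate ignores activations, CUDA context, and framework overhead.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of KV cache per token: one key and one value tensor per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value

params = 27e9                                # a 27B-parameter model
fp16_gb = weight_memory_gb(params, 2.0)      # 16-bit weights
int8_gb = weight_memory_gb(params, 1.0)      # 8-bit quantized weights
total_vram_gb = 3 * 24                       # three 24 GB cards

print(f"fp16 weights: {fp16_gb:.1f} GB of {total_vram_gb} GB")
print(f"int8 weights: {int8_gb:.1f} GB of {total_vram_gb} GB")

# Hypothetical architecture values, used only to show the formula.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128)
print(f"KV cache per token: {per_token / 1024:.0f} KiB")
```

Whatever remains after the weights are loaded has to hold the KV cache for every in-flight request, so halving the weight footprint frees memory for longer contexts or larger batches.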

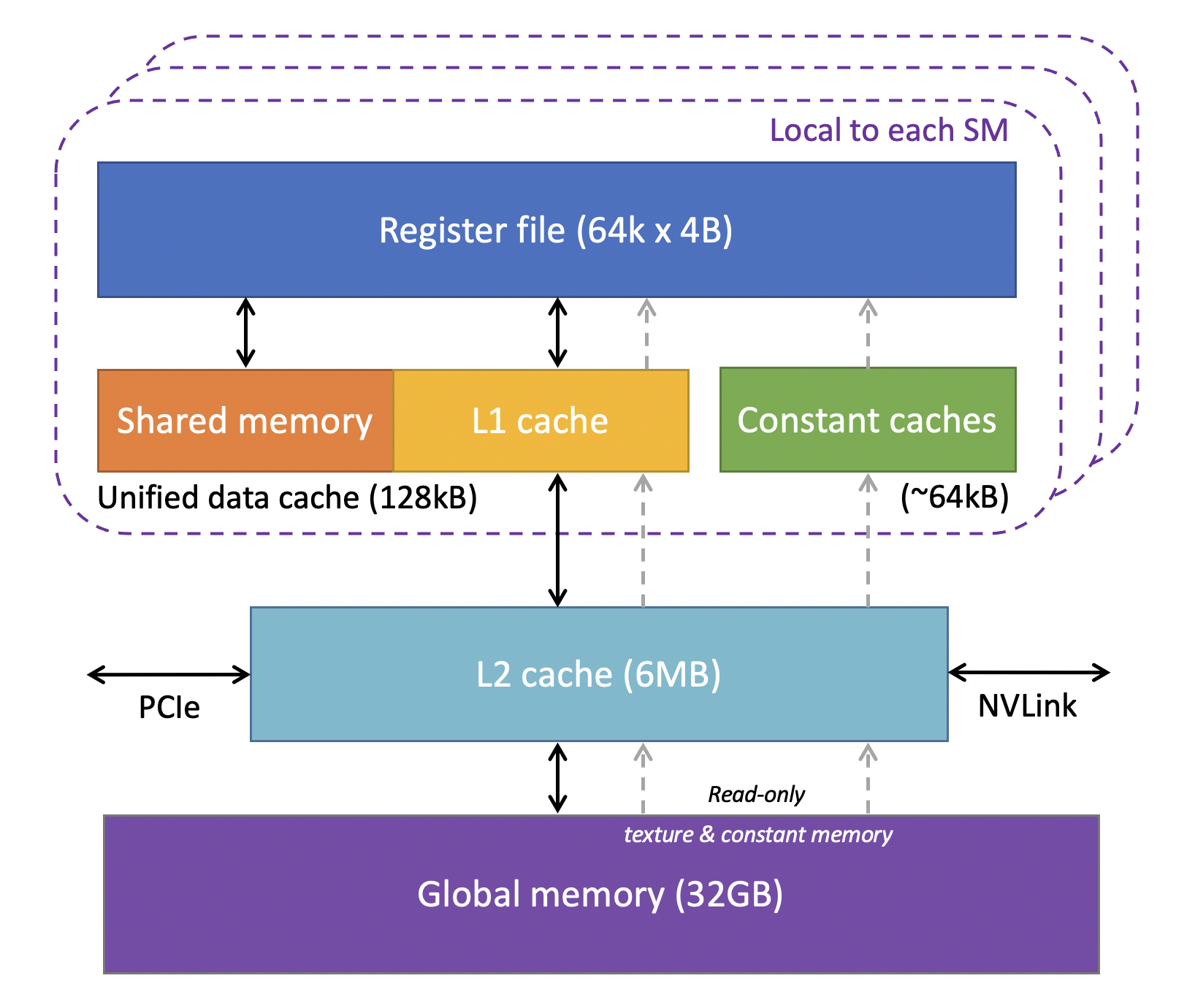

Initially, I thought simply running two TGI instances, each pointing to its respective model, would be a reasonable approach, but I'm wondering if my assumptions are correct. Any thoughts?

This is the correct way to go about it.

Learn best practices for optimizing large language model (LLM) inference and serving with GPUs on GKE by using quantization, tensor parallelism, and memory optimization.

In vLLM, a profile run is conducted before allocating the KV cache to separate the memory used for model inference from the memory needed for the KV cache.
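The idea behind the profile run can be sketched as simple arithmetic: measure the peak memory needed for weights and activations, then hand whatever is left of the allowed budget to the KV cache. The code below is an illustration of that reasoning, not vLLM's actual implementation; the function names and the example numbers are hypothetical, and `gpu_memory_utilization` is named after the vLLM setting that caps the fraction of GPU memory the engine may use.

```python
def kv_cache_budget_bytes(total_gpu_bytes, gpu_memory_utilization, profiled_peak_bytes):
    """Memory left for the KV cache after the profiled inference peak is reserved."""
    usable = total_gpu_bytes * gpu_memory_utilization
    return max(0, int(usable - profiled_peak_bytes))

def max_cached_tokens(budget_bytes, bytes_per_token):
    """How many tokens of keys and values fit in the budget."""
    return budget_bytes // bytes_per_token

GIB = 1024 ** 3
budget = kv_cache_budget_bytes(
    total_gpu_bytes=24 * GIB,        # one 24 GB card
    gpu_memory_utilization=0.9,      # leave 10% headroom
    profiled_peak_bytes=16 * GIB,    # assumed result of the profile run
)
# 131072 bytes per token is an assumed figure, not a measured one.
print(f"KV cache budget: {budget / GIB:.2f} GiB")
print(f"Tokens that fit: {max_cached_tokens(budget, 131072)}")
```

If the profiled peak exceeds the allowed share of memory, the budget clamps to zero, which corresponds to the case where the model cannot be served on that card without quantization or sharding.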
