GPT-2 vs GPT-3: The OpenAI Showdown

GPT-3 is the clear winner over its predecessor, GPT-2, thanks to its more robust performance and significantly more parameters, trained on text with a wider variety of … Both GPT-2 and GPT-3 were developed by OpenAI, and both are pre-trained. GPT-2's code and weights are openly available, which allows researchers and developers to use them in their own projects; GPT-3's weights were not released, and the model is accessed through OpenAI's API.

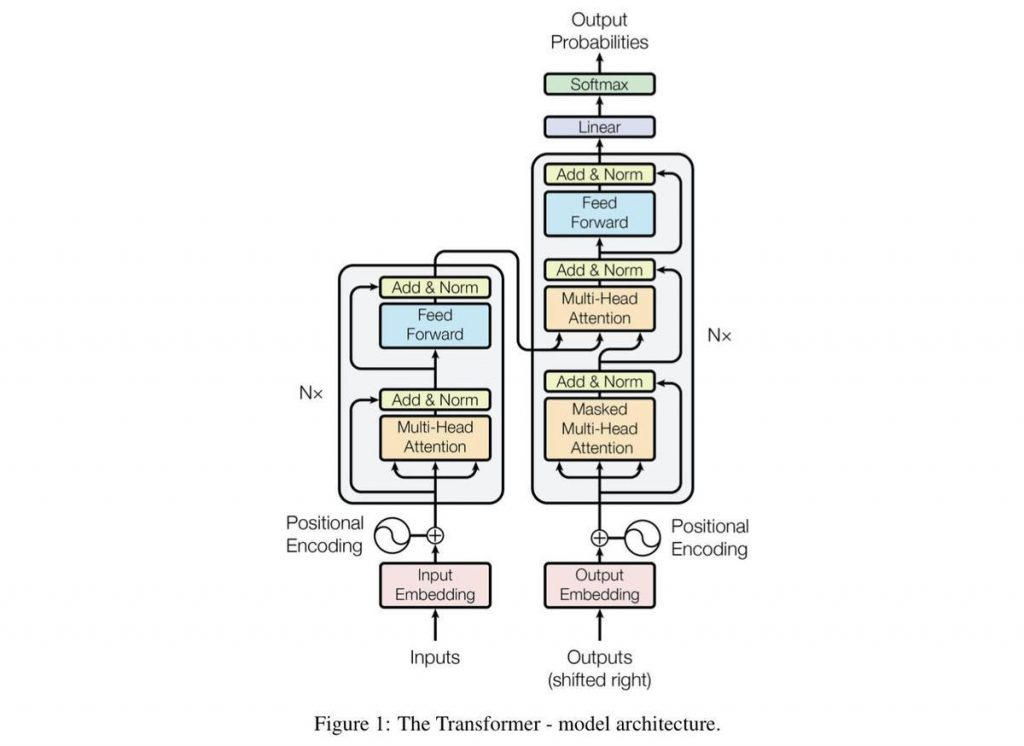

GPT-2 and GPT-3 share the same underlying design: both are generative pre-trained transformers (GPT). "Transformer" is just a funny name for an architecture built on self-attention. Both are language models, that is to say, they predict the next words from a text seed. GPT-3 has been trained on a lot more textual data, and with fewer bugs, than GPT-2.

In this review, I will summarize the two key papers by OpenAI that have motivated much of the recent R&D around Notional.ai by East Agile. OpenAI's language models GPT-2 and GPT-3 have revolutionized natural language processing (NLP) technology through their transformer-based architecture.

OpenAI released GPT-2 in 2019 as an open-source language model with 1.5 billion parameters, trained to predict the next word of any sentence. Through deep learning, it picks up the context it needs to generate human-like text.

In this article, we will look closely at GPT-3 and GPT-2, exploring their architectures, capabilities, practical applications, limitations, and implications for the future of AI.
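To make "predicting the next words from a text seed" concrete, here is a minimal sketch of the generation loop. It is not how GPT-2 or GPT-3 work internally: a simple bigram counter stands in for the transformer, and the corpus and the function names `train_bigram` and `generate` are made up for illustration. What it does share with the GPT models is the autoregressive pattern: predict one word, append it, and predict again.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, seed, max_new_words=5):
    """Greedily extend the seed one word at a time (autoregressive decoding)."""
    output = seed.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(output[-1])
        if not candidates:  # the last word was never followed by anything
            break
        # Pick the continuation seen most often after the last word.
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Toy training text (an assumption, chosen only to keep the example small).
corpus = "the cat sat on the mat and the cat sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the", max_new_words=4))  # the cat sat on the
```

A real GPT model replaces the lookup table with a transformer that scores every token in its vocabulary given the whole preceding context, and it usually samples from those scores rather than always taking the top one.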

OpenAI just released their new open-weight LLMs this week: gpt-oss-120b and gpt-oss-20b, the first large, fully open-weight models the company has shared since GPT-2 in 2019. And yes, thanks to some clever optimizations, they can run locally (but more about this later).

GPT-2 and GPT-3 are two of the most popular natural language processing (NLP) models available today. While they share some similarities, there are also key differences between them that can make a big difference in how you use them. Both were developed by OpenAI, but the leap between them is monumental: GPT-2 has 1.5 billion parameters, which was groundbreaking in 2019, while GPT-3 jumped to 175 billion parameters, over 100 times as many.

In this article, we will explore the evolution of language models, their significance in NLP tasks, and the three generations of GPT models: GPT-1, GPT-2, and GPT-3. We will examine their features, limitations, use cases, and real-world impact.
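As a quick check on the size gap, the sketch below works out the ratio between the two parameter counts quoted above and a rough size for the weights alone. The 2 bytes per parameter figure is an assumption (16-bit weights), and the helper `weight_size_gb` is a name invented for this example; actual memory use also depends on the precision used and on everything besides the weights.

```python
GPT2_PARAMS = 1.5e9   # parameter count quoted above for GPT-2
GPT3_PARAMS = 175e9   # parameter count quoted above for GPT-3
BYTES_PER_PARAM = 2   # assumption: weights stored as 16-bit numbers


def weight_size_gb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Rough size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


ratio = GPT3_PARAMS / GPT2_PARAMS
print(f"GPT-3 has about {ratio:.0f}x the parameters of GPT-2")
print(f"GPT-2 weights: ~{weight_size_gb(GPT2_PARAMS):.0f} GB")
print(f"GPT-3 weights: ~{weight_size_gb(GPT3_PARAMS):.0f} GB")
```

Note that this ratio describes parameter count only. It says nothing directly about how much better the larger model performs on a given task.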

By James Montantes
