GitHub tgautam03/Transformers: A Gentle Introduction to Transformers

A transformer neural network written from scratch and trained on the IMDB dataset. For more details, check out the code in classification.ipynb. For a detailed explanation, please watch the video associated with this repository. Tushar Gautam (tgautam03): "What I cannot create, I do not understand." Richard Feynman

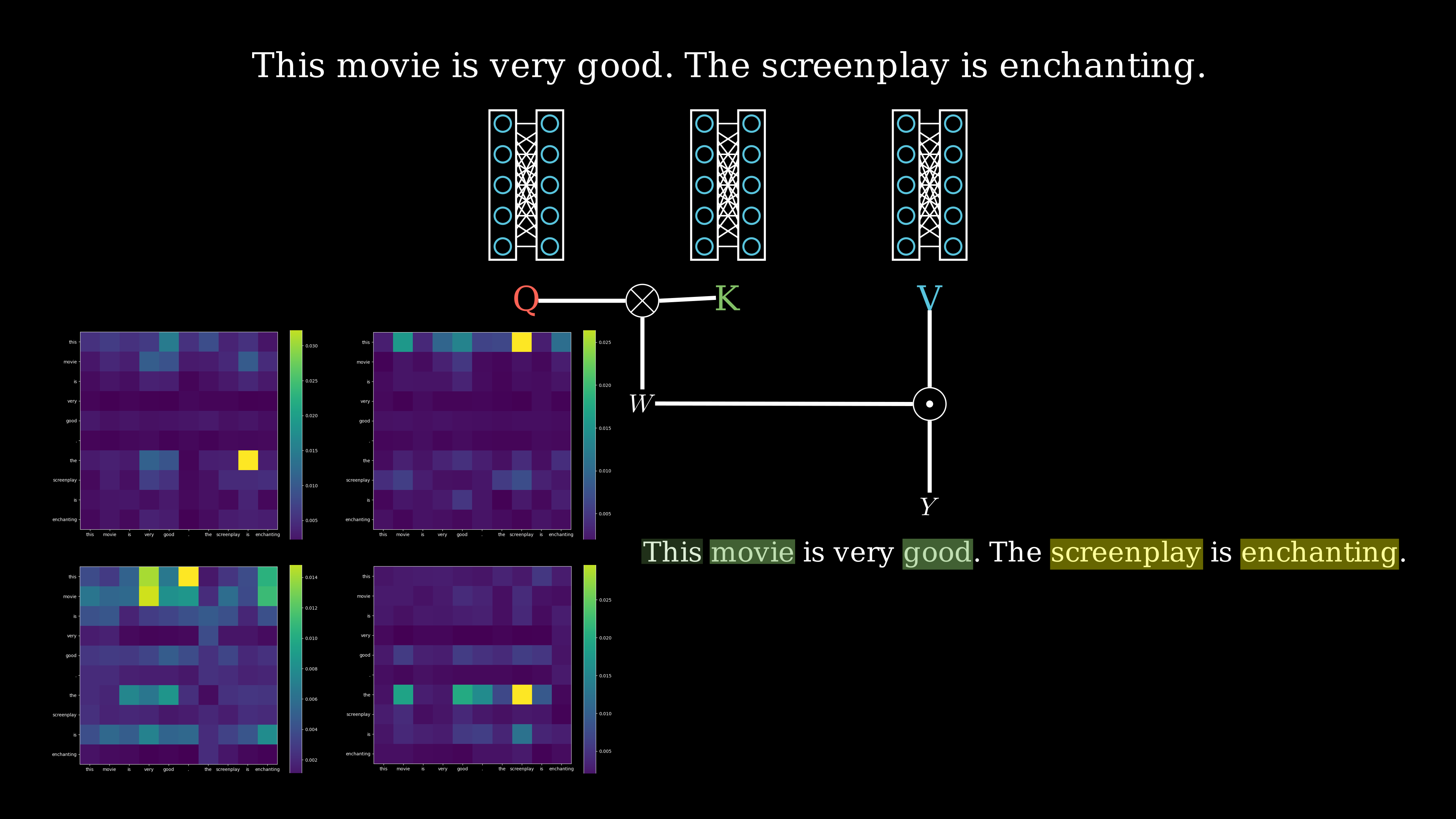

I find most transformer posts are heavily focused on what computations are involved, walking through each tensor transformation and how you might see it in code. Here we introduce the basic concepts of transformers and present the key techniques behind the recent advances of these models. This includes a description of the standard transformer architecture, a series of model refinements, and common applications.

So, here's a relatively non-technical introduction to transformers and what the big deal about them is. BERT (Bidirectional Encoder Representations from Transformers) jointly encodes the right and left context of a word in a sentence to improve the learned feature representations.

Our brains are made of neurons! Let's play a game! There is an input layer, a hidden layer, and an output layer. You just learned what feedforward is! Wrinkles? Not in. NYC! Sprinkles? Got in. NYC! But how does the model learn? This is essentially backpropagation!
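To make the feedforward and backpropagation idea concrete, here is a minimal sketch in plain Python. It is not the repository's code. The layer sizes, the sigmoid activation, the squared-error loss, the learning rate, and the names `sigmoid`, `forward`, `train_step`, and `loss` are all assumptions chosen for illustration, and biases are left out to keep it short.

```python
import math
import random


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Feedforward pass: input layer -> hidden layer -> output layer."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def loss(x, target, w_hidden, w_out):
    """Squared error between the network output and the target."""
    _, output = forward(x, w_hidden, w_out)
    return 0.5 * (output - target) ** 2


def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One backpropagation step followed by a gradient-descent update."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit (chain rule through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Push the error back to each hidden unit, using the old output weights.
    delta_hidden = [
        delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
        for j in range(len(hidden))
    ]
    new_w_out = [w_out[j] - lr * delta_out * hidden[j] for j in range(len(hidden))]
    new_w_hidden = [
        [w_hidden[j][i] - lr * delta_hidden[j] * x[i] for i in range(len(x))]
        for j in range(len(hidden))
    ]
    return new_w_hidden, new_w_out


# Hypothetical toy setup: 2 inputs, 3 hidden units, 1 output, one training example.
random.seed(0)
x, target = [1.0, 0.0], 1.0
w_hidden = [[random.uniform(-1, 1) for _ in x] for _ in range(3)]
w_out = [random.uniform(-1, 1) for _ in range(3)]

before = loss(x, target, w_hidden, w_out)
for _ in range(100):
    w_hidden, w_out = train_step(x, target, w_hidden, w_out)
after = loss(x, target, w_hidden, w_out)
print(f"loss before: {before:.4f}, loss after: {after:.4f}")
```

The forward pass is the "feedforward" part: values flow from the inputs through the hidden layer to the output. The training step is the "learning" part: the error at the output is passed backwards to work out how each weight should change, so the loss after training should be lower than before.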

