Generate Images Using Stable Diffusion 2.1 With Hugging Face Pipelines

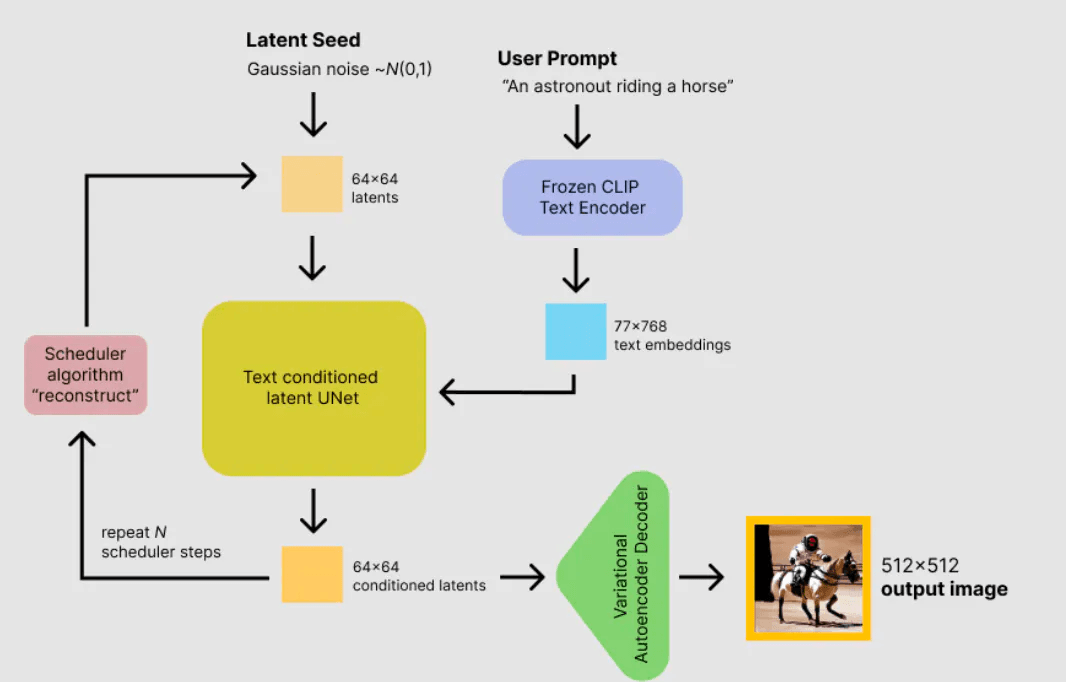

Stable Diffusion is a text-to-image latent diffusion model created by researchers and engineers from CompVis, Stability AI, and LAION. Latent diffusion runs the diffusion process in a lower-dimensional latent space instead of pixel space, which reduces memory use and compute cost. In this video, you will learn to generate your own realistic images for free using a Hugging Face pipeline.
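To make the latent-space idea concrete, here is a minimal sketch. It assumes the usual Stable Diffusion layout of 4 latent channels and an 8x spatial downsampling by the VAE, and it assumes the checkpoint is published on the Hub as `stabilityai/stable-diffusion-2-1` (the model id, the `generate` helper and its defaults are illustrative assumptions, not something the text above specifies).

```python
def latent_shape(height: int, width: int, vae_scale: int = 8, channels: int = 4):
    """Shape of the latent tensor the denoiser works on for one image.

    Assumes the common Stable Diffusion setup: 4 latent channels, 8x downsampling.
    """
    if height % vae_scale or width % vae_scale:
        raise ValueError("height and width must be divisible by vae_scale")
    return (channels, height // vae_scale, width // vae_scale)


def compression_ratio(height: int, width: int) -> float:
    """How many times fewer values the latent holds than the RGB image."""
    c, h, w = latent_shape(height, width)
    return (3 * height * width) / (c * h * w)


def generate(prompt: str, model_id: str = "stabilityai/stable-diffusion-2-1", steps: int = 25):
    """Run text-to-image generation and return a PIL image.

    The default model_id is an assumption; swap in whichever checkpoint you use.
    """
    # Imported lazily so the helpers above work without torch/diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    use_cuda = torch.cuda.is_available()
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id,
        # Half precision only makes sense on a GPU; fall back to float32 on CPU.
        torch_dtype=torch.float16 if use_cuda else torch.float32,
    )
    pipe = pipe.to("cuda" if use_cuda else "cpu")
    return pipe(prompt, num_inference_steps=steps).images[0]
```

Under these assumptions a 768x768 image is denoised as a 4x96x96 latent, roughly 48 times fewer values than the RGB pixels. Calling `generate("a watercolor lighthouse at dawn").save("out.png")` would download the weights on first use and is slow without a GPU.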

Learn how to generate images using Stable Diffusion through a Hugging Face pipeline built on PyTorch for text-to-image and text-to-video generation. Learn how to generate similar images with depth estimation (depth2img) using Stable Diffusion with the Hugging Face Diffusers and Transformers libraries in Python. This notebook shows how to create a custom Diffusers pipeline for text-guided image-to-image generation with the Stable Diffusion model using the 🤗 Hugging Face 🧨 Diffusers library. Stochastic differential editing (SDEdit) is an image synthesis and editing method based on a diffusion model generative prior; it synthesizes realistic images by iteratively denoising through a stochastic differential equation (SDE).
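A rough sketch of text-guided image-to-image generation in the SDEdit style follows: the input image is partially noised, then denoised under the prompt. The `denoising_steps` helper reflects my understanding of how Diffusers img2img pipelines shorten the schedule according to `strength`; treat that formula, the default model id, and the `img2img` helper itself as assumptions rather than the notebook's actual code.

```python
def denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given strength.

    strength=0.0 keeps the input image untouched; strength=1.0 noises it fully,
    so the result depends on the prompt alone.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return min(int(num_inference_steps * strength), num_inference_steps)


def img2img(init_image, prompt: str, strength: float = 0.75, steps: int = 50,
            model_id: str = "stabilityai/stable-diffusion-2-1"):
    """Edit a PIL image under a text prompt and return the new PIL image.

    The default model_id is an assumption; use any Stable Diffusion checkpoint.
    """
    # Imported lazily so denoising_steps works without torch/diffusers installed.
    import torch
    from diffusers import StableDiffusionImg2ImgPipeline

    use_cuda = torch.cuda.is_available()
    pipe = StableDiffusionImg2ImgPipeline.from_pretrained(
        model_id,
        torch_dtype=torch.float16 if use_cuda else torch.float32,
    ).to("cuda" if use_cuda else "cpu")
    result = pipe(prompt=prompt, image=init_image, strength=strength,
                  num_inference_steps=steps)
    return result.images[0]
```

Lower `strength` values stay closer to the input image and run fewer steps; higher values give the prompt more influence. For the depth2img variant mentioned above, Diffusers provides a separate `StableDiffusionDepth2ImgPipeline` class.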

In this notebook we demonstrate how to use free models such as Stable Diffusion 2 from the Hugging Face Hub to generate images. The example code shown here is modified from this source.

Recently, they have expanded to include the ability to generate images directly from text descriptions, prominently featuring models like Stable Diffusion. In this article, we will explore how to use the Stable Diffusion XL base model to transform textual descriptions into vivid images.

Textual inversion embeddings can be loaded into the text encoder of Stable Diffusion pipelines. Both the Diffusers and Automatic1111 formats are supported.

In this blog post, we will explore the installation and implementation of the Stable Diffusion image-to-image pipeline using the Hugging Face Diffusers library, SageMaker notebooks, and private or public image data from Roboflow.
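As an illustration of loading a textual inversion embedding, here is a hedged sketch using the `load_textual_inversion` method that Diffusers exposes on Stable Diffusion pipelines. The helper names, and any model or concept ids you pass in, are hypothetical; the embedding has to be trained for the same text encoder as the base checkpoint.

```python
def concept_prompt(token: str, scene: str) -> str:
    """Build a prompt that references the learned placeholder token."""
    return f"a {token} {scene}"


def load_pipeline_with_embedding(model_id: str, embedding_source: str):
    """Load a Stable Diffusion pipeline and add one textual inversion embedding.

    model_id: Hub id or local path of the base checkpoint (caller's choice).
    embedding_source: Hub repo or local file holding the embedding, in
        Diffusers or Automatic1111 format.
    """
    # Imported lazily so concept_prompt works without torch/diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    use_cuda = torch.cuda.is_available()
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id,
        torch_dtype=torch.float16 if use_cuda else torch.float32,
    ).to("cuda" if use_cuda else "cpu")
    # Registers the new token with the tokenizer and text encoder.
    pipe.load_textual_inversion(embedding_source)
    return pipe
```

A call might look like `pipe = load_pipeline_with_embedding(base_model, "sd-concepts-library/cat-toy")` followed by `pipe(concept_prompt("<cat-toy>", "on a beach")).images[0]`, where the concept repo and its `<cat-toy>` token are examples I am assuming, not ones named in the text.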
