Firefox Labs: Run LLM Models Within the Firefox Browser (OpenAI, Google Gemini, Hugging Face, Mistral, Claude)

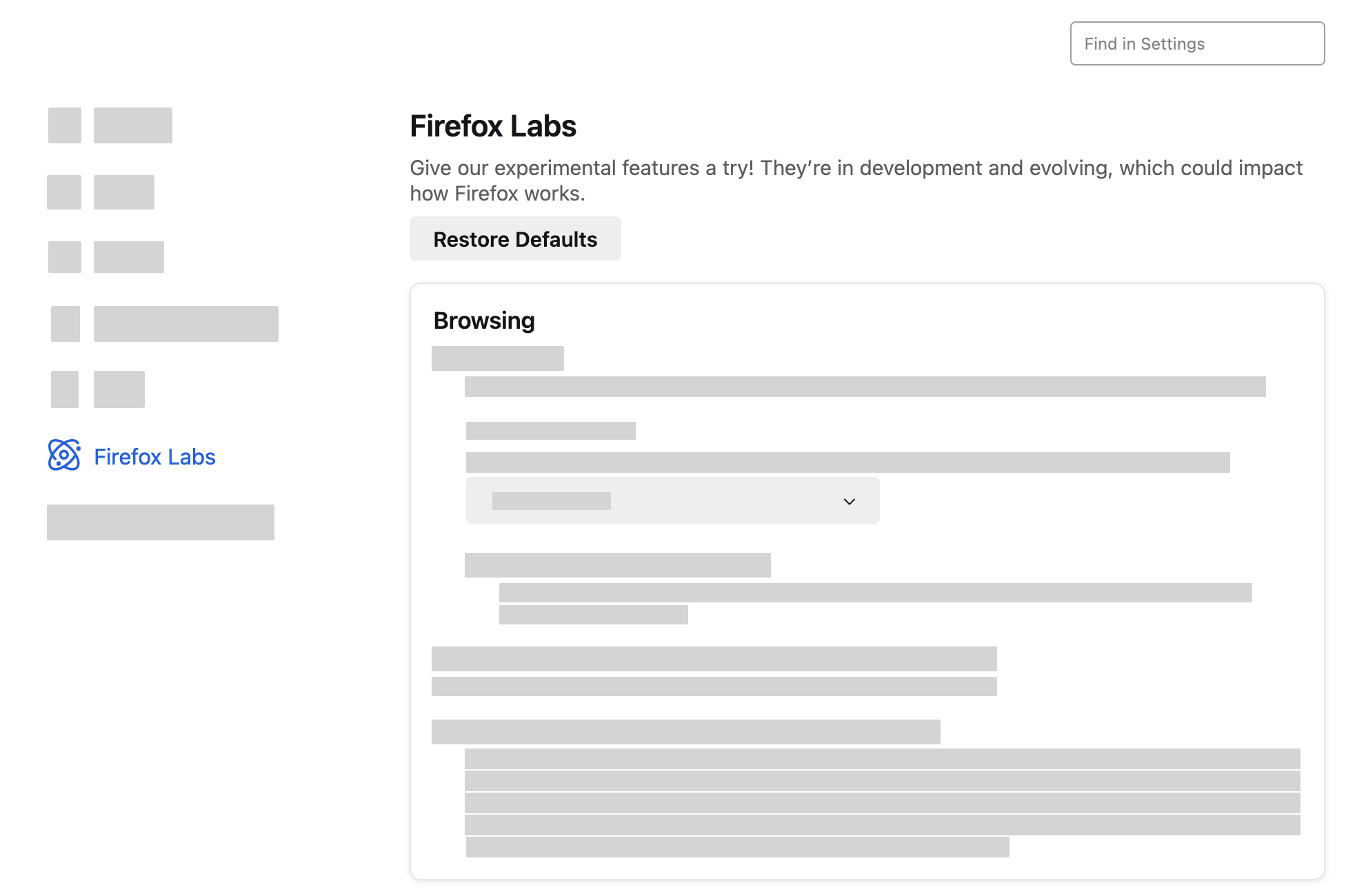

Firefox Labs: Explore Experimental Features in Firefox (Firefox Help)

Download Multi LLM AI Chat for Firefox. Multi LLM AI Chat lets you chat with local Ollama models and cloud AI APIs including OpenAI, Anthropic, Google Gemini, Groq, xAI (Grok), DeepSeek, OpenRouter, Perplexity, Together AI, and Zhipu AI (GLM), plus DALL·E image generation.

In-Browser LLM App in Pure Python: Gemini Nano and Gradio-Lite

I'm looking to run an open-source AI model locally and came across Ollama and llamafile. Since Privacy Guides recommends Ollama, I assume it's well supported, but I also found llamafile, which focuses on portability and performance.

WebLLM is an open-source project that enables running large language models entirely in the browser using WebGPU. This means you can execute LLMs like Llama 3, Mistral, and Gemma locally on your machine without requiring API calls to external servers.

Discover how to run large language models directly in your browser, enhancing privacy and performance while eliminating API dependencies. AI researchers never stop trying to push the envelope on what is possible with machine learning.

A new Firefox extension called Page Assist enables users to interact with Ollama, a tool for running large language models (LLMs) locally, through a browser-based interface rather than the command line.
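
For readers curious what a front end to a local Ollama instance does under the hood, here is a minimal sketch of the kind of HTTP request involved. It assumes Ollama is running on its default local address and that a model tagged `llama3` has already been pulled; the helper names `build_generate_request` and `ask_ollama` are hypothetical and are not taken from Page Assist or any other extension.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # assumption: this model tag has been pulled locally
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # With stream=False, the generated text is in the "response" field.
    return body["response"]


# Example (requires a running Ollama server):
#   print(ask_ollama("Summarize what WebGPU is in one sentence."))
```

Because the request goes to `localhost`, the prompt and the reply never leave the machine, which is the privacy benefit the snippets above describe.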

Google Gemini AI: Artificial Intelligence LLM Language Model (Editorial)

This is a browser-based LLM chat application that runs AI models directly in your browser using WebGPU, presented with a PC-themed desktop interface. All processing happens locally on your device; no server is required.

Large language models (LLMs) are at the heart of natural-language AI tools like ChatGPT, and WebLLM shows it is now possible to run an LLM directly in a browser.

Imagine building lightweight AI-powered applications that run entirely in a web browser on an AI PC. These apps reduce server costs, enhance privacy, and even work offline.

How to deploy Google's Gemma 2 2B instruction-tuned model on Cloud Run using vLLM as an inference engine, and how to invoke the backend service to do sentence completion.
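
As a rough illustration of invoking such a backend for sentence completion, the sketch below builds a request for the OpenAI-compatible `/v1/completions` endpoint that vLLM's server exposes. The service URL is a placeholder, the model ID `google/gemma-2-2b-it` assumes the server was started with that Hugging Face model, and the helper names are hypothetical. A real Cloud Run service may also require an identity token in an `Authorization` header, which is omitted here.

```python
import json
import urllib.request


def build_completion_request(
    base_url: str,
    prompt: str,
    model: str = "google/gemma-2-2b-it",  # assumption: the model the server loaded
    max_tokens: int = 64,
) -> urllib.request.Request:
    """Build (but do not send) a request for an OpenAI-compatible completions endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0.7,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_completion(response_body: dict) -> str:
    """Return the text of the first choice from a completions response."""
    return response_body["choices"][0]["text"]


def complete_sentence(base_url: str, prompt: str) -> str:
    """Send the prompt to the backend and return the completed text."""
    request = build_completion_request(base_url, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_completion(json.load(response))


# Example (placeholder URL; replace with your own service address):
#   print(complete_sentence("https://your-service.example", "The sky is"))
```

Keeping request construction and response parsing in separate functions makes each piece easy to check without a deployed service.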
