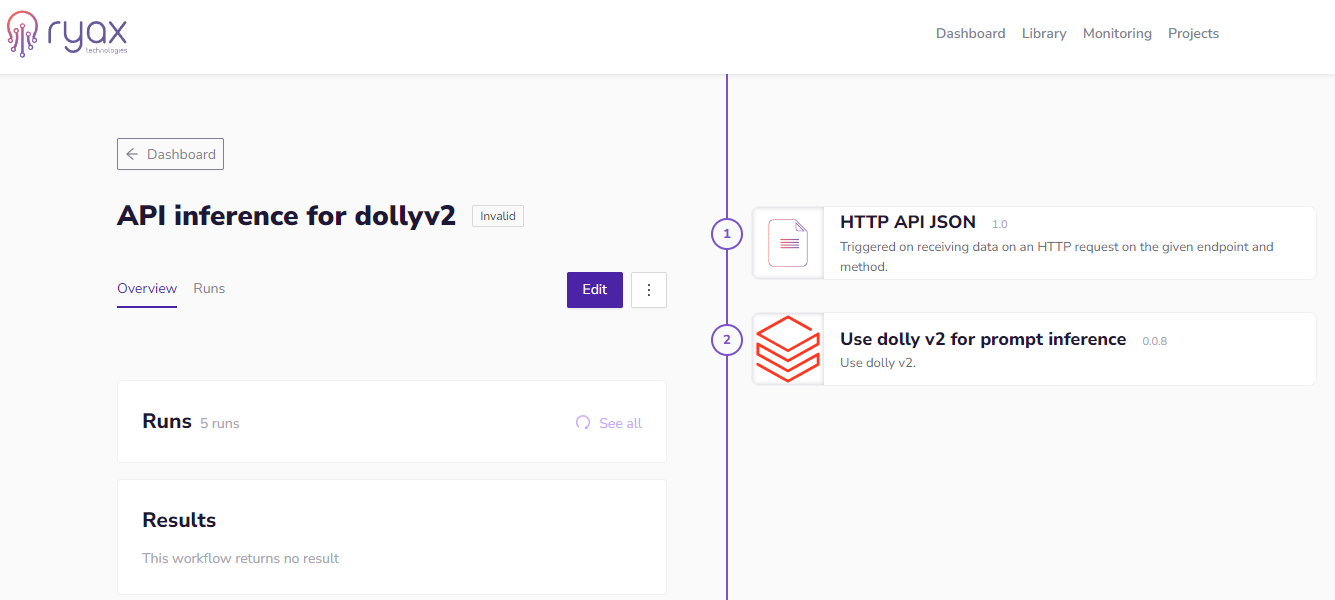

Fine-Tuning of Your LLM with Your Data - Ryax Technologies

Fine-tuning requires far more memory than running the model. During inference, you can estimate memory requirements by multiplying the parameter count by the number of bytes each parameter occupies at its precision (for example, 2 bytes for 16-bit floats). The fine-tuning process with LoRA includes integrating LoRA layers, freezing the original parameters, training on the dataset, and validating the model to ensure task-specific adaptation and performance.
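
The inference rule of thumb above is simple arithmetic. The sketch below is a minimal illustration, assuming decimal gigabytes (1 GB = 10^9 bytes) and counting weights only (no KV cache, activations, or framework overhead). The helper `adam_finetune_memory_gb` is a hypothetical illustration of why fine-tuning costs more: with the Adam optimizer, every parameter also needs a gradient and two moment estimates, here assumed to be stored at the same precision as the weights.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,
    "bf16": 2,
    "int8": 1,
    "int4": 0.5,
}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Memory (decimal GB) needed just to hold the weights for inference."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def adam_finetune_memory_gb(n_params: float, precision: str) -> float:
    """Rough lower bound for full fine-tuning with Adam.

    Assumption: weights, gradients and Adam's two moment estimates are all
    kept at `precision`, i.e. 4 copies of the parameter tensor.
    Activations and framework overhead are ignored.
    """
    return 4 * weight_memory_gb(n_params, precision)


if __name__ == "__main__":
    # Example: a 7-billion-parameter model.
    for precision in ("fp32", "fp16", "int8", "int4"):
        print(f"7B weights @ {precision}: {weight_memory_gb(7e9, precision):.1f} GB")
    print(f"7B full fine-tune @ fp16 (lower bound): {adam_finetune_memory_gb(7e9, 'fp16'):.1f} GB")
```

For a 7-billion-parameter model this gives about 14 GB for the weights at 16-bit precision and at least 56 GB for full fine-tuning under the same-precision assumption; mixed-precision training that keeps 32-bit optimizer states needs more.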

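The four LoRA steps listed above can be illustrated on a toy problem. This is a minimal, dependency-free sketch, not a production implementation: it uses the common LoRA parameterization W + (alpha / r) * B @ A with B initialized to zero, while the names `LoRALinear` and `run_demo`, the toy rank-1 task, the rank `r=2`, and the learning rate are all hypothetical choices made for illustration.

```python
import random

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update.

    Effective weight: W + (alpha / r) * B @ A, with A: r x d_in, B: d_out x r.
    """

    def __init__(self, weight: Matrix, r: int, alpha: float, rng: random.Random):
        self.weight = weight  # original parameters: frozen, never updated below
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / r
        # Common LoRA init: A small random, B zero, so training starts at the base model.
        self.A = [[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x: Vector) -> tuple[Vector, Vector]:
        ax = matvec(self.A, x)       # project input down to rank r
        delta = matvec(self.B, ax)   # project back up to d_out
        base = matvec(self.weight, x)
        return [b + self.scale * d for b, d in zip(base, delta)], ax

    def sgd_step(self, x: Vector, target: Vector, lr: float) -> None:
        """One SGD step on 0.5 * ||y - target||^2 that updates only A and B."""
        y, ax = self.forward(x)
        err = [yi - ti for yi, ti in zip(y, target)]  # dL/dy
        # Gradient w.r.t. A needs B^T err, computed before B is modified.
        bt_err = [sum(self.B[i][j] * err[i] for i in range(len(err))) for j in range(len(ax))]
        for i, e in enumerate(err):
            for j, a in enumerate(ax):
                self.B[i][j] -= lr * self.scale * e * a
        for j, g in enumerate(bt_err):
            for k, xk in enumerate(x):
                self.A[j][k] -= lr * self.scale * g * xk


def mean_loss(layer: LoRALinear, data) -> float:
    """Average 0.5 * squared error over (x, target) pairs."""
    total = 0.0
    for x, t in data:
        y, _ = layer.forward(x)
        total += 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return total / len(data)


def run_demo(seed: int = 0):
    rng = random.Random(seed)
    d, r = 4, 2
    # Hypothetical "pretrained" weights; the task's ideal weights differ by a rank-1 term.
    base = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(d)]
    u = [rng.uniform(-1, 1) for _ in range(d)]
    v = [rng.uniform(-1, 1) for _ in range(d)]
    true_w = [[base[i][j] + u[i] * v[j] for j in range(d)] for i in range(d)]

    def sample(n):
        xs = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
        return [(x, matvec(true_w, x)) for x in xs]

    train, val = sample(40), sample(20)
    frozen_copy = [row[:] for row in base]

    # Step 1: integrate a LoRA layer around the base weights.
    layer = LoRALinear(base, r=r, alpha=r, rng=rng)
    before = mean_loss(layer, val)
    # Steps 2-3: keep the base weights frozen and train only the adapters.
    for _ in range(300):
        for x, t in train:
            layer.sgd_step(x, t, lr=0.02)
    # Step 4: validate on held-out data.
    after = mean_loss(layer, val)
    return before, after, layer.weight == frozen_copy


if __name__ == "__main__":
    before, after, frozen_ok = run_demo()
    print(f"val loss before: {before:.4f}, after: {after:.4f}, base frozen: {frozen_ok}")
```

In real models the same idea is applied to selected weight matrices of a transformer, and libraries such as Hugging Face PEFT package this pattern so that only the small A and B matrices need gradients and optimizer state.
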
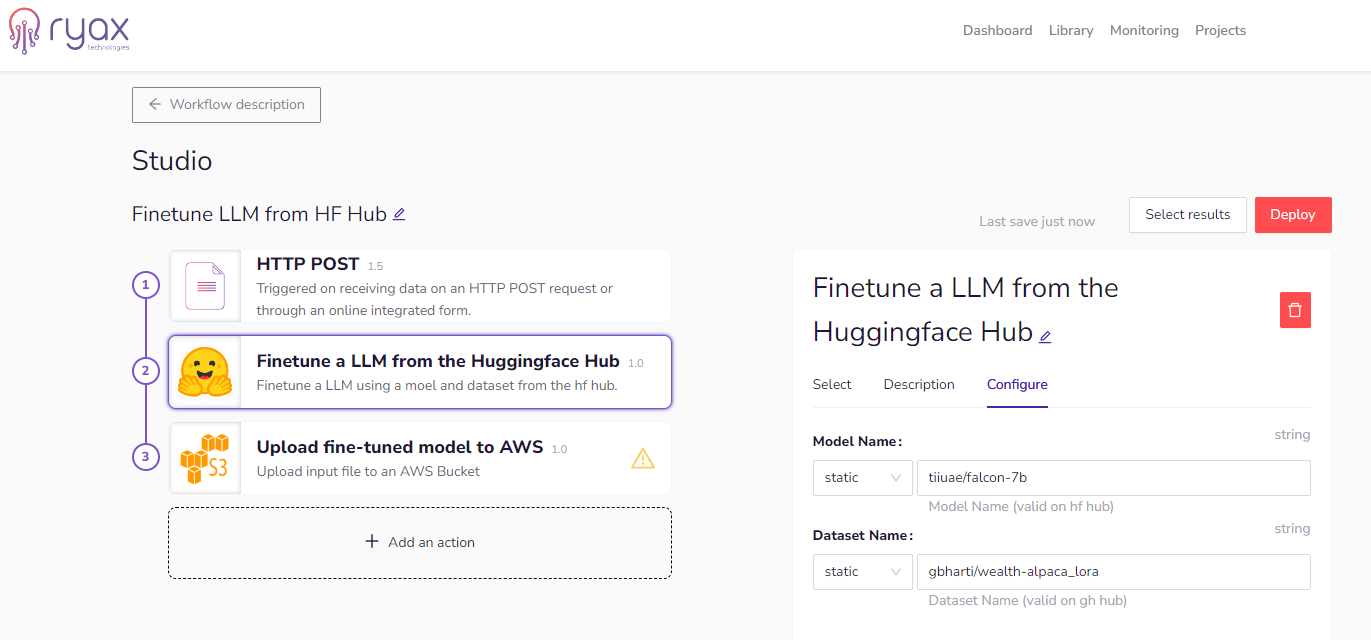

The technical innovation: how TAO reinvents LLM fine-tuning

At its core, TAO shifts the paradigm of how developers personalize models for specific domains. These capabilities include GenAI evaluation tools for use-case-specific benchmarks, streamlined LLM fine-tuning workflows, and advanced named entity recognition (NER) for PDFs, all of which…

"Augmented fine-tuning will generally make the model fine-tuning process more expensive, because it requires an additional step of ICL to augment the data, followed by fine-tuning," Lampinen said. In other cases, fine-tuning an existing LLM will be faster and more cost-efficient.

2. Data Quality and Quantity: To effectively train a domain-specific LLM, you need a lot of high-quality, niche…
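
The two-stage cost described in the quote can be made concrete with a small sketch. Everything here is hypothetical: `generate` stands in for any LLM text-generation call, the rephrasing instruction is illustrative, and this is not the exact augmentation procedure used in the research being quoted. It only shows where the extra cost comes from: one additional in-context generation call per training example, and a larger dataset for the subsequent fine-tuning step.

```python
from typing import Callable

Example = dict[str, str]


def augment_with_icl(
    examples: list[Example],
    generate: Callable[[str], str],
    instruction: str = "Restate the following fact in a different way:",
) -> list[Example]:
    """Extra ICL step: put each training document in the prompt and keep
    the model's output as an additional training example."""
    augmented = []
    for ex in examples:
        prompt = f"{instruction}\n\n{ex['text']}"
        augmented.append({"text": generate(prompt)})
    return augmented


def build_augmented_dataset(
    examples: list[Example], generate: Callable[[str], str]
) -> list[Example]:
    """Original examples plus model-generated ones; this combined set is what
    would then be passed to the (unchanged) fine-tuning step."""
    return examples + augment_with_icl(examples, generate)


if __name__ == "__main__":
    calls = []

    def fake_generate(prompt: str) -> str:
        # Stand-in for a real LLM call; echoes the last prompt line so the demo runs offline.
        calls.append(prompt)
        return "Rephrased: " + prompt.splitlines()[-1]

    docs = [{"text": "Toy fact A."}, {"text": "Toy fact B."}]
    dataset = build_augmented_dataset(docs, fake_generate)
    print(len(dataset), "training examples;", len(calls), "extra generation calls")
```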

- Supports over 70 open-source LLM backbone models on Hugging Face.
- Customizable training settings for flexible fine-tuning.
- Compatible with GIGABYTE AI TOP series hardware for enhanced performance.

We implement a scalable and efficient database infrastructure to support analysis, and we detail our preprocessing pipeline, which enforces data quality before database insertion. The resulting dataset comprises…
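
A preprocessing step that enforces data quality before database insertion might look like the sketch below. The source does not describe its actual pipeline, so the quality rules (a minimum word count and a mostly-printable-characters check), the `documents` table schema, and the function names are hypothetical; SQLite is used only to keep the example self-contained.

```python
import hashlib
import sqlite3


def clean(text: str) -> str:
    """Normalize whitespace runs to single spaces and trim the ends."""
    return " ".join(text.split())


def passes_quality_checks(text: str, min_words: int = 5) -> bool:
    """Illustrative filters only: minimum length and mostly printable content."""
    if len(text.split()) < min_words:
        return False
    printable = sum(ch.isprintable() for ch in text)
    return printable / len(text) > 0.95


def ingest(conn: sqlite3.Connection, records: list[str]) -> int:
    """Clean, filter and de-duplicate records, then insert survivors.

    Returns the number of rows actually inserted.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS documents ("
        " sha256 TEXT PRIMARY KEY,"
        " text TEXT NOT NULL)"
    )
    inserted = 0
    for raw in records:
        text = clean(raw)
        if not passes_quality_checks(text):
            continue
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        # INSERT OR IGNORE skips exact duplicates (same content hash) already stored.
        cur = conn.execute(
            "INSERT OR IGNORE INTO documents (sha256, text) VALUES (?, ?)",
            (digest, text),
        )
        inserted += cur.rowcount
    conn.commit()
    return inserted


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    raw = [
        "Fine-tuning adapts a pretrained model to a narrower domain.",
        "Fine-tuning   adapts a pretrained model to a narrower domain.",  # duplicate after cleaning
        "too short",
    ]
    print(ingest(conn, raw), "row(s) inserted")
```

Hashing the cleaned text rather than the raw input means records that differ only in whitespace are treated as duplicates; near-duplicate detection would need a fuzzier method.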
