Fine-Tuning Multimodal Embeddings on Custom Text-Image Pairs

Related: Multimodal Embeddings: Introduction & Use Cases (with Python) and Fine-Tuning Text Embeddings for Domain-Specific Search (w/ Python).

In this article, I'll discuss how we can mitigate these issues via fine-tuning multimodal embedding models.

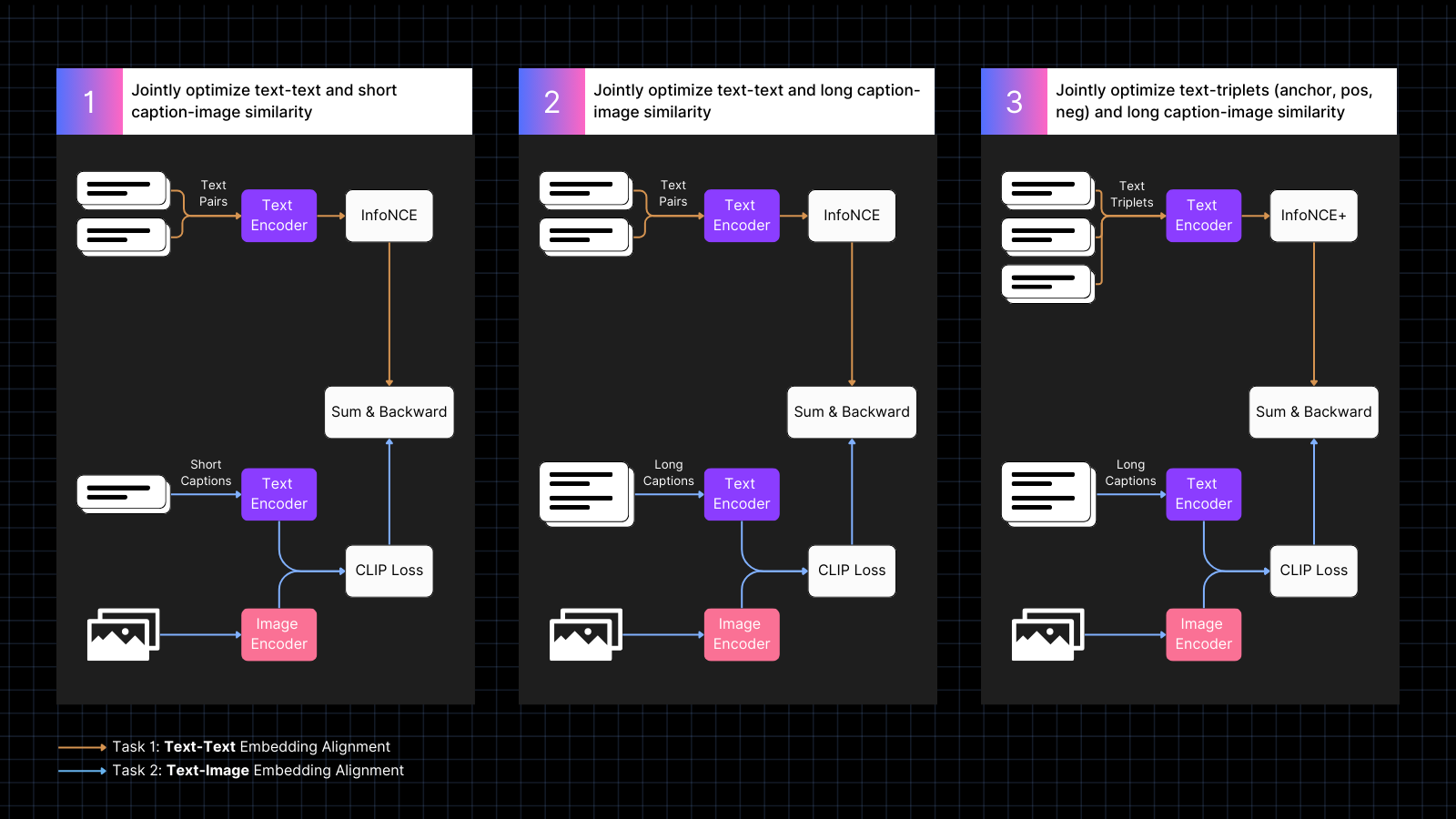

Learn how to fine-tune CLIP (Contrastive Language-Image Pre-training) models on custom text-image pairs through a detailed video tutorial that walks through the process using titles and thumbnails.

To address both single-image-text and multi-image-text scenarios, we use two datasets that are well suited for this task. One is a reformatted version of LLaVA Instruct Mix; it consists of conversations where a user provides both text and a single image as input.

To address these issues, we propose MoCa, a two-stage framework for transforming pre-trained VLMs into effective bidirectional multimodal embedding models.

Photo by Markus Winkler on Unsplash. Multimodal embeddings represent multiple data modalities in the same vector space such that similar concepts are co-located.
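To make "co-located in the same vector space" concrete, here is a minimal, dependency-free sketch of the symmetric contrastive objective that CLIP-style training uses. The toy 3-dimensional embeddings, the function names, and the fixed temperature of 0.07 are assumptions for illustration only; a real setup would take embeddings from the text and image encoders and would typically rely on a deep learning framework.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(text_embs, image_embs):
    """Cosine similarity of every text (rows) against every image (columns)."""
    texts = [normalize(t) for t in text_embs]
    images = [normalize(i) for i in image_embs]
    return [[sum(a * b for a, b in zip(t, i)) for i in images] for t in texts]

def diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct class for row k is column k."""
    total = 0.0
    for k, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[k]
    return total / len(logits)

def clip_style_loss(text_embs, image_embs, temperature=0.07):
    """Average of text-to-image and image-to-text cross-entropy.

    Assumes text_embs[k] and image_embs[k] form a matching pair.
    """
    sims = similarity_matrix(text_embs, image_embs)
    logits = [[s / temperature for s in row] for row in sims]
    logits_t = [list(col) for col in zip(*logits)]  # image-to-text view
    return 0.5 * (diagonal_cross_entropy(logits) + diagonal_cross_entropy(logits_t))

# Toy embeddings (made up): each text points roughly the same way as its image.
text_embs = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2]]
aligned_images = [[0.9, 0.2, 0.0], [0.1, 0.8, 0.3]]
swapped_images = aligned_images[::-1]  # deliberately mismatched pairs

sims = similarity_matrix(text_embs, aligned_images)
aligned_loss = clip_style_loss(text_embs, aligned_images)
swapped_loss = clip_style_loss(text_embs, swapped_images)
print(f"aligned loss: {aligned_loss:.4f}, swapped loss: {swapped_loss:.4f}")
```

The loss is low when each text sits closest to its own image and high when the pairs are mismatched, which is the signal fine-tuning uses to pull matching text and images together.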

Multimodal embedding models, like CLIP, unlock countless zero-shot use cases such as image classification and retrieval. Here, we saw how we can fine-tune such a model to adapt it to a specialized domain (i.e., my titles and thumbnails).

This project explores transformer-based models across a variety of tasks, including causal language modeling, text classification, translation, and multimodal learning.

To begin, prepare a dataset that pairs different modalities. For image-text models, this might involve collecting images with captions or descriptions. The data should be cleaned and formatted to match the input requirements of the base model.

Here, I will fine-tune CLIP on titles and thumbnails from my channel. At the end of this, we will have a model that can take title-thumbnail pairs and return a similarity score.
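The dataset preparation step described above could look roughly like the following sketch. The record keys (`title`, `thumbnail`), the output keys (`text`, `image_path`), the file paths, and the accepted image extensions are all hypothetical; the actual format depends on the base model and training library you use.

```python
from pathlib import Path

# Assumed set of acceptable thumbnail formats (adjust to your data).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}

def clean_title(title):
    """Collapse repeated whitespace and trim the ends."""
    return " ".join(title.split())

def build_pairs(records):
    """Turn raw records into cleaned, de-duplicated text-image pairs.

    Each record is a dict with hypothetical 'title' and 'thumbnail' keys.
    Records with an empty title or a non-image thumbnail path are skipped.
    """
    pairs = []
    seen = set()
    for record in records:
        title = clean_title(record.get("title") or "")
        thumbnail = (record.get("thumbnail") or "").strip()
        if not title:
            continue
        if Path(thumbnail).suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        key = (title.lower(), thumbnail)
        if key in seen:  # drop exact repeats of the same pair
            continue
        seen.add(key)
        pairs.append({"text": title, "image_path": thumbnail})
    return pairs

# Made-up example records, including the kinds of noise worth filtering out.
raw_records = [
    {"title": "  Fine-tuning   CLIP ", "thumbnail": "thumbs/clip.jpg"},
    {"title": "Fine-tuning CLIP", "thumbnail": "thumbs/clip.jpg"},        # duplicate
    {"title": "", "thumbnail": "thumbs/empty.png"},                       # no title
    {"title": "Multimodal embeddings", "thumbnail": "thumbs/notes.txt"},  # not an image
    {"title": "Text embeddings for search", "thumbnail": "thumbs/search.PNG"},
]

pairs = build_pairs(raw_records)
for pair in pairs:
    print(pair)
```

Each surviving pair holds one title and the path of its thumbnail, which is the shape a contrastive fine-tuning loop needs: one text and one image per positive example.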
