Figure 1 From Using Semi Supervised Convolutional Neural Networks For

Figure 1 From Law Text Classification Using Semi Supervised

This article covers semi-supervised classification with graph convolutional networks (GCNs), a technique for classifying graph-structured data when only some of the nodes are labeled. The aim of GLCN is to learn an optimal graph structure that best serves graph CNNs for semi-supervised learning by integrating both graph learning and graph convolution in a unified network architecture.
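To make the idea of combining graph learning and graph convolution in one forward pass more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the GLCN authors' implementation: the scoring function (a learnable vector `a` applied to absolute feature differences, followed by a row-wise softmax), the function names, and the toy data are all hypothetical choices made for this example.

```python
import numpy as np

def learn_graph(x, a):
    """Build a soft, row-stochastic adjacency matrix from node features.

    Assumption for this sketch: S_ij is proportional to
    exp(ReLU(a . |x_i - x_j|)), where `a` is a learnable vector.
    """
    diff = np.abs(x[:, None, :] - x[None, :, :])         # (n, n, d) pairwise differences
    scores = np.maximum(diff @ a, 0.0)                   # (n, n) non-negative scores
    scores = scores - scores.max(axis=1, keepdims=True)  # stabilize the softmax
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)  # each row sums to 1

def graph_conv(s, x, w):
    """One graph convolution layer that propagates over the learned graph."""
    return np.maximum(s @ x @ w, 0.0)                    # ReLU(S X W)

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))   # 5 nodes, 3 features each (toy data)
a = rng.normal(size=3)        # graph-learning parameters (hypothetical)
w = rng.normal(size=(3, 2))   # convolution weights (hypothetical)

s = learn_graph(x, a)         # learned graph
out = graph_conv(s, x, w)     # features propagated over that graph
```

Because `s` is computed from `x` and `a` inside the same forward pass, a training loop (omitted here) could update `a` and `w` together from a single loss, which is the sense in which the two steps form a unified architecture.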

Figure 1 From Graph Based Semi Supervised Learning With Convolution

In this paper, we lift this assumption and present two semi-supervised methods based on convolutional neural networks (CNNs) to learn discriminative hidden features. Our semi-supervised CNNs learn from both labeled and unlabeled data while also performing feature learning on raw sensor data.

We introduce a convolutional neural network that operates directly on graphs, allowing end-to-end learning of the feature pipeline. This architecture generalizes standard molecular ...

To this end, by adding a clustering layer to a deep convolutional neural network (CNN), we present a new training algorithm for a semi-supervised method that learns feature representations from unlabeled data while keeping the model consistent with the labeled data.

Graph convolutional neural networks (graph CNNs) have been widely used for graph data representation and semi-supervised learning tasks. However, existing graph ...

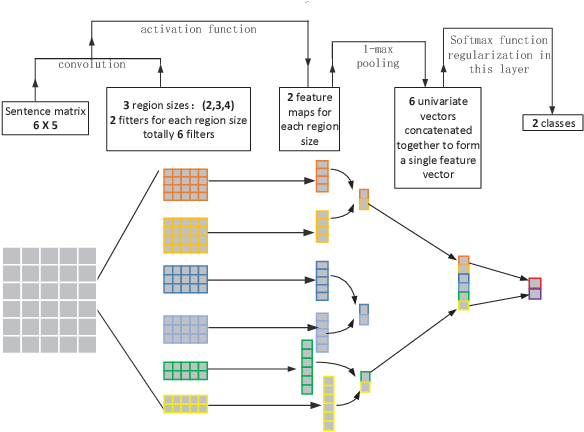

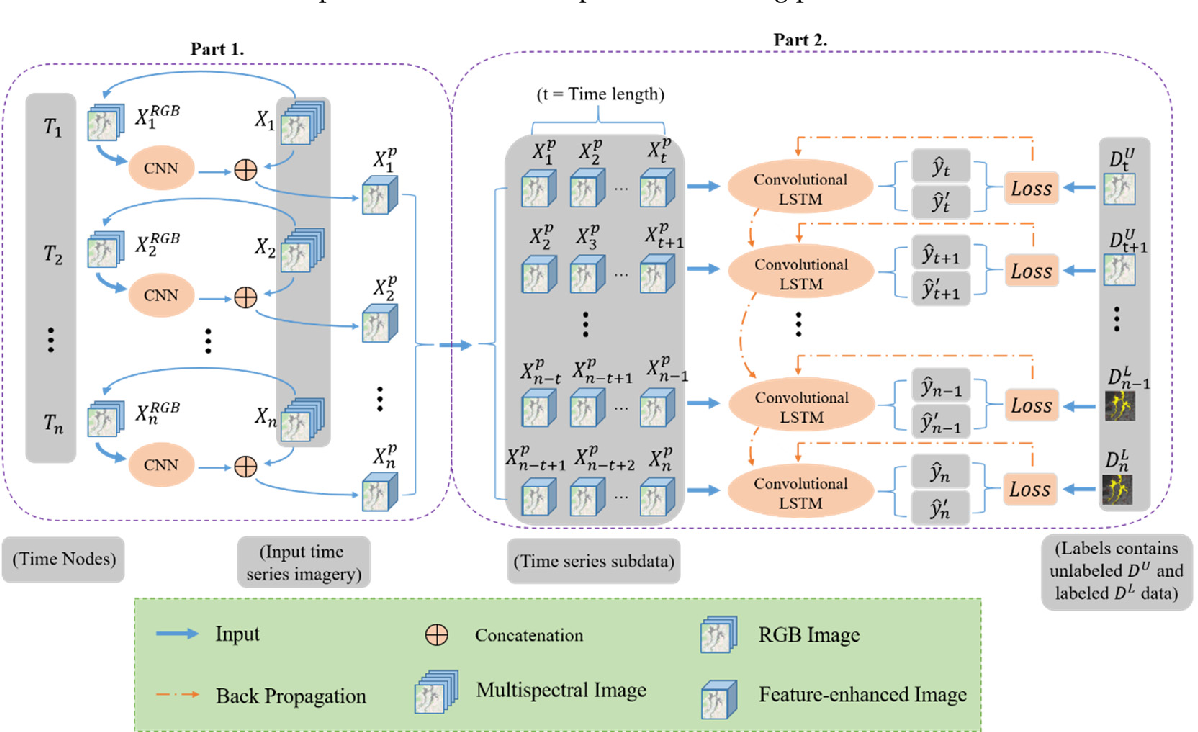

Figure 1 From Semi Supervised Convolutional Long Short Term Memory

This paper presents a new semi-supervised framework with convolutional neural networks (CNNs) for text categorization. Unlike previous approaches that rely on word embeddings, our method learns embeddings of small text regions from unlabeled data for integration into a supervised CNN.

In this paper, we demystify the GCN model for semi-supervised learning. In particular, we show that the graph convolution of the GCN model is simply a special form of Laplacian smoothing, which mixes the features of a vertex and its nearby neighbors.

We propose a novel semi-supervised learning method for convolutional neural networks (CNNs). The CNN is one of the most popular models for deep learning, and its successes across various applications include image and speech recognition, image captioning, and the game of Go.

We test our model in a number of experiments: semi-supervised document classification in citation networks, semi-supervised entity classification in a bipartite graph extracted from a knowledge graph, an evaluation of various graph propagation models, and a run-time analysis on random graphs.
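The claim that GCN graph convolution is a special form of Laplacian smoothing can be checked numerically. The sketch below assumes the commonly used propagation matrix with self-loops, D̃^{-1/2}(A + I)D̃^{-1/2}, and the symmetric normalized Laplacian L_sym = I - D̃^{-1/2}(A + I)D̃^{-1/2}. The function names, the smoothing weight `gamma`, and the toy path graph are illustrative choices, not taken from the text.

```python
import numpy as np

def normalized_adjacency(adj):
    """Return D~^{-1/2} (A + I) D~^{-1/2} for a dense adjacency matrix."""
    a_tilde = adj + np.eye(adj.shape[0])         # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def laplacian_smoothing(adj, features, gamma=1.0):
    """One smoothing step: X - gamma * L_sym X."""
    l_sym = np.eye(adj.shape[0]) - normalized_adjacency(adj)
    return features - gamma * (l_sym @ features)

# Toy path graph 0 - 1 - 2 - 3 with two features per vertex.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [2.0, 2.0],
                     [4.0, 0.0]])

propagated = normalized_adjacency(adj) @ features          # graph convolution without weights
smoothed = laplacian_smoothing(adj, features, gamma=1.0)   # Laplacian smoothing with gamma = 1

print(np.allclose(propagated, smoothed))
```

With `gamma=1.0` the two results coincide, because X - (I - Â)X = ÂX. Each output row is a weighted mix of a vertex's own features and those of its direct neighbors, which matches the "mixing" described above.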

Figure 1 From Semi Supervised Convolutional Neural Networks With Label

Figure 1 From Graph Convolutional Network For Semi Supervised Node
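In semi-supervised node classification, such as the citation-network experiments mentioned above, every node takes part in feature propagation but only the labeled nodes contribute to the loss. The following NumPy sketch shows a forward pass of a two-layer GCN in the widely cited form softmax(Â ReLU(Â X W0) W1), with a cross-entropy loss restricted to labeled nodes. The toy graph, layer sizes, random weights, and helper names are assumptions for illustration, and the training loop is omitted.

```python
import numpy as np

def normalize_adjacency(adj):
    """Return D~^{-1/2} (A + I) D~^{-1/2}."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def gcn_forward(a_hat, x, w0, w1):
    """Two-layer GCN: softmax(A_hat ReLU(A_hat X W0) W1)."""
    hidden = np.maximum(a_hat @ x @ w0, 0.0)
    return softmax(a_hat @ hidden @ w1)

def masked_cross_entropy(probs, labels, labeled_idx):
    """Average negative log-likelihood over the labeled nodes only."""
    picked = probs[labeled_idx, labels[labeled_idx]]
    return float(-np.mean(np.log(picked + 1e-12)))

rng = np.random.default_rng(0)

# Toy graph: two triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
adj = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    adj[i, j] = adj[j, i] = 1.0

x = rng.normal(size=(6, 4))             # node features (toy data)
labels = np.array([0, 0, 0, 1, 1, 1])   # full labels, shown only for reference
labeled_idx = np.array([0, 5])          # the loss sees just these two nodes

w0 = rng.normal(scale=0.1, size=(4, 8))
w1 = rng.normal(scale=0.1, size=(8, 2))

a_hat = normalize_adjacency(adj)
probs = gcn_forward(a_hat, x, w0, w1)
loss = masked_cross_entropy(probs, labels, labeled_idx)
```

The unlabeled nodes still influence the predictions through `a_hat`, since their features are mixed into their neighbors' representations, but their entries in `labels` are never read by the loss.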
