Figure 1 From Fast And Private Inference Of Deep Neural Networks By Co

This problem is particularly prevalent in deep neural networks (DNNs), where a non-linear activation separates each of the many linear layers. This work addresses the non-linear layers by taking a co-design approach between the activation functions and multi-party computation (MPC). Motivated by the success of previous work co-designing machine learning and MPC, we develop an activation function co-design: we replace all ReLUs with a polynomial approximation and evaluate them with single-round MPC protocols, which give state-of-the-art inference times in wide area networks.
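To make the idea of replacing a ReLU with a polynomial concrete, the following is a minimal sketch, not the paper's method: it fits a low-degree polynomial to ReLU by ordinary least squares on a fixed interval. The function name `fit_relu_polynomial`, the degree, and the interval `[-5, 5]` are assumptions chosen for illustration; the text above does not state which polynomial or fitting procedure the authors use.

```python
import numpy as np

def fit_relu_polynomial(degree=2, lo=-5.0, hi=5.0, num_points=1001):
    """Least-squares fit of a polynomial to ReLU on [lo, hi].

    Hypothetical helper for illustration only; the degree and the
    interval are assumptions, not values taken from the paper.
    """
    xs = np.linspace(lo, hi, num_points)
    relu = np.maximum(xs, 0.0)
    # Polynomial.fit works on a rescaled domain internally;
    # convert() returns coefficients in the original variable x.
    return np.polynomial.Polynomial.fit(xs, relu, degree).convert()

poly = fit_relu_polynomial()

# Compare the polynomial with the true ReLU on a few sample points.
sample = np.linspace(-5.0, 5.0, 11)
max_err = np.max(np.abs(poly(sample) - np.maximum(sample, 0.0)))
print("coefficients (constant term first):", poly.coef)
print("max abs error on sample points:", max_err)
```

A polynomial only needs additions and multiplications, which is what makes it attractive under MPC, whereas the comparison inside ReLU does not reduce to those operations. The approximation is only reasonable inside the fitted interval, so inputs to the activation would have to stay within that range for a fit like this to be usable.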

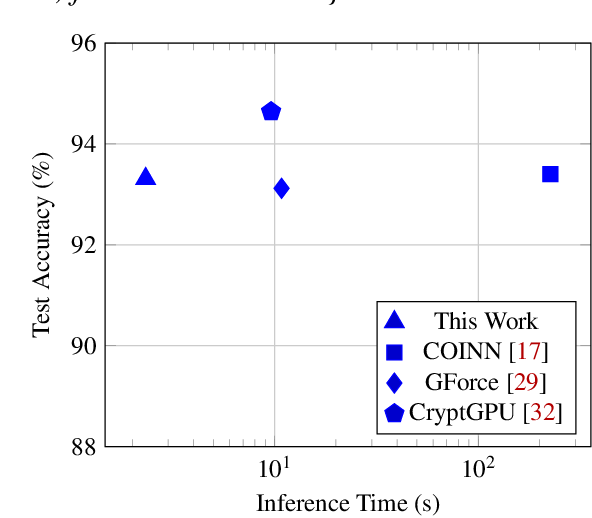

PILLAR-ESPN: this repo includes the implementation code for the paper Fast and Private Inference of Deep Neural Networks by Co-designing Activation Functions.

Figure 1: Summary of the inference time in seconds vs. test accuracy for each state-of-the-art approach on the CIFAR-10 dataset in the WAN (100 ms round-trip delay).

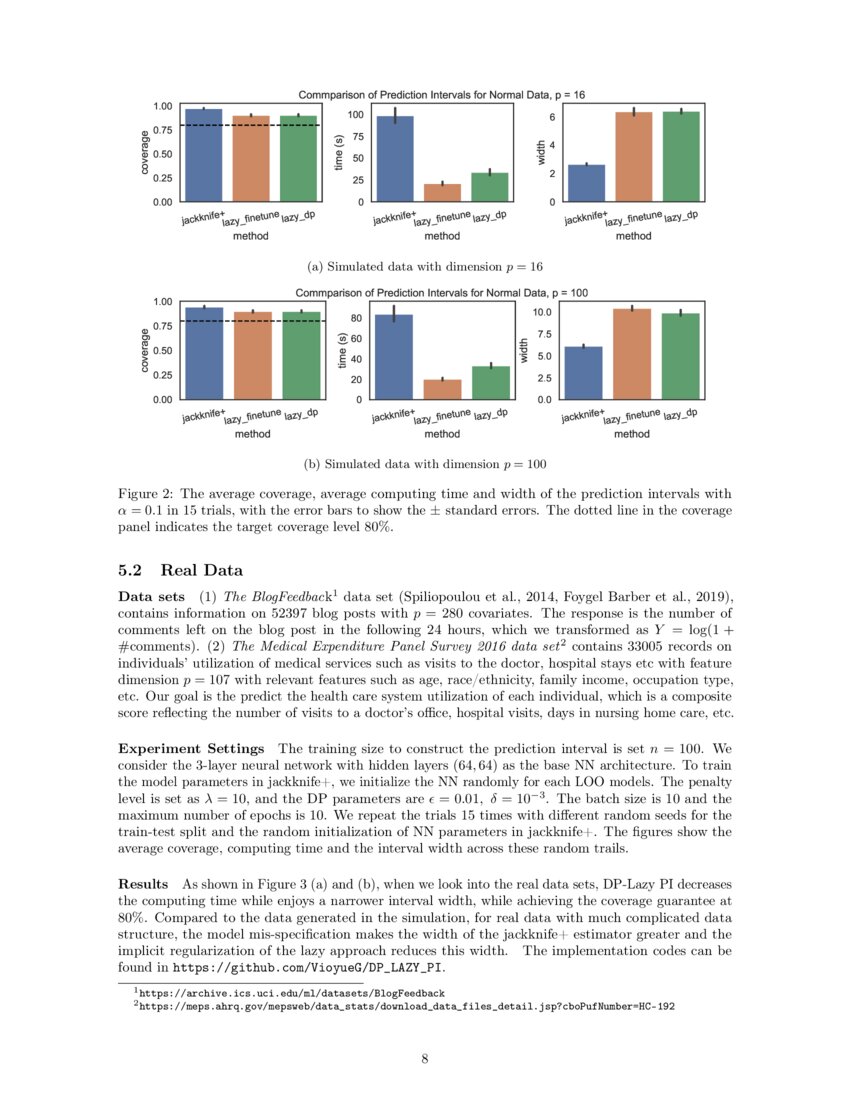

A separate work presents Cheetah, a new 2PC NN inference system that is faster and more communication-efficient than the state of the art, together with extensive benchmarks over several large-scale deep neural networks.
