Faster Training Of Diffusion Models And Improved Density Estimation Via

The paper "Faster Training of Diffusion Models and Improved Density Estimation via Parallel Score Matching," by Etrit Haxholli and one other author, contrasts the density estimation performance of DPMs trained via parallel score matching with that of DPMs trained through the standard approach (SA-DPM). Experiments cover 2D toy distributions alongside standard benchmark datasets including CIFAR-10, CelebA, and ImageNet.

Deep Diffusion Models For Robust Channel Estimation (DeepAI)

This work develops an approach to systematically and slowly destroy structure in a data distribution through an iterative forward diffusion process, and then learns a reverse diffusion process that restores structure in the data, yielding a highly flexible and tractable generative model. To expedite training and augment model flexibility, we leverage the intrinsic property of DPMs that allows scores at different time points to be optimized independently. As demonstrated empirically on synthetic and image datasets, our approach not only greatly speeds up training but also improves density estimation performance compared to the standard training approach for DPMs. We demonstrate the effectiveness of our proposed strategies on various models and show that they can significantly reduce training time and improve the quality of the generated images.
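The claim that scores at different time points can be optimized independently can be illustrated with a small toy sketch. This is not the architecture or training code of any paper quoted here: it assumes 1-D Gaussian data, a variance-preserving forward process with a made-up schedule `alpha_t = exp(-t)`, and a linear score model `s_t(x) = a*x + b` fitted by least squares in place of a neural network. All names (`noise_schedule`, `fit_score_at_time`, `true_score_coeffs`) are hypothetical. Each time point gets its own fit that only sees samples from that time, so the fits could run in parallel.

```python
import math
import random

# Assumed toy setup (not from any quoted paper): 1-D Gaussian data and a
# forward process x_t = alpha_t * x_0 + sigma_t * eps with eps ~ N(0, 1).
DATA_MEAN, DATA_STD = 2.0, 0.5
TIMES = [0.25, 0.5, 0.75, 1.0]


def noise_schedule(t):
    """Return (alpha_t, sigma_t) with alpha_t^2 + sigma_t^2 = 1 (assumed schedule)."""
    alpha = math.exp(-t)
    return alpha, math.sqrt(1.0 - alpha * alpha)


def fit_score_at_time(t, n_samples, rng):
    """Fit s_t(x) = a*x + b by least squares on the denoising target -eps/sigma_t.

    Only samples drawn at this single time t are used, so this fit does not
    depend on the fit at any other time point.
    """
    alpha, sigma = noise_schedule(t)
    xs, ys = [], []
    for _ in range(n_samples):
        x0 = rng.gauss(DATA_MEAN, DATA_STD)
        eps = rng.gauss(0.0, 1.0)
        xs.append(alpha * x0 + sigma * eps)  # noised sample x_t
        ys.append(-eps / sigma)              # denoising score matching target
    mean_x = sum(xs) / n_samples
    mean_y = sum(ys) / n_samples
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def true_score_coeffs(t):
    """Slope and intercept of the exact score of the Gaussian marginal p_t."""
    alpha, sigma = noise_schedule(t)
    var_t = alpha ** 2 * DATA_STD ** 2 + sigma ** 2
    return -1.0 / var_t, alpha * DATA_MEAN / var_t


# One independent fit per time point, each with its own random stream.
fitted = {
    t: fit_score_at_time(t, 50_000, random.Random(i))
    for i, t in enumerate(TIMES)
}

for t in TIMES:
    a, b = fitted[t]
    a_true, b_true = true_score_coeffs(t)
    print(f"t={t:.2f}  fitted=({a:.3f}, {b:.3f})  exact=({a_true:.3f}, {b_true:.3f})")
```

Because the data are Gaussian, the exact score is linear in `x`, so each per-time fit should land close to the closed-form coefficients. With real data, the linear model would be replaced by a network per time point or time interval, which is the part this sketch leaves out.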

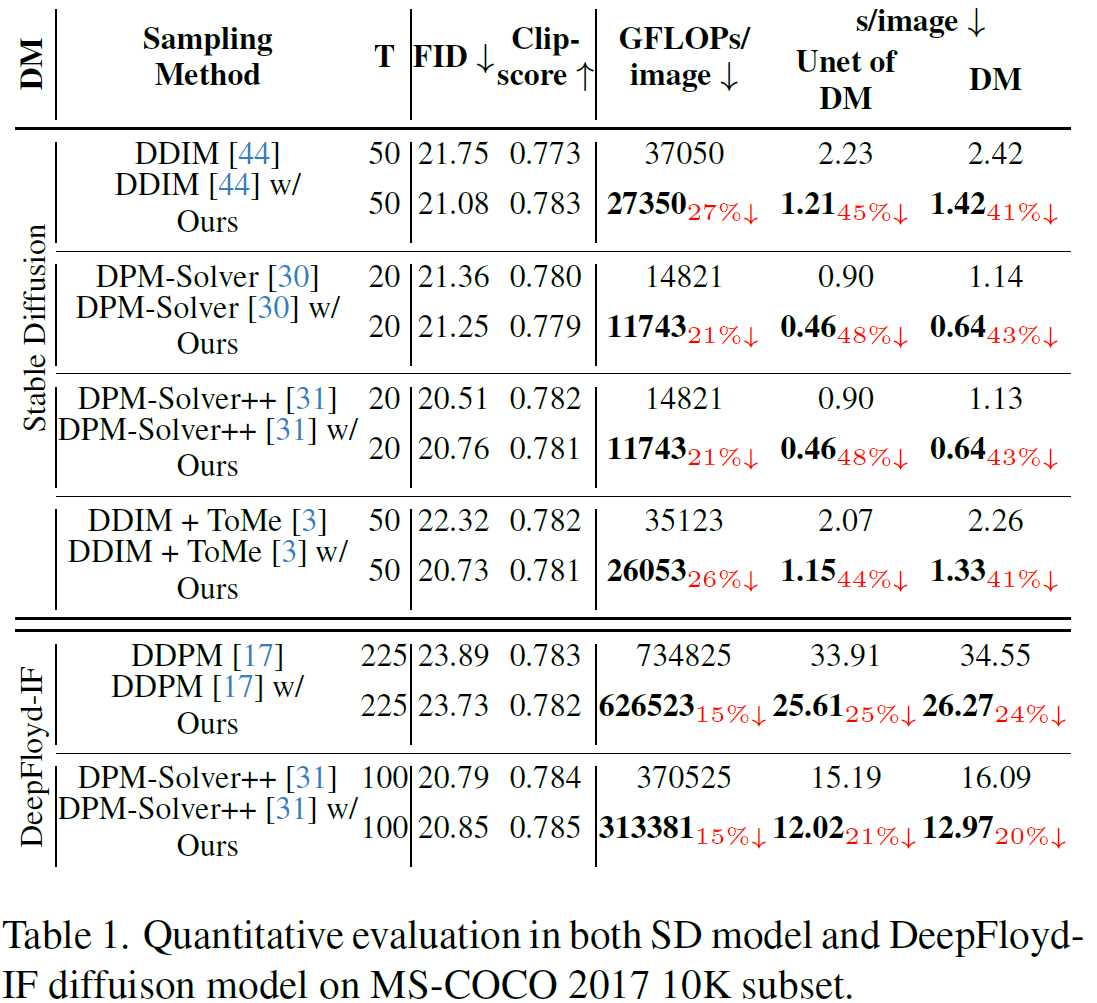

Faster Diffusion Rethinking The Role Of Unet Encoder In Diffusion Models

In diffusion probabilistic models (DPMs), the task of modeling the score evolution via a single time-dependent neural network necessitates extended training periods and may potentially impede ... In this paper, we resort to optimal transport theory to accelerate the training of diffusion models, providing an in-depth analysis of the forward diffusion process. We introduce a flexible family of diffusion-based generative models that achieves state-of-the-art likelihoods on image density estimation benchmarks. Unlike other diffusion-based models, our method allows for efficient optimization of the noise schedule jointly with the rest of the model. In this work, we propose several improved techniques, from both the evaluation perspective and the training perspective, that allow likelihood estimation by diffusion ODEs to outperform existing state-of-the-art likelihood estimators.
