Explainable AI for Pre-Trained Code Models What Do They Learn When

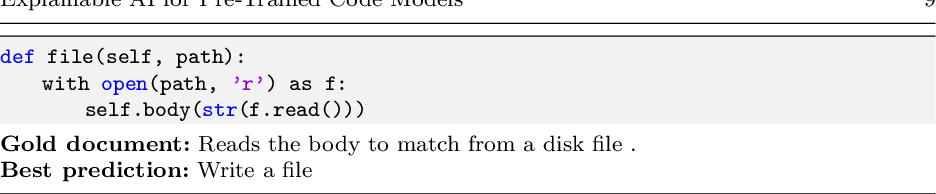

In this paper, using an example explainable AI (XAI) method (the attention mechanism), we study two recent large language models (LLMs) for code, CodeBERT and GraphCodeBERT, on a set of software engineering downstream tasks: code document generation (CDG), code refinement (CR), and code translation (CT).

... professionals in the industry. One potential remedy is to offer explainable AI (XAI) methods to provide the missing explainability. In this thesis, we aim to explore to what extent XAI has been studied in the SE domain (XAI4SE) and provide a comprehensive view of the current state of the art, as well as challenges and a roadmap for future ...
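As a rough illustration of how attention can be read as an explanation signal, the sketch below computes scaled dot-product attention weights for one query token and sums the attention mass per token category. It is not the procedure used in the paper: the token vectors, the category labels, and the helper names (`attention_weights`, `attention_by_category`) are all hypothetical, and a real study would read the weights from a trained model such as CodeBERT rather than from hand-written vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    return softmax(scores)


def attention_by_category(weights, categories):
    """Sum the attention mass that falls on each token category."""
    totals = {}
    for w, c in zip(weights, categories):
        totals[c] = totals.get(c, 0.0) + w
    return totals


# Toy input (hypothetical): the tokens of `return x + y` with made-up
# 2-dimensional key vectors and hand-assigned syntactic categories.
tokens = ["return", "x", "+", "y"]
categories = ["keyword", "identifier", "operator", "identifier"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 1.0]]
query = [0.0, 2.0]

weights = attention_weights(query, keys)
per_category = attention_by_category(weights, categories)

for token, weight in zip(tokens, weights):
    print(f"{token:>6}: {weight:.3f}")
print(per_category)
```

With these toy vectors the query aligns with the identifier keys, so most of the attention mass lands on the `identifier` category. Aggregating by category in this way is one simple method for asking which kinds of tokens a model attends to on a given task.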

Figure 1 from Explainable AI for Pre-Trained Code Models What Do They ...

The future of explainable AI: the journey toward fully explainable AI is still unfolding. Researchers are working on models that are inherently interpretable, new visualization tools that make hidden processes visible, and frameworks that measure the quality of explanations themselves.

In this course, you'll explore key techniques for interpreting models, from simple linear regression to complex neural networks. You'll learn how to analyze feature importance, visualize decision-making processes, and build more transparent AI systems.

One way to investigate this is with diagnostic tasks called probes. In this paper, we construct four probing tasks (probing for surface-level, syntactic, structural, and semantic information) for pre-trained code models.

Pre-training enables AI models to learn from massive text data without explicit supervision and to generalize across different tasks such as summarization, translation, and coding.
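The probing idea mentioned above can be sketched as follows: freeze the model's embeddings, train a small classifier on top of them to predict some property of the code, and treat the classifier's accuracy as evidence of how much of that property the embeddings encode. The example is a minimal sketch under stated assumptions. The 2-dimensional "embeddings", the binary label (for instance, whether a token is an identifier), and the helper names `train_probe` and `probe_accuracy` are hypothetical, and the four probing tasks in the paper are not reproduced here.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_probe(features, labels, epochs=200, lr=0.5):
    """Fit a logistic-regression probe with batch gradient descent.

    Only the probe's weights are updated; the input embeddings stay frozen.
    """
    dim = len(features[0])
    w = [0.0] * dim
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def probe_accuracy(w, b, features, labels):
    """Fraction of examples the probe classifies correctly."""
    correct = 0
    for x, y in zip(features, labels):
        pred = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
        correct += int(pred == y)
    return correct / len(labels)


# Hypothetical frozen embeddings; label 1 could mean "token is an identifier".
train_x = [[0.9, 0.1], [0.8, 0.3], [0.7, 0.2],
           [0.2, 0.9], [0.1, 0.7], [0.3, 0.8]]
train_y = [1, 1, 1, 0, 0, 0]
test_x = [[0.85, 0.2], [0.15, 0.8]]
test_y = [1, 0]

w, b = train_probe(train_x, train_y)
accuracy = probe_accuracy(w, b, test_x, test_y)
print(f"probe accuracy on held-out examples: {accuracy:.2f}")
```

The probe is kept linear on purpose: if a simple classifier can recover the property, the information is likely present in the embeddings themselves and was not learned by the probe.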

Table 1 from Explainable AI for Pre-Trained Code Models What Do They ...

These models rely on machine learning, a branch of AI, and are trained on vast datasets of code. Over time, they learn to identify syntax, structure, and even the purpose of different coding elements.

Within artificial intelligence (AI), explainable AI (XAI), often overlapping with interpretable AI or explainable machine learning (XML), is a field of research that explores methods that provide humans with the ability of intellectual oversight over AI algorithms. [1][2] The main focus is on the reasoning behind the decisions or predictions made by the AI algorithms, [3] to make them more ...

Figure 2 from Explainable AI for Pre-Trained Code Models What Do They ...
