Evaluating Retrieval-Augmented Generation Pipelines With LlamaIndex And

GitHub: Praveen76 / Build Retrieval-Augmented Generation Pipelines

To demonstrate how retrieval-augmented generation (RAG) works, we'll build a simple RAG system using LlamaIndex, a framework that can use OpenAI's models both for retrieval (embeddings) and for response generation. We'll also set up the environment needed to evaluate the RAG system with TruLens. The notebook shows how to build and evaluate a RAG pipeline using LlamaIndex, with a specific focus on evaluating the retrieval system and the generated responses within the pipeline.
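As a rough illustration of the retrieve-then-generate loop described above, here is a minimal, dependency-free Python sketch. It does not use LlamaIndex or OpenAI: as an assumption for the sake of a runnable example, retrieval is a toy term-frequency cosine similarity instead of embeddings, and `generate` is a hypothetical offline stand-in for an LLM call. All function names here (`tokenize`, `cosine`, `retrieve`, `build_prompt`, `generate`) are illustrative, not library APIs.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; a crude stand-in for a real tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    # Score every document against the query and keep the top_k best matches.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, contexts: list[str]) -> str:
    # Put the retrieved chunks into the prompt that would be sent to the LLM.
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


def generate(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call (e.g. an OpenAI chat model).
    # It simply returns the first context line so the sketch runs offline.
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


docs = [
    "LlamaIndex builds indices over documents for retrieval.",
    "TruLens records and evaluates LLM application traces.",
    "Paris is the capital of France.",
]
query = "What does TruLens evaluate?"
contexts = retrieve(query, docs, top_k=1)
answer = generate(build_prompt(query, contexts))
print(answer)  # prints: TruLens records and evaluates LLM application traces.
```

In a LlamaIndex pipeline, the index and query engine would typically take over the retrieval and prompt-assembly steps, and the stub would be replaced by a real model call.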

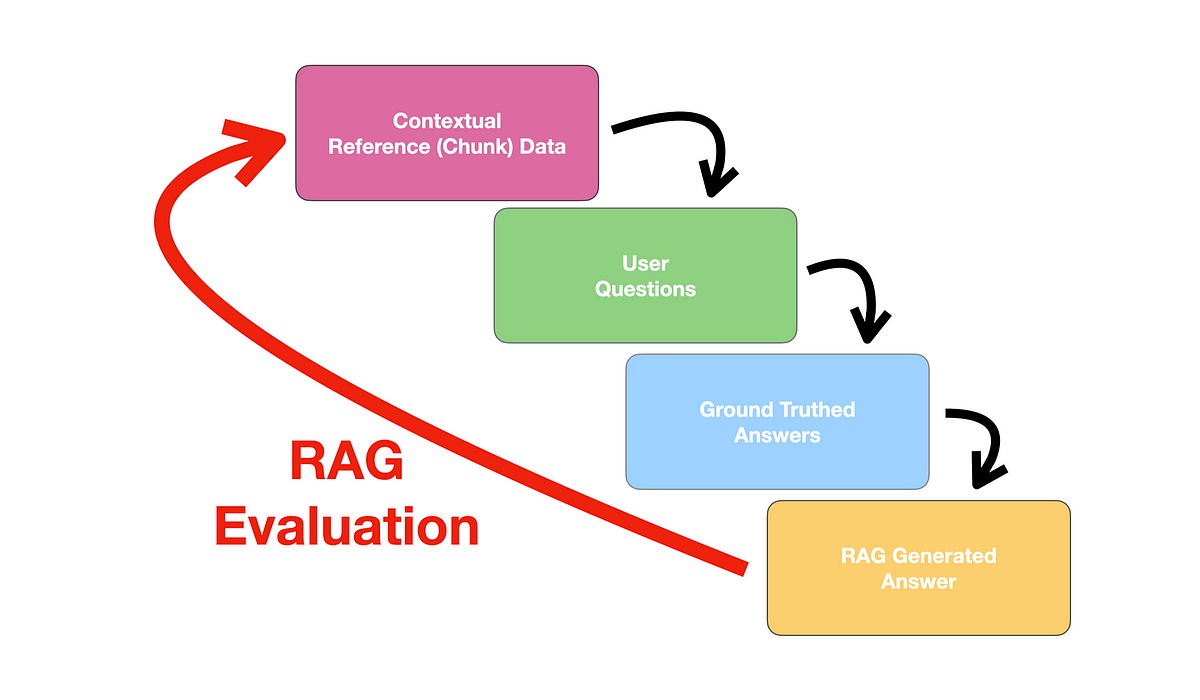

In this blog, we will implement and evaluate an advanced retrieval-augmented generation (RAG) technique using LlamaIndex and TruLens. In my previous blog, we explored the…

In simple terms, RAG involves three main parts: the input query, the retrieved context, and the response generated by the LLM. These three elements form the most important triad in the RAG process, and they are interdependent: the context is only useful if it is relevant to the query, and the response is only trustworthy if it is grounded in that context and actually answers the query.

In this blog, I will explore how to set up and evaluate RAG pipelines using LlamaIndex, a Python library for building and querying document indices, and TruLens, a tool for evaluating the…

Evaluation and benchmarking are crucial in LLM development: to improve the performance of an LLM app (RAG, agents), you must have a way to measure it. LlamaIndex offers key modules to measure the quality of generated results, as well as modules to measure retrieval quality.
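To make the triad concrete, the sketch below scores each of its three edges: context relevance (query vs. context), groundedness (response vs. context), and answer relevance (query vs. response). TruLens computes these with feedback functions, typically backed by an LLM; here, as an assumption chosen only so the example runs offline, each score is a crude word-overlap ratio. The helpers `tokens`, `overlap`, and `rag_triad` are hypothetical and are not TruLens APIs.

```python
import re


def tokens(text: str) -> set[str]:
    # Lowercased word set; a lexical proxy, not an LLM judge.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(a: str, b: str) -> float:
    # Fraction of the distinct words in `a` that also appear in `b` (0.0 to 1.0).
    ta = tokens(a)
    return len(ta & tokens(b)) / len(ta) if ta else 0.0


def rag_triad(query: str, context: str, response: str) -> dict[str, float]:
    # One score per edge of the query / context / response triangle.
    return {
        "context_relevance": overlap(query, context),  # is the retrieved context about the query?
        "groundedness": overlap(response, context),    # is the response supported by the context?
        "answer_relevance": overlap(query, response),  # does the response address the query?
    }


context = "Paris is the capital of France."
scores = rag_triad("capital of France", context, "The capital of France is Paris.")
ungrounded = rag_triad("capital of France", context, "The capital of France is Lyon.")
print(scores)
print(ungrounded)  # groundedness drops because "lyon" is not in the context
```

The second call shows why the three scores are reported separately: the ungrounded answer still looks relevant to the query, and only the groundedness score exposes the problem.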

Steps in Evaluating Retrieval-Augmented Generation (RAG) Pipelines

In this article, I'll walk you through building a custom RAG pipeline using LlamaIndex, Llama 3.2, and LlamaParse. We'll start with a simple example and then explore ways to scale and optimize the setup. All the code for this article is available in our GitHub repository.

LlamaIndex offers a multilayered framework for evaluating both the retrieval component and the language model output in an end-to-end pipeline.

1. Evaluating retrieval quality. Retrieval is the first stage in a RAG pipeline, where relevant documents are fetched based on a user query.
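Retrieval quality is usually summarized with ranking metrics. The sketch below implements two common ones, hit rate and mean reciprocal rank (MRR), which LlamaIndex's retriever evaluation also reports under the names `hit_rate` and `mrr`. This is a plain-Python illustration, not LlamaIndex code; the function signatures and the small evaluation set (node ids `n1` to `n7`) are hypothetical.

```python
def hit_rate(results: list[list[str]], expected: list[str]) -> float:
    # Fraction of queries whose expected node id appears anywhere in the top-k results.
    hits = sum(1 for ids, gold in zip(results, expected) if gold in ids)
    return hits / len(expected)


def mrr(results: list[list[str]], expected: list[str]) -> float:
    # Mean of 1/rank of the expected id (ranks start at 1); a miss contributes 0.
    total = 0.0
    for ids, gold in zip(results, expected):
        if gold in ids:
            total += 1.0 / (ids.index(gold) + 1)
    return total / len(expected)


# Hypothetical evaluation set: retrieved node ids per query, and the id that should be found.
retrieved = [["n1", "n4"], ["n7", "n2"], ["n5", "n6"]]
gold = ["n1", "n2", "n3"]

print(hit_rate(retrieved, gold))  # 2 of 3 queries hit, about 0.667
print(mrr(retrieved, gold))       # (1/1 + 1/2 + 0) / 3 = 0.5
```

Hit rate only asks whether the right chunk was retrieved at all, while MRR also rewards ranking it near the top, which matters when only the first few chunks fit into the LLM's prompt.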


Retrieval-Augmented Generation With LlamaIndex (DataCamp)

In the second half, you will learn how to implement a naive RAG pipeline using LlamaIndex in Python, which will then be enhanced into an advanced RAG pipeline with a selection of the following advanced RAG techniques:

This article focuses on the advanced RAG paradigm and its implementation. The introduction explores the RAG methodology and how it is put into practice with LlamaIndex, a data framework for building LLM applications over external data.
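A naive RAG pipeline begins by splitting documents into overlapping chunks before indexing them. The sketch below shows that idea with a simple word-based splitter. The name `chunk_words` and the word-level granularity are assumptions for illustration; LlamaIndex's own splitters, such as `SentenceSplitter`, measure `chunk_size` and `chunk_overlap` in tokens and try to respect sentence boundaries.

```python
def chunk_words(text: str, chunk_size: int = 5, overlap: int = 2) -> list[str]:
    # Split text into windows of `chunk_size` words; consecutive windows share
    # `overlap` words, so text near a boundary appears in both neighbouring chunks.
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = "one two three four five six seven eight nine ten"
for chunk in chunk_words(text):
    print(chunk)
# one two three four five
# four five six seven eight
# seven eight nine ten
```

Larger overlaps reduce the chance that a relevant passage is cut in half, at the cost of more chunks to embed and store.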
