Enabling Workflow Reproducibility In The Cloud With New Pipelines From

Zilliz Cloud Pipelines Making Unstructured Data Searchable

Build real-time, event-driven data pipelines in Microsoft Fabric using Azure and Fabric events to process data instantly from OneLake or Azure Blob Storage. Learn how to automate workflows. We outline community-curated pipeline initiatives that enable novice and experienced users to perform complex, best-practice analyses without having to manually assemble workflows.

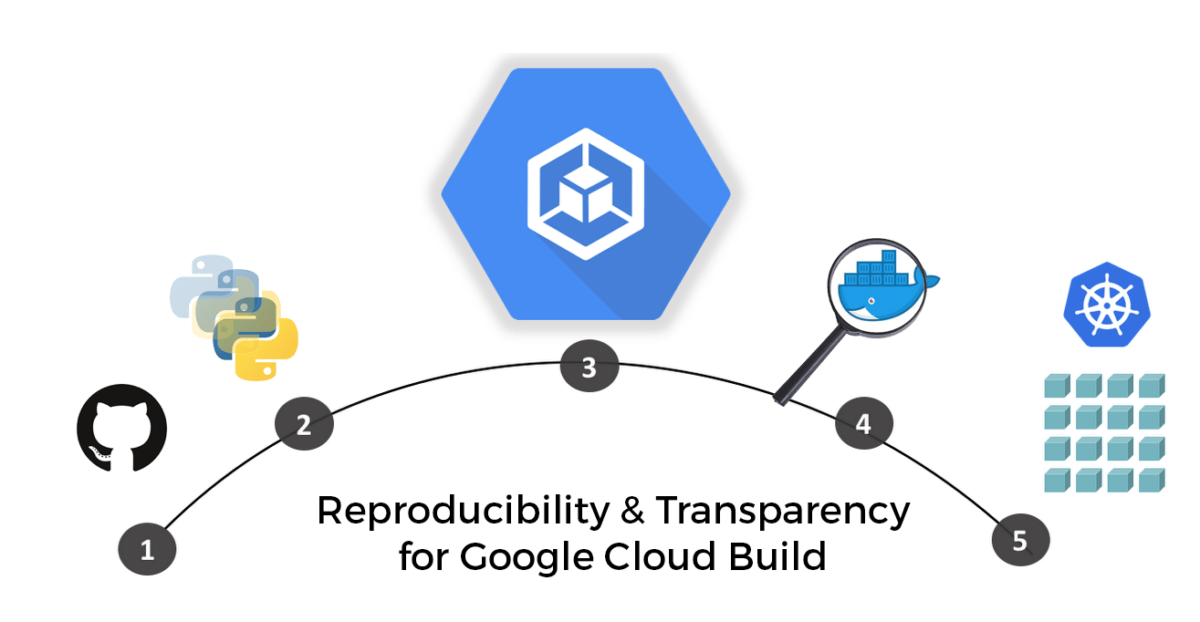

Solving Reproducibility Transparency In Google Cloud Build Pipelines

We show how to create a scalable, reusable, and shareable workflow using four different workflow engines: the Common Workflow Language (CWL), Guix Workflow Language (GWL), Snakemake, and Nextflow, each of which can be run in parallel. We leverage cloud technology to integrate scientific workflows in cloud-based HPC services (LSF and Kubernetes) using cloud object storage, enabling better I/O and data scalability. This has led to scattered and irreproducible workflow methodologies that compromise collaboration in biomedical research. With Quark, scale and share your workflows without having to worry about inconsistent results. These practices aim to make it easier to add tools to a pipeline, to make it easier to process data directly, and to make WMSs widely hospitable to any external tool or pipeline. We also show how following these guidelines can directly benefit the research community.
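As a rough illustration of running the same step in parallel while still getting consistent results, here is a minimal Python sketch. It does not use CWL, GWL, Snakemake, Nextflow, or Quark; the step `count_bases`, the helper names, and the sample data are all hypothetical and chosen only for this example. The idea is to run one deterministic step over several samples concurrently and then fingerprint the combined output, so two runs can be compared.

```python
import hashlib
import json
from concurrent.futures import ThreadPoolExecutor


def count_bases(sequence: str) -> dict:
    """Hypothetical analysis step: count each character in a sequence."""
    counts = {}
    for base in sequence:
        counts[base] = counts.get(base, 0) + 1
    # Sort keys so the result does not depend on input character order.
    return dict(sorted(counts.items()))


def run_samples(samples: dict, max_workers: int = 4) -> dict:
    """Run count_bases on every sample in parallel, keyed by sample name."""
    names = sorted(samples)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so names and results line up.
        results = list(pool.map(count_bases, (samples[n] for n in names)))
    return dict(zip(names, results))


def fingerprint(results: dict) -> str:
    """Return a SHA-256 digest of a canonical JSON encoding of the results."""
    canonical = json.dumps(results, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    samples = {"s1": "AACG", "s2": "TTGA"}  # made-up input data
    first = run_samples(samples)
    second = run_samples(samples)
    print(fingerprint(first) == fingerprint(second))
```

Matching fingerprints across runs only show that this toy step is deterministic; a real workflow would also need to pin tool versions and environments, which this sketch does not attempt.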

Cloud Workflow

Scientific workflows play a vital role in modern science, as they enable scientists to specify, share, and reuse computational experiments. To maximize the benefits, workflows need to support the reproducibility of the experimental methods they capture. Standardized CP workflows abstract away the complexity of individual, manual job execution and enable reproducibility and modularity, so that new tools can be swapped in as needed. In this blog we'll use Workflows to orchestrate a Dataflow pipeline in GCP. Let's get started! What are workflows? A workflow is made up of a series of steps described using the.
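The notion of a workflow as an ordered series of steps can be sketched in a few lines of plain Python. This is not the syntax of Google Cloud Workflows or of any other service; `run_workflow`, `EXAMPLE_STEPS`, and the step names are hypothetical and exist only for this illustration. Each step reads the shared state, and its result is stored under the step's name so later steps can use it.

```python
from typing import Any, Callable, Dict, List, Tuple

# A step is a (name, function) pair; the function receives the current state.
Step = Tuple[str, Callable[[Dict[str, Any]], Any]]


def run_workflow(steps: List[Step], initial: Dict[str, Any]):
    """Run steps in order, storing each result in the state under the step name."""
    state = dict(initial)  # copy so the caller's input is not mutated
    log = []               # names of executed steps, in execution order
    for name, func in steps:
        state[name] = func(state)
        log.append(name)
    return state, log


# Hypothetical three-step workflow: parse, filter, aggregate.
EXAMPLE_STEPS: List[Step] = [
    ("load", lambda s: [int(x) for x in s["raw"].split(",")]),
    ("clean", lambda s: [x for x in s["load"] if x >= 0]),
    ("total", lambda s: sum(s["clean"])),
]

if __name__ == "__main__":
    state, log = run_workflow(EXAMPLE_STEPS, {"raw": "3,-1,4"})
    print(log, state["total"])
```

Keeping the step list as data rather than hard-coded calls is what makes it easy to swap a step out or to record which steps ran, at the cost of less explicit control flow.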


