Efficient Vision Language Pretraining With Visual Concepts And Hierarchical Alignment

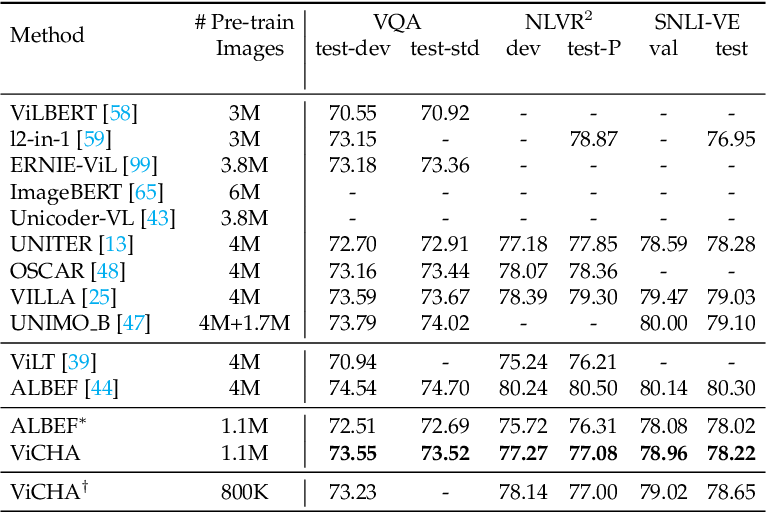

Although pretrained on four times less data, our ViCHA strategy outperforms other approaches on several downstream tasks such as image-text retrieval, VQA, visual reasoning, visual entailment and visual grounding. As illustrated in Figure 2, our scheme equips the vision and text encoders with three original components: a visual concepts module that enriches the image encoder with relevant visual concepts (VCs), a new cross-modal interaction that aligns visual and language feature representations at multiple levels, and a self-supervised component based on the recently proposed masked image modeling.
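The text above says only that the cross-modal interaction aligns visual and language features "at multiple levels". The sketch below illustrates one common way to express such an objective, assuming (our assumption, not a detail given here) that each level is aligned with a standard symmetric image-text contrastive (InfoNCE) loss and that the per-level losses are averaged with equal weight. The function names, the temperature of 0.07 and the toy feature values are all hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[Sequence[float]]  # one feature vector per batch element


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _cross_entropy(logits: List[float], target: int) -> float:
    """Numerically stable softmax cross-entropy (-log p[target]) for one row of logits."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(img: Matrix, txt: Matrix, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image i and text i form the positive pair, all others are negatives."""
    img_n = [_normalize(v) for v in img]
    txt_n = [_normalize(v) for v in txt]
    n = len(img_n)
    # sim[i][j] = cosine(image i, text j) / temperature
    sim = [[sum(a * b for a, b in zip(iv, tv)) / temperature for tv in txt_n] for iv in img_n]
    image_to_text = sum(_cross_entropy(sim[i], i) for i in range(n)) / n
    text_to_image = sum(_cross_entropy([sim[r][j] for r in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


def hierarchical_alignment_loss(img_levels: List[Matrix], txt_levels: List[Matrix],
                                temperature: float = 0.07) -> float:
    """Hypothetical multi-level alignment: equal-weight average of per-level contrastive losses."""
    if len(img_levels) != len(txt_levels):
        raise ValueError("image and text must provide the same number of levels")
    losses = [contrastive_loss(i, t, temperature) for i, t in zip(img_levels, txt_levels)]
    return sum(losses) / len(losses)


# Two levels (e.g. a lower and an upper layer), batch of two image-text pairs.
aligned = [[[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]]]
texts = aligned                                   # text features that match their images
swapped = [[lvl[1], lvl[0]] for lvl in aligned]   # text features paired with the wrong image
print(hierarchical_alignment_loss(aligned, texts) < hierarchical_alignment_loss(aligned, swapped))  # True
```

Matched pairs yield a lower loss than swapped pairs at every level, which is the behaviour a multi-level alignment objective is meant to reward. Equal weighting is simply the most neutral choice for a sketch; an actual system may weight or select levels differently.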

We propose a simple strategy for masking image patches during vision-language contrastive learning that improves both the quality of the learned representations and the training speed. Recent Vision Transformer (ViT) based approaches circumvent this issue but struggle with long visual sequences and lack detailed cross-modal alignment information. This paper introduces a ViT-based VLP technique that efficiently incorporates object information through a novel patch-text alignment mechanism. Abstract: Vision and language pretraining has become the prevalent approach for tackling multimodal downstream tasks. The current trend is to move towards ever larger models and pretraining datasets.
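The masking strategy mentioned above is not specified in detail here. As a minimal sketch, assuming plain uniform random masking (the quoted work may choose patches differently), the idea is to drop a fraction of the patch tokens before the image encoder so that fewer tokens are processed per image. The function name `random_patch_mask` and its parameters are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def random_patch_mask(patches: Sequence, mask_ratio: float,
                      seed: int = 0) -> Tuple[List, List[int]]:
    """Drop a random fraction of patch tokens, keeping the survivors in original order.

    Returns the kept patches and their indices, since a later stage may need
    to know where the visible patches came from (e.g. for position embeddings).
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    n = len(patches)
    if n == 0:
        return [], []
    n_keep = max(1, round(n * (1.0 - mask_ratio)))
    rng = random.Random(seed)                      # seeded for reproducibility
    keep_idx = sorted(rng.sample(range(n), n_keep))
    return [patches[i] for i in keep_idx], keep_idx


# A 14x14 grid of patch tokens (196 patches, as for a 224px image with 16px patches).
patches = [f"patch_{i}" for i in range(196)]
kept, idx = random_patch_mask(patches, mask_ratio=0.5)
print(len(kept))  # 98
```

With a 50% ratio the encoder sees half as many tokens, which is where a training-speed gain would come from; whether representation quality also improves depends on the method and is not something this sketch demonstrates.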

Multi-Grained Vision Language Pre-Training: Aligning Texts With Visual Concepts

… with a CLIP-based filtering technique. An illustration of our approach, called ViCHA for Efficient Vision Language Pretraining with Visual Concepts and Hierarchical Alignment, is presented in Fig. 1. We will show the effectiveness of our approach on classical downstream tasks used for VLP evaluation while drastically limiting the size of the training data. In this paper, we propose performing multi-grained vision language pre-training by aligning text descriptions with the corresponding visual concepts in images. In this paper, we propose NEVLP, a noise-robust framework for efficient vision language pre-training that requires less pretraining data.
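The passage mentions a CLIP-based filtering technique for obtaining visual concepts but does not describe it. A minimal sketch, assuming that each candidate concept from a vocabulary is scored by cosine similarity between its text embedding and the image embedding, and that the top-k concepts above a threshold are kept. The embeddings below are toy 3-d vectors standing in for CLIP outputs; `select_visual_concepts`, `k` and `min_score` are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def select_visual_concepts(image_emb: Sequence[float],
                           concept_embs: Dict[str, Sequence[float]],
                           k: int = 3, min_score: float = 0.0) -> List[Tuple[str, float]]:
    """Rank vocabulary concepts by similarity to the image; keep the top-k above min_score."""
    scored = [(name, cosine(image_emb, emb)) for name, emb in concept_embs.items()]
    scored = [pair for pair in scored if pair[1] >= min_score]   # filtering step
    scored.sort(key=lambda pair: pair[1], reverse=True)          # most similar first
    return scored[:k]


# Toy "embeddings"; in practice these would come from a CLIP image/text encoder.
image = [0.9, 0.4, 0.1]
vocab = {
    "dog": [1.0, 0.3, 0.0],
    "grass": [0.5, 0.8, 0.1],
    "car": [0.0, 0.1, 1.0],
    "ball": [0.7, 0.6, 0.2],
}
for name, score in select_visual_concepts(image, vocab, k=2, min_score=0.5):
    print(f"{name}: {score:.3f}")
```

The selected concept words could then be passed to the model alongside the image; the choice of `k` and threshold here is illustrative only.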

Can Pre-Trained Vision And Language Models Answer Visual Information…

Curriculum Learning For Data-Efficient Vision-Language Alignment

Table 1 From Efficient Vision Language Pretraining With Visual Concepts And Hierarchical Alignment
