Efficient LLM Inference With Limited Memory Apple Plato Data

This paper tackles the challenge of efficiently running LLMs that exceed the available DRAM capacity by storing the model parameters in flash memory and bringing them into DRAM on demand. Motivated by these challenges, the authors propose (in Section 3 of the paper) methods that reduce data transfer volume and raise read throughput in order to significantly speed up inference.
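To make the "on demand" idea concrete, here is a minimal sketch, not the paper's implementation. It assumes a toy float32 weight matrix stored row by row in an ordinary file that stands in for flash, and a Python dict that stands in for DRAM. The names `write_weights`, `OnDemandWeights`, `ROWS`, and `COLS` are hypothetical and chosen only for illustration.

```python
import os
import tempfile
from array import array

ROWS, COLS = 1000, 64        # hypothetical toy matrix shape
ROW_BYTES = COLS * 4         # each row holds COLS float32 values


def write_weights(path):
    """Write a toy matrix to disk; the file plays the role of flash."""
    with open(path, "wb") as f:
        for r in range(ROWS):
            array("f", [float(r)] * COLS).tofile(f)


class OnDemandWeights:
    """Loads a row from the file only the first time it is requested."""

    def __init__(self, path):
        self.file = open(path, "rb")
        self.cache = {}          # rows currently held in memory ("DRAM")
        self.bytes_read = 0      # total bytes fetched from the file

    def row(self, r):
        if r not in self.cache:
            self.file.seek(r * ROW_BYTES)
            data = self.file.read(ROW_BYTES)
            self.bytes_read += len(data)
            values = array("f")
            values.frombytes(data)
            self.cache[r] = values
        return self.cache[r]

    def close(self):
        self.file.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "weights.bin")
        write_weights(path)
        weights = OnDemandWeights(path)
        for r in (3, 7, 3):      # the second request for row 3 hits the cache
            weights.row(r)
        print(weights.bytes_read, "of", ROWS * ROW_BYTES, "bytes read")
        weights.close()
```

Only the rows that are actually requested are ever read, so the amount of data moved depends on how many distinct rows a computation touches rather than on the full model size.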

In this blog, we review Apple’s recently published paper, “LLM in a Flash: Efficient Large Language Model Inference with Limited Memory.” The paper addresses the challenges of, and solutions for, running large language models (LLMs) on devices with limited DRAM capacity. Apple AI researchers say they have made a key breakthrough in deploying LLMs on iPhones and other Apple devices with limited memory.

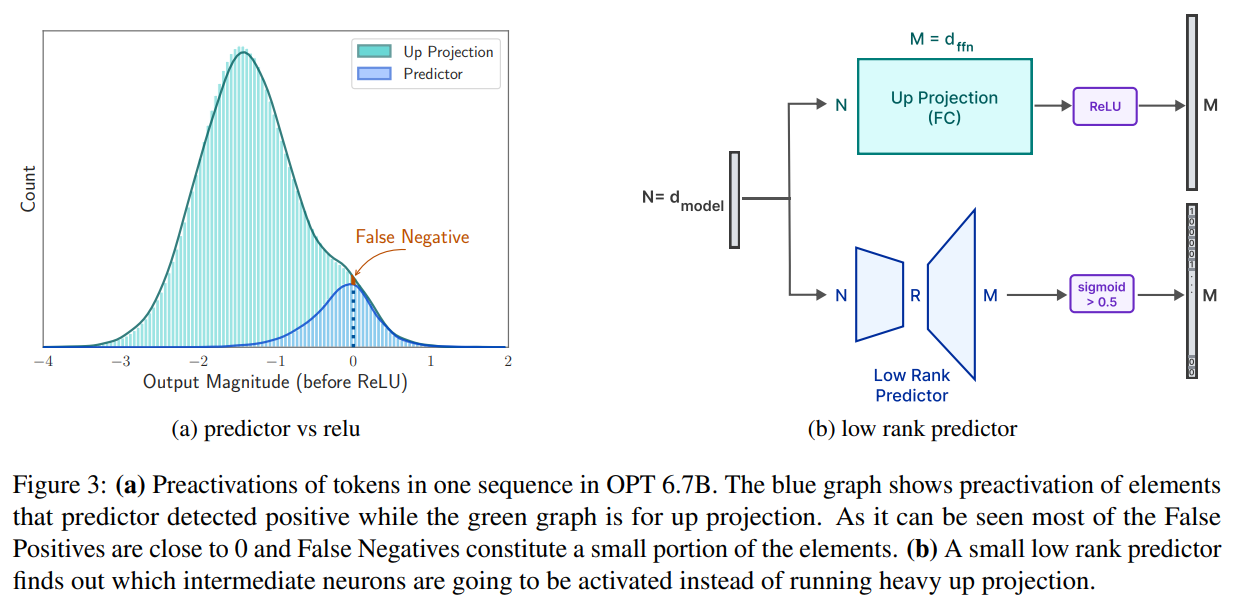

Efficient inference techniques have been developed to tackle the challenges of running large models on devices with limited memory. The common approach to making LLMs more accessible is to reduce the model size, but in this paper the researchers from Apple present a method for running large language models with fewer resources, specifically on a device that does not have enough memory to load the entire model. By optimizing for large sequential reads and leveraging parallelized reads, the approach maximizes throughput from flash memory, making it viable for LLM inference. The authors' method involves constructing an inference cost model that harmonizes with flash memory behavior, guiding optimization in two critical areas: reducing the volume of data transferred from flash and reading data in larger, more contiguous chunks.
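The two read-side ideas, larger contiguous chunks and parallelized reads, can be illustrated with a short sketch. This is an assumption-laden toy, not the authors' code: it merges adjacent row indices into runs so each run becomes one read, then issues the reads from a thread pool. `coalesce`, `read_rows`, and `ROW_BYTES` are hypothetical names, and `os.pread` is available on POSIX systems only.

```python
import os
from concurrent.futures import ThreadPoolExecutor

ROW_BYTES = 256  # hypothetical size of one weight row on disk


def coalesce(rows):
    """Merge row indices into (start, count) runs of adjacent rows."""
    runs = []
    for r in sorted(set(rows)):
        if runs and r == runs[-1][0] + runs[-1][1]:
            # r directly follows the last run, so extend that run by one row
            runs[-1] = (runs[-1][0], runs[-1][1] + 1)
        else:
            runs.append((r, 1))
    return runs


def read_rows(fd, rows, workers=4):
    """Fetch the requested rows with one pread per run, issued in parallel."""

    def read_run(run):
        start, count = run
        return start, os.pread(fd, count * ROW_BYTES, start * ROW_BYTES)

    out = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for start, blob in pool.map(read_run, coalesce(rows)):
            # split each contiguous blob back into individual rows
            for i in range(len(blob) // ROW_BYTES):
                out[start + i] = blob[i * ROW_BYTES:(i + 1) * ROW_BYTES]
    return out
```

For example, requesting rows 1, 2, 3 and 9 results in two reads instead of four: one covering rows 1 to 3 and one for row 9. Whether this pays off on a given device depends on its per-read latency and bandwidth, which is the kind of trade-off a cost model is meant to capture.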
