Efficient And Economic Large Language Model Inference With Attention

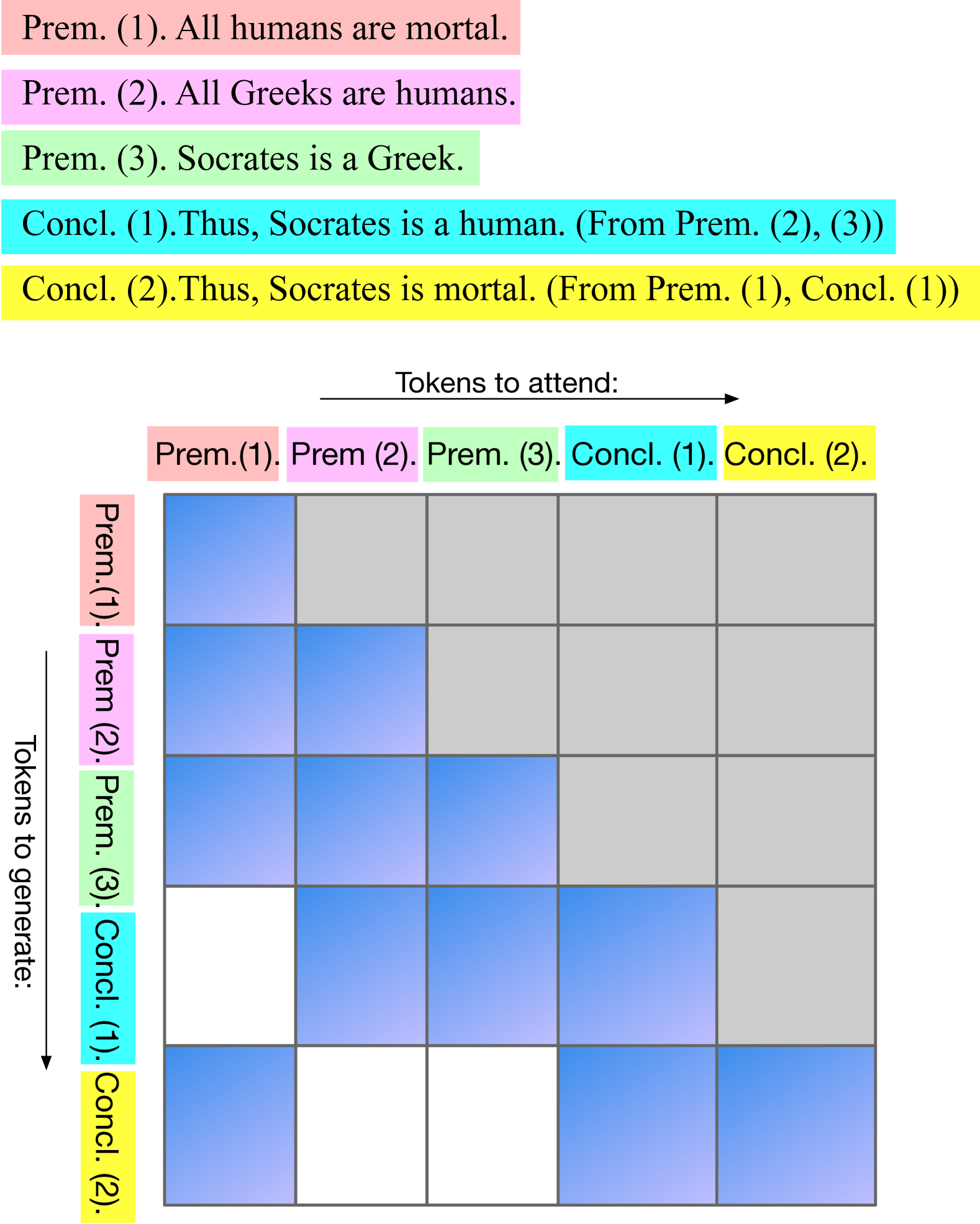

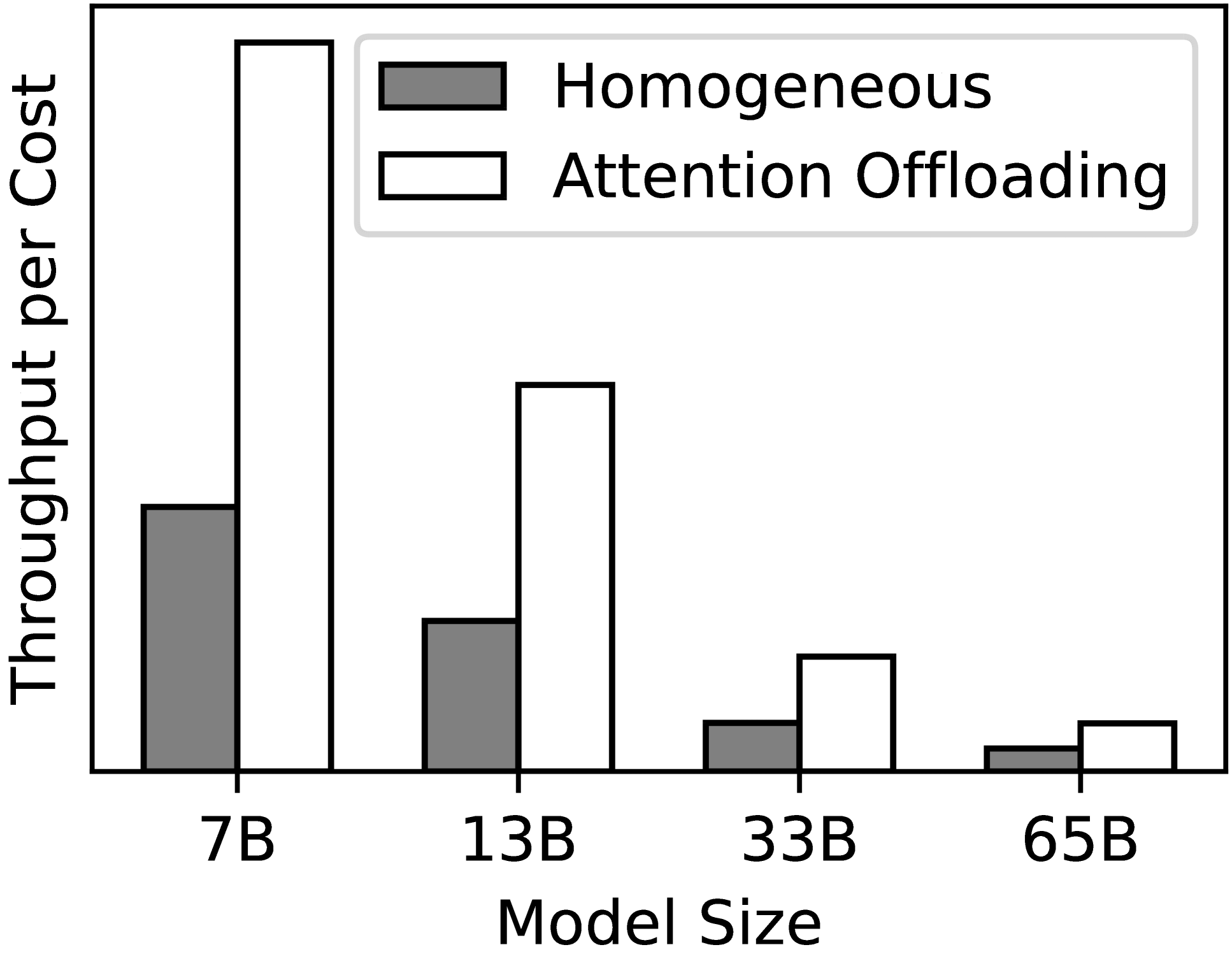

To enhance the efficiency and cost-effectiveness of LLM serving, we introduce the concept of attention offloading. This approach leverages a collection of cheap, memory-optimized devices for the attention operator while still utilizing high-end accelerators for other parts of the model.

Value-aware token pruning (VATP) is proposed for KV cache reduction. By incorporating both attention scores and the L1 norm of value vectors to evaluate token importance, VATP addresses the limitations of conventional approaches that rely solely on attention scores.
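The token-importance idea behind VATP can be illustrated with a small sketch. It assumes the two signals are combined by multiplying a token's attention score by the L1 norm of its value vector; the text above only says both are incorporated, so the product, the function names, and the `budget` parameter are illustrative assumptions rather than the authors' implementation.

```python
from typing import List, Sequence


def l1_norm(vec: Sequence[float]) -> float:
    """Sum of absolute values of a vector's entries."""
    return sum(abs(x) for x in vec)


def token_importance(attn_scores: Sequence[float],
                     values: Sequence[Sequence[float]]) -> List[float]:
    """Score each cached token by attention score times value L1 norm.

    Assumption: the two signals are combined as a product.
    """
    if len(attn_scores) != len(values):
        raise ValueError("need one attention score per value vector")
    return [s * l1_norm(v) for s, v in zip(attn_scores, values)]


def tokens_to_keep(attn_scores: Sequence[float],
                   values: Sequence[Sequence[float]],
                   budget: int) -> List[int]:
    """Return the indices of the `budget` most important tokens, in order."""
    scores = token_importance(attn_scores, values)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:budget])


# Token 0 has the highest attention score but a near-zero value vector,
# so it contributes little to the attention output and is pruned first.
attn = [0.5, 0.3, 0.2]
vals = [[0.01, -0.01], [1.0, -2.0], [0.5, 0.5]]
kept = tokens_to_keep(attn, vals, budget=2)
```

In this toy input, ranking by attention score alone would keep tokens 0 and 1, while the combined score keeps tokens 1 and 2.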

One line of work identifies the attention computation as the main bottleneck in large language model inference and develops an architecture that offloads the attention computation to a remote server while keeping the rest of the model on the local device.

... techniques aimed at enhancing the efficiency of LLM inference. This paper presents a comprehensive survey of the existing literature on efficient LLM inference. We start by analyzing the ...

Here we explore various strategies to improve inference efficiency, including speculative decoding, grouped-query attention, quantization, parallelism, continuous batching, and sliding window.

Abstract: The rapid advancement of deep learning has led to significant progress in large language models (LLMs), with the attention mechanism serving as a core component of their success. However, the computational and memory demands of attention mechanisms pose bottlenecks for efficient inference, especially in long-sequence and real-time tasks.
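To make the memory demand of attention concrete, the following sketch estimates the size of the KV cache, which grows linearly with sequence length. The formula is the standard one (keys plus values, per layer, per KV head); the model dimensions used in the example are hypothetical and do not describe any specific model mentioned above. The second call shows why grouped-query attention helps: sharing key/value heads across query heads shrinks the cache proportionally.

```python
def kv_cache_bytes(num_layers: int,
                   num_kv_heads: int,
                   head_dim: int,
                   seq_len: int,
                   batch_size: int = 1,
                   bytes_per_value: int = 2) -> int:
    """Estimate KV cache size in bytes.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 corresponds to a 16-bit format such as fp16.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical configuration: 32 layers, 32 heads of dimension 128,
# one sequence of 4096 tokens stored in 16-bit precision.
full_mha = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                          head_dim=128, seq_len=4096)

# Same model with grouped-query attention using 8 KV heads.
with_gqa = kv_cache_bytes(num_layers=32, num_kv_heads=8,
                          head_dim=128, seq_len=4096)

print(full_mha / 2**30, "GiB")  # 2.0 GiB
print(with_gqa / 2**20, "MiB")  # 512.0 MiB
```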

We significantly reduce both pre-filling and decoding memory and latency for long-context LLMs without sacrificing their long-context abilities. Deploying long-context large language models (LLMs) is essential but poses significant computational and memory challenges.

To enhance the efficiency of LLM decoding, we introduce model-attention disaggregation. This approach leverages a collection of cheap, memory-optimized devices for the attention operator while still utilizing high-end accelerators for other parts of the model.

Attention offloading is a specialized memory management technique that separates computational tasks in language models. It works by redirecting memory-intensive attention operations to dedicated, memory-optimized devices instead of processing them on the main accelerator.
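The division of labor described above can be sketched in a few lines. This is a toy, single-head illustration under stated assumptions, not the architecture from any of the quoted papers: `AttentionWorker` and `ModelWorker` are hypothetical names, the Q/K/V projections are replaced by the identity, and the call between the two objects stands in for what would be a network or interconnect transfer in a real deployment.

```python
import math
from typing import List

Vector = List[float]


class AttentionWorker:
    """Stands in for a memory-optimized device.

    It owns the KV cache and runs only the attention operator.
    """

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []

    def append_and_attend(self, q: Vector, k: Vector, v: Vector) -> Vector:
        # Store the new token's key and value in the cache.
        self.keys.append(k)
        self.values.append(v)
        # Scaled dot-product attention over every cached token.
        scale = 1.0 / math.sqrt(len(q))
        logits = [scale * sum(a * b for a, b in zip(q, key))
                  for key in self.keys]
        peak = max(logits)  # subtract the max for numerical stability
        weights = [math.exp(x - peak) for x in logits]
        total = sum(weights)
        return [sum(w * val[d] for w, val in zip(weights, self.values)) / total
                for d in range(len(v))]


class ModelWorker:
    """Stands in for a high-end accelerator running the non-attention parts."""

    def __init__(self, attention: AttentionWorker) -> None:
        self.attention = attention

    def decode_step(self, hidden: Vector) -> Vector:
        # Assumption: identity projections instead of learned Q/K/V weights.
        q, k, v = hidden, hidden, hidden
        # In a real system this call would cross a network link.
        attn_out = self.attention.append_and_attend(q, k, v)
        # Residual connection; feed-forward layers are omitted for brevity.
        return [h + a for h, a in zip(hidden, attn_out)]


worker = AttentionWorker()
model = ModelWorker(worker)
first = model.decode_step([1.0, 0.0])
second = model.decode_step([0.0, 1.0])
```

Only the small per-token vectors `q`, `k`, `v` and the attention output cross the boundary between the two workers; the growing KV cache never leaves the attention side, which is the property that makes cheaper memory-optimized hardware a plausible host for it.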

