E07 Feed Forward Network Transformer Series With Google Engineer

As a regular SWE, I want to share several key topics to better understand the Transformer, the architecture that changed the world and laid the foundation o...

Summary of the feedforward network in the Transformer model: the feedforward network is a cornerstone of the Transformer architecture, enhancing its capability to handle diverse...

Read this chapter to understand the FFNN sublayer, its role in the Transformer, and how to implement an FFNN in the Transformer architecture using Python.

After reading the 'Attention Is All You Need' paper, I understand the general architecture of a Transformer. However, it is unclear to me how the feed-forward neural network learns. The statement is: 'the feed forward network applies a point wise fully connected layer to each position separately and identically.' Can anyone explain this statement? Could someone please explain why the feed-forward layer is placed after the multi-head attention layer and not before it?

So, I've been doing a deep dive into understanding the Transformer (in the neural machine translation context). I've found The Illustrated Transformer and The Annotated Transformer very helpful. After great lengths, I think I've gotten a solid intuition for what the self-attention layer will learn.
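The quoted statement about applying the same layer "to each position separately and identically" can be made concrete with a small sketch. It assumes the two-layer form from 'Attention Is All You Need', FFN(x) = max(0, x·W1 + b1)·W2 + b2. The function name `position_wise_ffn`, the tiny dimensions, and the random weights are illustrative assumptions, not taken from any of the sources above.

```python
import numpy as np


def position_wise_ffn(x, w1, b1, w2, b2):
    """Apply a two-layer feed-forward network to every row (position) of x.

    x has shape (seq_len, d_model); the result has the same shape.
    """
    hidden = np.maximum(0.0, x @ w1 + b1)  # linear layer followed by ReLU
    return hidden @ w2 + b2                # project back to d_model


# Illustrative sizes (assumption): the paper uses d_model=512, d_ff=2048.
seq_len, d_model, d_ff = 4, 8, 32

rng = np.random.default_rng(0)
x = rng.normal(size=(seq_len, d_model))      # one vector per token position
w1 = rng.normal(size=(d_model, d_ff)) * 0.1  # weights shared by all positions
b1 = np.zeros(d_ff)
w2 = rng.normal(size=(d_ff, d_model)) * 0.1
b2 = np.zeros(d_model)

# "Identically": one matrix multiply over the whole sequence uses the same
# weights for every position.
out_all = position_wise_ffn(x, w1, b1, w2, b2)

# "Separately": feeding positions one at a time gives the same result, so no
# position's output depends on any other position's input.
out_one_by_one = np.stack(
    [position_wise_ffn(x[i:i + 1], w1, b1, w2, b2)[0] for i in range(seq_len)]
)

same = np.allclose(out_all, out_one_by_one)
print(out_all.shape, same)
```

Because the FFN never mixes information between positions, any exchange of information across tokens has to come from the attention sub-layer. The FFN then transforms each token's representation on its own.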

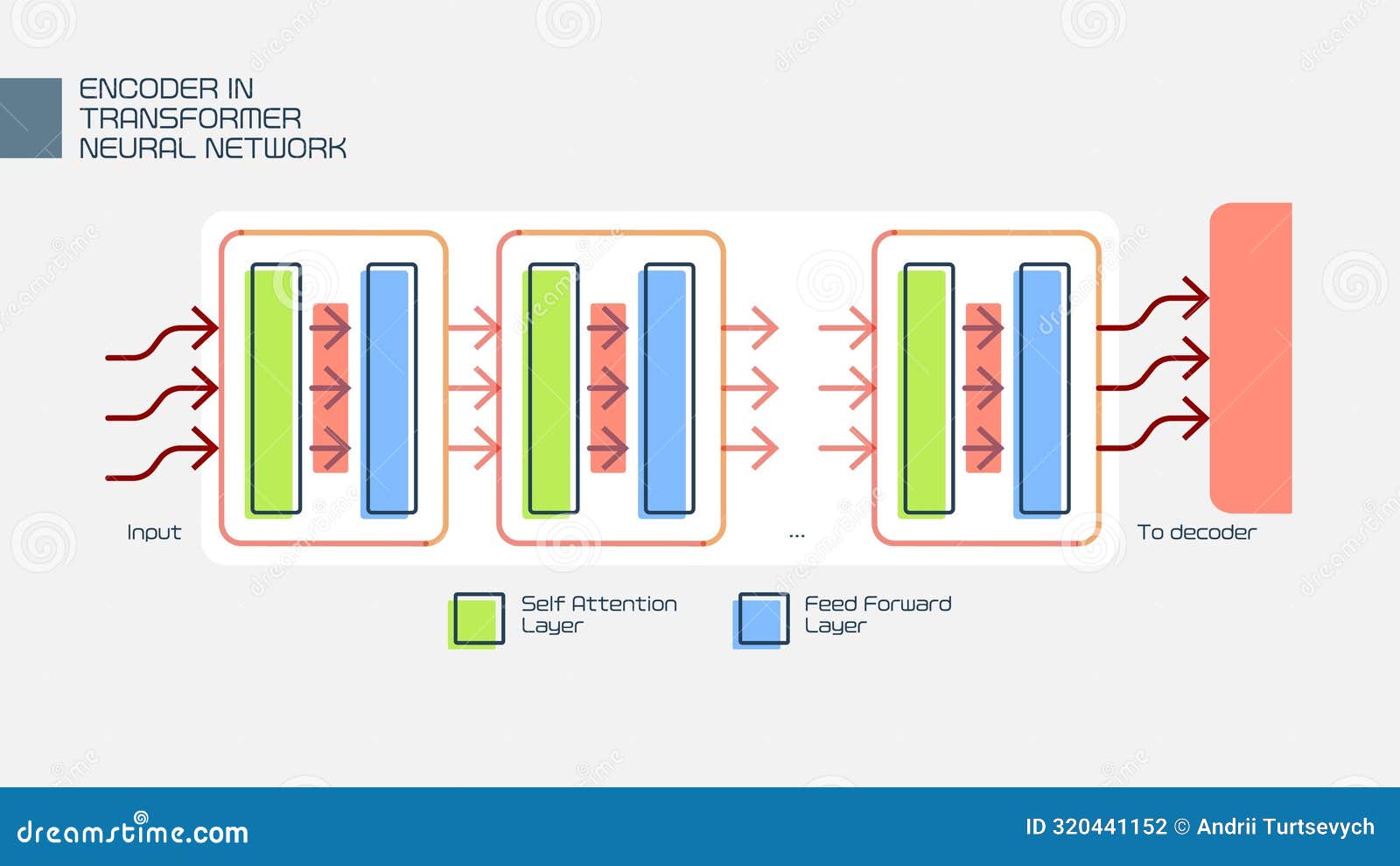

In this work, we make a substantial step towards unveiling this underlying prediction process, by reverse engineering the operation of the feed-forward network (FFN) layers, one of the building blocks of transformer models.

These results are the first step in exploring the application of architecture search to feed-forward sequence models. The Evolved Transformer is being open sourced as part of Tensor2Tensor, where it can be used for any sequence problem.

A clear breakdown of Transformer layers (LayerNorm, attention, feedforward, and residuals), explained step by step with visuals.

It's a comprehensive framework that supports a wide range of neural network architectures, from simple feedforward networks to complex models like the Transformer.

