Dive into Deep Learning Coding Session 5: Attention Mechanism II (Americas/EMEA)

The sessions include discussing and resolving coding questions participants might have. Dive into Deep Learning, an open-source, interactive book that integrates text, mathematics, and code, recently added a new chapter on attention mechanisms.

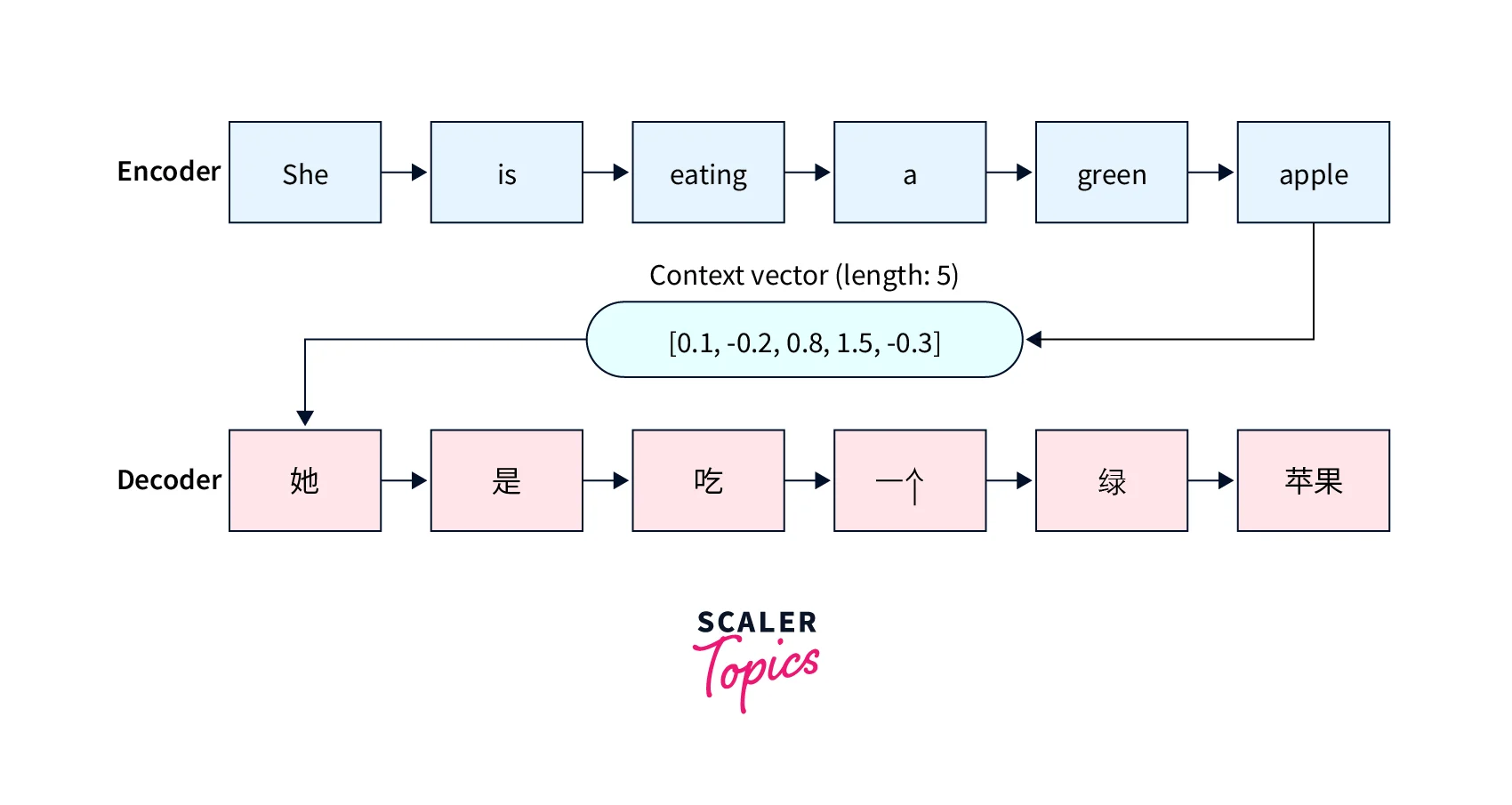

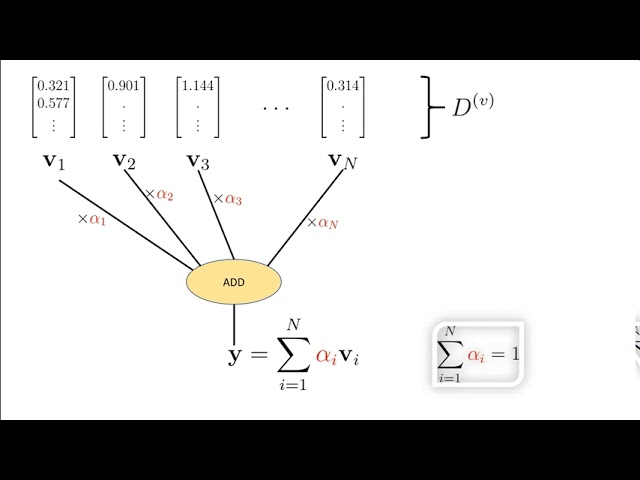

Material and references from the MLT Dive into Deep Learning coding sessions. These biweekly sessions are code-focused: they reimplement selected models from the book Dive into Deep Learning and are meant for people interested in implementing models from scratch.

The core idea behind the Transformer model is the attention mechanism, an innovation originally envisioned as an enhancement for encoder–decoder RNNs applied to sequence-to-sequence applications such as machine translation (Bahdanau et al., 2014).

In this blog post, we’ll provide a comprehensive introduction to attention mechanisms and showcase their implementation using a popular deep learning framework with Python code. Let’s get started! What is the attention mechanism? Attention mechanisms have become a cornerstone of modern deep learning architectures, particularly in natural language processing (NLP). This post provides an overview of attention mechanisms, from their theoretical foundations to practical implementation.
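To make the idea concrete, here is a minimal sketch of one common form of attention, scaled dot-product attention, written in plain Python with no framework. The text above does not say which scoring function the sessions use, so the choice of scaled dot-product scoring, the function names `softmax` and `scaled_dot_product_attention`, and the toy queries, keys, and values are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Illustrative (hypothetical) helper: returns (outputs, weights).

    queries, keys: lists of vectors sharing the same dimension d.
    values: list of vectors, one per key.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of the query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        # Attention weights: non-negative and summing to 1 across the keys.
        weights = softmax(scores)
        # Output: weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy data (assumed): one query that matches the first key best.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
outputs, weights = scaled_dot_product_attention(queries, keys, values)
print(weights[0], outputs[0])
```

The query aligns most closely with the first key, so the first value receives the largest weight and the output is pulled toward it. A framework implementation would do the same computation with batched matrix operations instead of Python loops.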

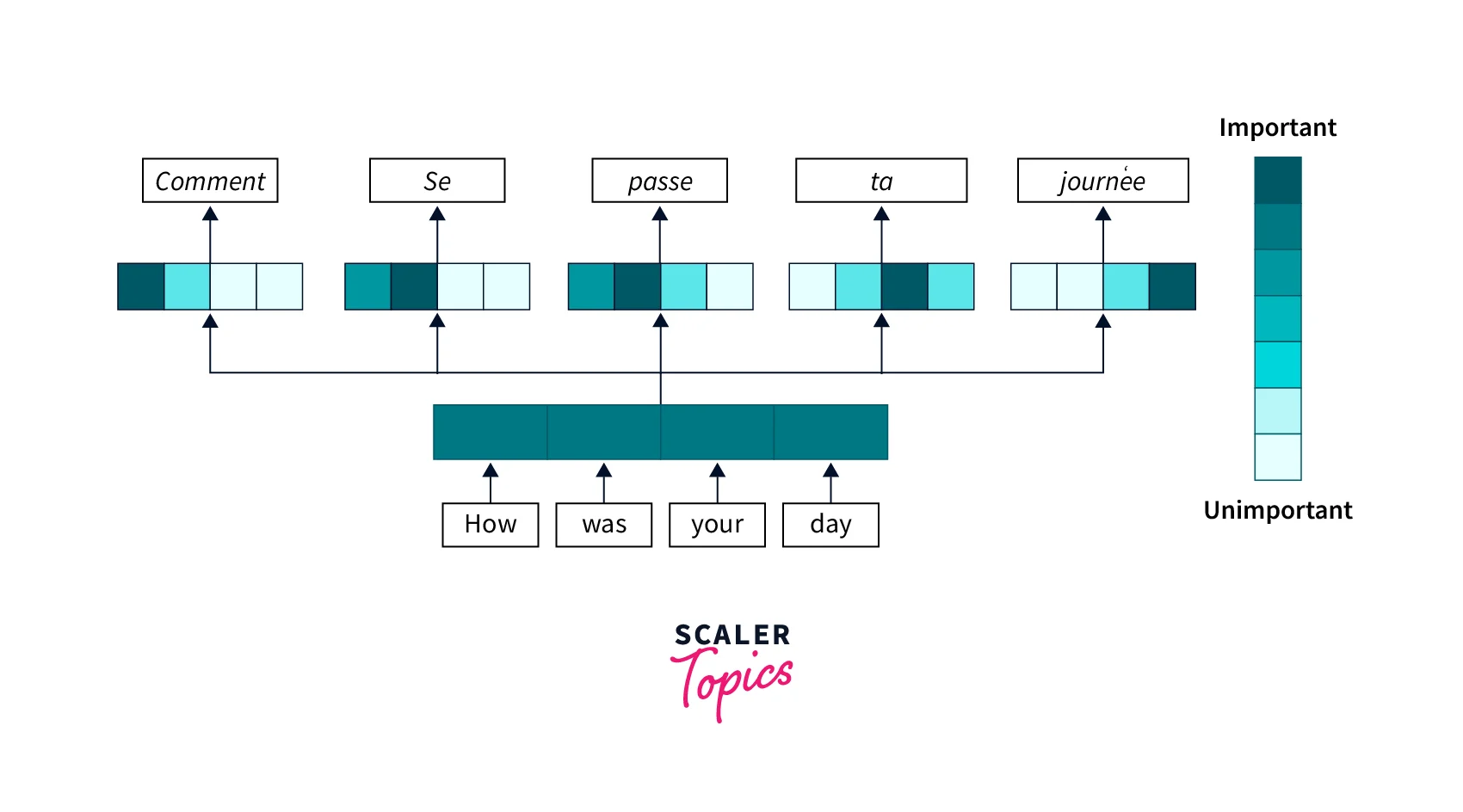

In this chapter, we will begin by reviewing a popular framework explaining how attention is deployed in a visual scene. Inspired by the attention cues in this framework, we will design models that leverage such attention cues.

Attention mechanisms have revolutionized natural language processing (NLP), allowing neural networks to focus on the most relevant parts of input data. But don’t worry; you won’t need a PhD to ...

This repository contains TensorFlow 2 code for the attention mechanisms chapter of the Dive into Deep Learning (D2L) book. The chapter has 7 sections, and code for each section can be found at the following links.

