Diffusion Model Classifier Guidance Image To U

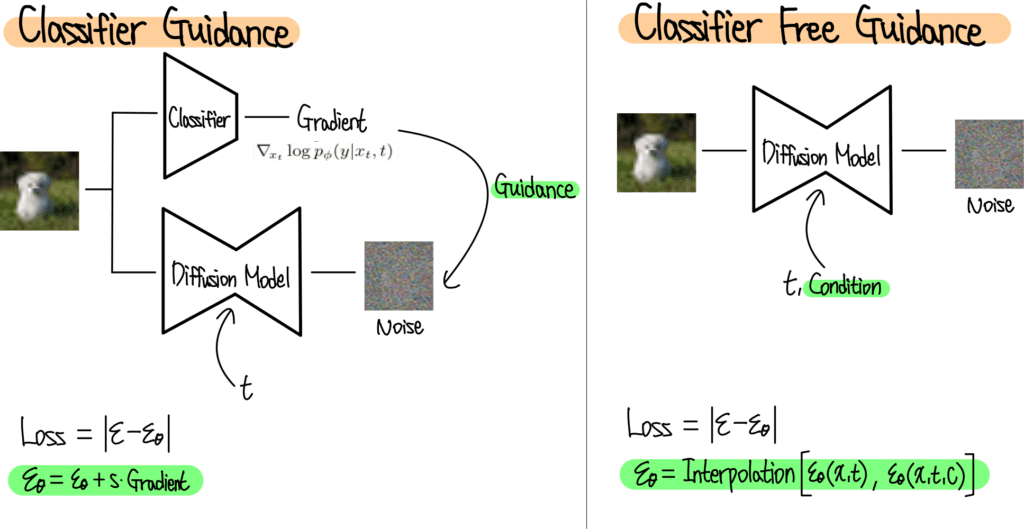

One line of work introduces a new method for diffusion model guidance with various advantages over existing methods, demonstrated by adding aesthetic guidance to Stable Diffusion. Classifier guidance allows us to add additional control to diffusion model sampling. First, let's take a look at the table below, which shows the main differences between classifier guidance and classifier-free guidance in practice.

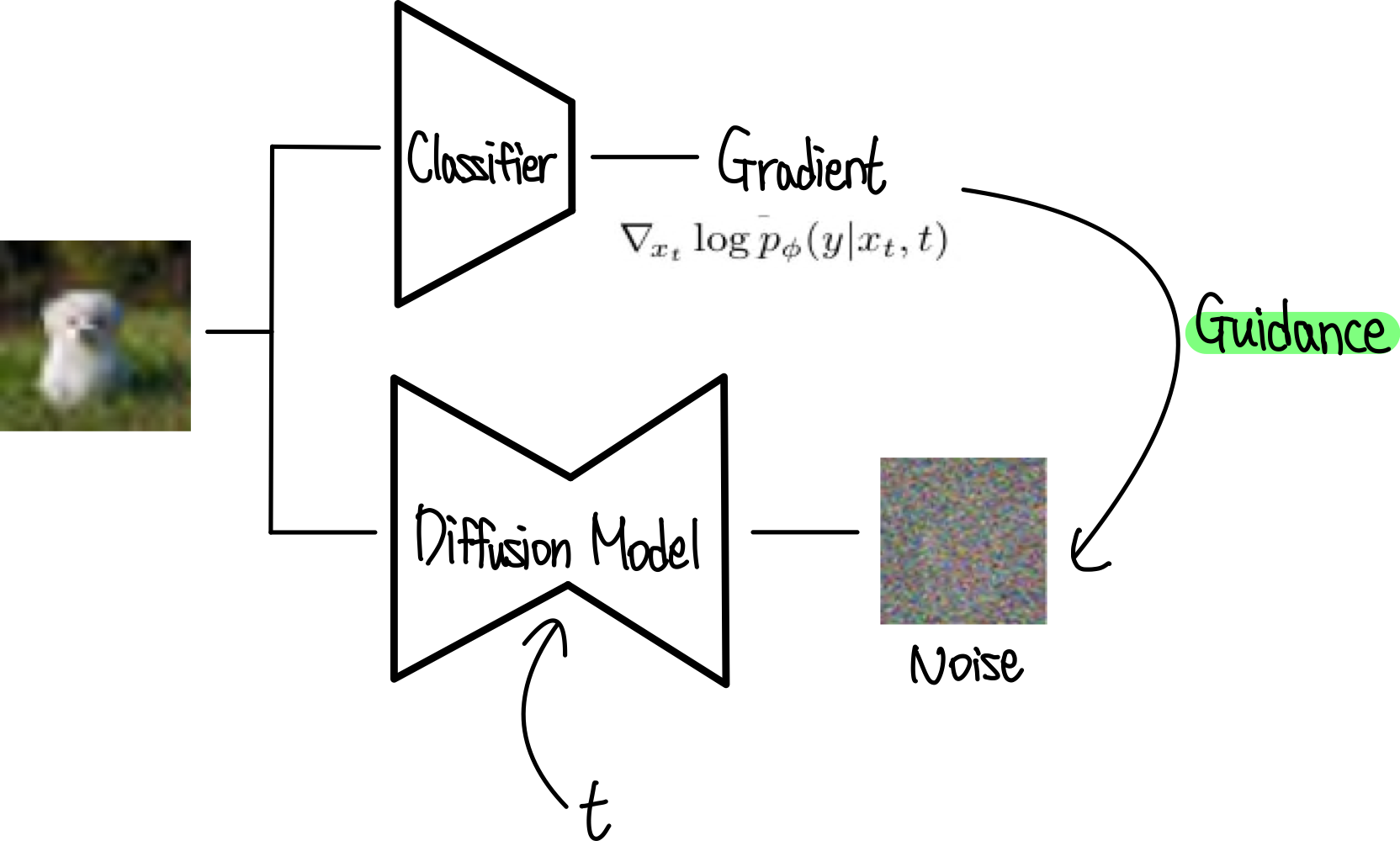

Today, we are going to focus on classifier guidance. Let's formalize our goal: we want to sample an image $x$ conditioned on a goal variable $y$. For example, $x$ could be an image of a handwritten digit, and $y$ its class, i.e. the digit the image represents.

Diffusion models have been shown to achieve image sample quality superior to the current state-of-the-art generative models; on unconditional image synthesis, this is achieved by finding a better architecture through a series of ablations. As a practical example, one project implements a conditional diffusion model with classifier guidance for generating butterfly images.

Classifier guidance is one of the earlier, yet significant, techniques for steering the generation process towards specific desired attributes, such as a particular image class. The method modifies the sampling trajectory by incorporating information from an external classifier model.
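The way the external classifier enters the sampling trajectory can be written down with Bayes' rule. The notation here is an assumption and is not taken from the text above: $x_t$ is the noisy image at step $t$, $p(y \mid x_t)$ is the classifier evaluated on noisy inputs, and $s$ is a guidance scale. Since $\log p(y)$ does not depend on $x_t$, the conditional score splits into an unconditional part and a classifier part:

$$
\nabla_{x_t} \log p(x_t \mid y) = \nabla_{x_t} \log p(x_t) + \nabla_{x_t} \log p(y \mid x_t)
$$

In practice the classifier term is commonly multiplied by a scale $s$, giving the guided direction

$$
\nabla_{x_t} \log p(x_t) + s \, \nabla_{x_t} \log p(y \mid x_t),
$$

where $s = 1$ recovers the exact conditional score and $s > 1$ pushes samples more strongly toward the class $y$, typically at some cost in diversity.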

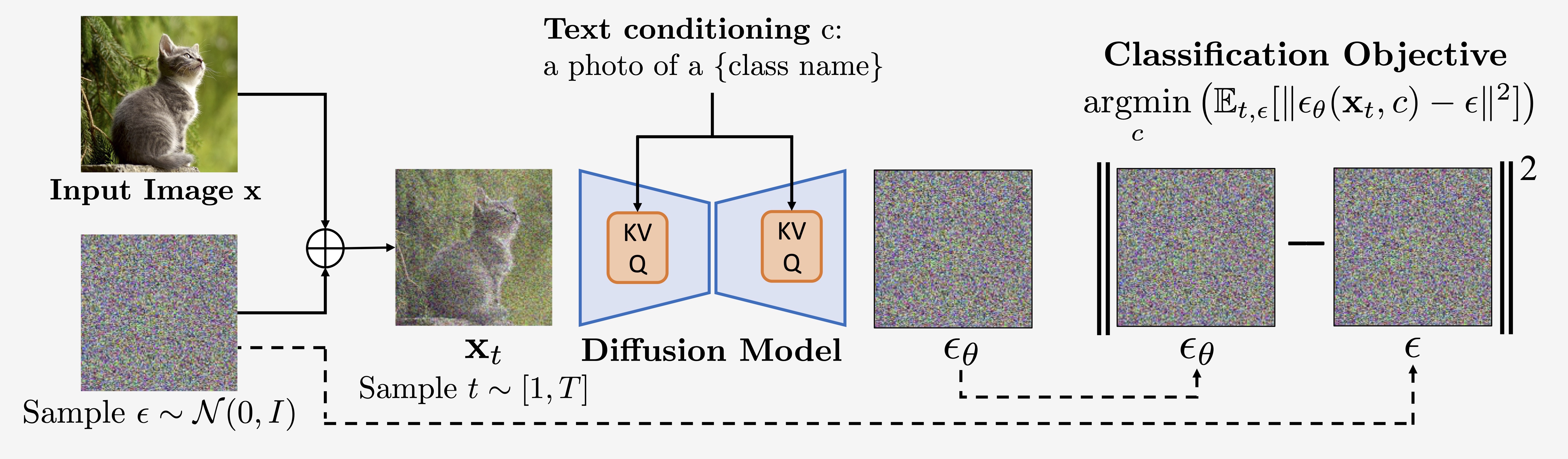

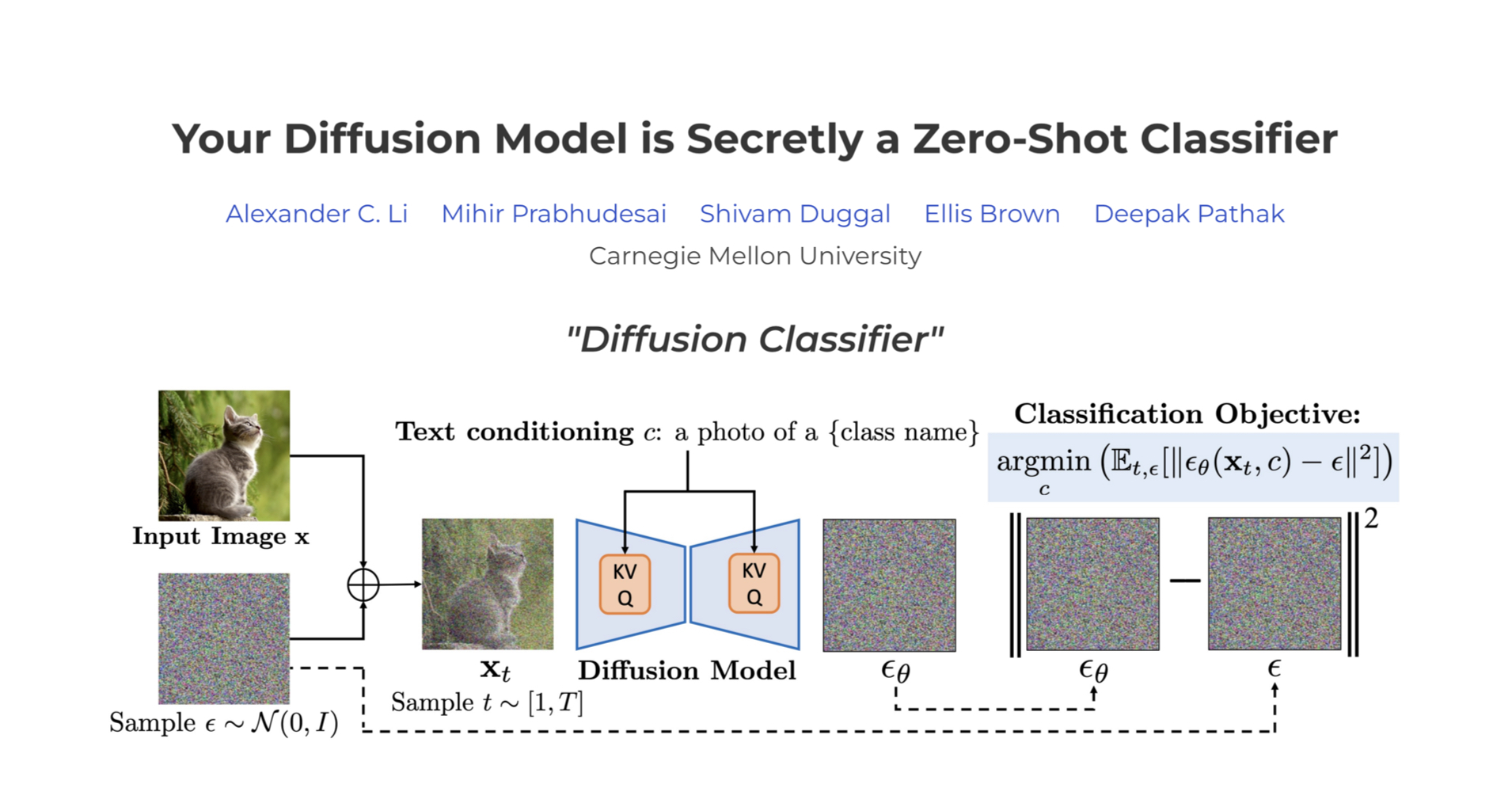

A naïve approach to image-conditioned generation is to add Gaussian noise at the appropriate level to the original image. See also B. Poole, A. Jain, J. Barron, B. Mildenhall, "DreamFusion: Text-to-3D using 2D Diffusion", arXiv 2022.

Classifier guidance also appears in domain-specific work. In one underwater imaging approach, the authors first generate a large-scale synthetic underwater dataset based on three representative synthesis methods to activate an image-to-image diffusion model. They then incorporate prior knowledge from the in-air natural domain with CLIP to train an explicit CLIP classifier.

Suppose you want to generate images of trucks. Without guidance, the model samples randomly across all classes. By adding a classifier trained to distinguish the CIFAR-10 classes, you can compute gradients that push each denoising step toward features typical of trucks (e.g., wheels, flatbeds).

Universal guidance algorithms generalize this idea to guided image generation with diffusion models using any off-the-shelf guidance function $f$, such as object detection or segmentation networks.
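The truck example can be illustrated on a much smaller problem. The sketch below is a toy, not a diffusion model: it assumes a 1D "dataset" that is an equal mixture of two unit-variance Gaussians centered at -2 (class 0) and +2 (class 1), for which both the unconditional score and the optimal classifier are known in closed form. It uses plain Langevin dynamics as a stand-in for the denoising loop. All function names and parameters are hypothetical and chosen for illustration only.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def unconditional_score(x):
    # d/dx log p(x) for p(x) = 0.5 * N(-2, 1) + 0.5 * N(2, 1).
    return -x + 2.0 * math.tanh(2.0 * x)


def classifier_grad(x, y):
    # d/dx log p(y | x); for this mixture p(y=1 | x) = sigmoid(4x).
    p1 = sigmoid(4.0 * x)
    return 4.0 * (1.0 - p1) if y == 1 else -4.0 * p1


def guided_score(x, y, scale=1.0):
    # Classifier guidance: unconditional score plus scaled classifier gradient.
    return unconditional_score(x) + scale * classifier_grad(x, y)


def langevin_sample(score_fn, steps=500, step_size=0.05, rng=random):
    # Unadjusted Langevin dynamics driven by the supplied score function.
    x = rng.gauss(0.0, 1.0)
    for _ in range(steps):
        noise = rng.gauss(0.0, 1.0)
        x += 0.5 * step_size * score_fn(x) + math.sqrt(step_size) * noise
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    unguided = [langevin_sample(unconditional_score, rng=rng) for _ in range(200)]
    guided = [langevin_sample(lambda x: guided_score(x, 1), rng=rng) for _ in range(200)]
    print("share of unguided samples with x > 0:", sum(v > 0 for v in unguided) / 200)
    print("share of guided samples with x > 0:  ", sum(v > 0 for v in guided) / 200)
```

With `scale=1.0` the guided score in this toy equals the score of the class-1 Gaussian exactly, so guided samples concentrate around +2, while unguided samples split between both modes. In a real setup the closed-form functions would be replaced by a trained denoiser and a classifier trained on noisy images.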

