Deploying Custom LLMs: A Hugging Face End-to-End Guide

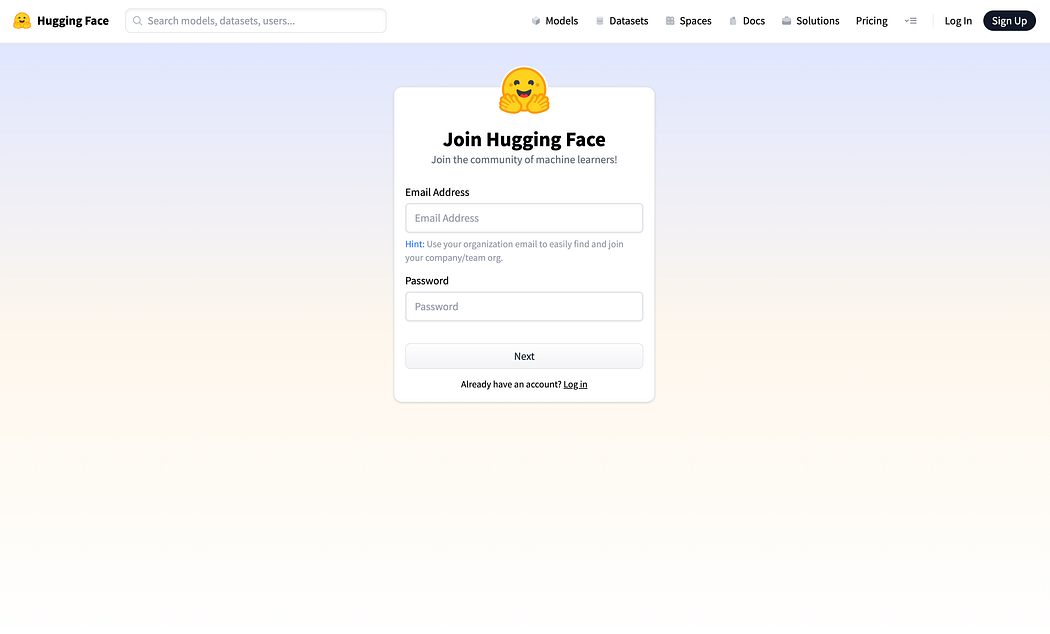

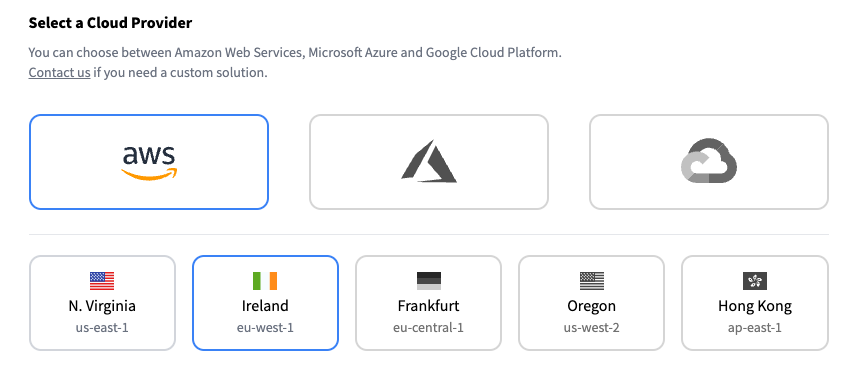

In this blog post, we will show you how to deploy open-source LLMs to Hugging Face Inference Endpoints, our managed SaaS solution that makes it easy to deploy models. Additionally, we will teach you how to stream responses and test the performance of our endpoints. So let's get started! In this comprehensive guide, we'll explore three popular methods for deploying custom LLMs and delve into the detailed process of deploying models as Hugging Face inference.
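As a rough illustration of what streaming responses from a deployed endpoint can look like, here is a minimal sketch using only the Python standard library. It assumes the endpoint runs a text-generation server that accepts a JSON payload with `inputs`, `parameters`, and `stream`, and that it replies with server-sent events whose `data:` lines carry a `token` object. The URL, the token, and the helper names (`build_request`, `parse_sse_tokens`, `stream_generate`) are hypothetical placeholders, not part of any official client.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Hypothetical placeholders: replace with your own endpoint URL and access token.
ENDPOINT_URL = "https://YOUR-ENDPOINT.endpoints.huggingface.cloud"
HF_TOKEN = "hf_xxx"


def build_request(prompt: str, max_new_tokens: int = 64) -> urllib.request.Request:
    """Build a streaming text-generation request (payload shape is an assumption)."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
        "stream": True,
    }
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {HF_TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def parse_sse_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield token text from server-sent-event lines of the form b'data:{...}'."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        event = json.loads(line[len("data:"):])
        token = event.get("token", {})
        if not token.get("special", False):  # drop end-of-sequence markers etc.
            yield token.get("text", "")


def stream_generate(prompt: str) -> Iterator[str]:
    """Send the request and yield tokens as they arrive (needs a live endpoint)."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        # An HTTP response object can be iterated line by line as bytes.
        yield from parse_sse_tokens(response)
```

With a running endpoint, something like `for piece in stream_generate("Hello"): print(piece, end="", flush=True)` would print tokens as they arrive. The parser is kept separate from the network call so it can be exercised against recorded event lines without a live deployment.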

Hands-on tutorial for launching and deploying LLMs using Friendli Dedicated Endpoints with Hugging Face models. Deploy and score transformers-based large language models from the Hugging Face Hub. In this video, you can learn how to create an endpoint by using LLMs from Hugging Face with Friendli Dedicated Endpoints. Deploy custom Hugging Face models on Novita AI's LLM Dedicated Endpoint. Enjoy flexible LoRA support, 99.5% SLA, and scalable GPU resources.

Learn how to deploy fine-tuned LLMs using Hugging Face Inference Endpoints. This step-by-step tutorial covers cloud integration and MLOps best practices. This repository provides a ready-to-use framework for fine-tuning, distilling, and deploying custom LLMs via Hugging Face. It includes training scripts, model quantization, and automated deployment to Hugging Face Spaces. Learn to deploy LLMs using Hugging Face and Kubernetes on OCI for scalable and secure AI deployments. We'll cover everything from setting up your environment and preparing your data to deploying and maintaining your customized model. Whether you're an AI professional or an enthusiastic learner, this tutorial is designed to be easy to understand and highly engaging. Why fine-tune an LLM?
