Deploy Models With Hugging Face Inference Endpoints

Inference Endpoints

Hugging Face Inference Endpoints is a managed service for deploying your AI models to production. Here you'll find quickstarts, guides, tutorials, use cases, and more, so you can deploy a production-ready AI model in minutes and understand the main components and benefits of Inference Endpoints. A related option exists on Azure: you can search thousands of Transformers models in the Azure Machine Learning model catalog and deploy them to a managed online endpoint through a guided wizard. Once deployed, the managed online endpoint gives you a secure REST API for scoring your model in real time.
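
To make the "secure REST API" concrete, here is a minimal Python sketch of a real-time scoring call that uses only the standard library. It assumes bearer-token authentication and a JSON body of the form `{"inputs": ..., "parameters": {...}}`, the shape Hugging Face task endpoints commonly accept; other services (Azure ML managed online endpoints included) may expect a different body. The function name `build_inference_request`, the URL, and the token are hypothetical placeholders.

```python
import json
import urllib.request
from typing import Any, Optional


def build_inference_request(
    endpoint_url: str,
    token: str,
    inputs: Any,
    parameters: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a deployed model.

    Assumes bearer-token auth and a body shaped like
    {"inputs": ..., "parameters": {...}}; check your endpoint's docs.
    """
    payload = {"inputs": inputs}
    if parameters:
        payload["parameters"] = parameters
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical URL and token: substitute the values for your own endpoint.
    req = build_inference_request(
        "https://my-endpoint.example.com",
        "hf_xxx",
        "Deploying a model to production is",
        {"max_new_tokens": 32},
    )
    print(req.get_method(), req.full_url)
    # Sending the request performs the real-time scoring call:
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.loads(resp.read()))
```

Building the request separately from sending it keeps the payload easy to inspect and test before any network call is made.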

Inference Endpoints By Hugging Face

Explore the main benefits, security measures, best practices, and success stories of Hugging Face Inference Endpoints, and how they can help you get an AI project running in minutes. Learn how to use Hugging Face Inference Endpoints to deploy and run powerful AI models such as Llama without writing any code. This step-by-step guide covers: what…

Learn how to deploy fine-tuned LLMs using Hugging Face Inference Endpoints. This step-by-step tutorial covers cloud integration and MLOps best practices. In this blog post, we will show you how to deploy open-source LLMs to Hugging Face Inference Endpoints, our managed SaaS solution that makes it easy to deploy models. We will also show you how to stream responses and test the performance of the endpoints. Let's get started!
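
Streaming and performance testing meet in one small routine. The sketch below assumes a TGI-style streaming route that emits server-sent events, one `data:` line per generated token, each carrying a JSON object with a `token` field (`text`, `special`). It joins the token texts and records time to first token, a common latency metric for LLM endpoints. The function name `consume_token_stream` is hypothetical, and the event format should be checked against the TGI version your endpoint runs.

```python
import json
import time
from typing import Iterable, List, Optional, Tuple


def consume_token_stream(lines: Iterable[bytes]) -> Tuple[str, Optional[float], float]:
    """Parse TGI-style server-sent events and time the stream.

    Returns (generated_text, time_to_first_token_s, total_time_s).
    Assumes event lines look like:
        data:{"token": {"text": "...", "special": false}, ...}
    """
    start = time.perf_counter()
    first_token_at: Optional[float] = None
    pieces: List[str] = []
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank event separators and other SSE fields
        event = json.loads(line[len("data:"):])
        if "error" in event:
            raise RuntimeError(event["error"])
        token = event.get("token")
        if not token or token.get("special"):
            continue  # e.g. an end-of-sequence token
        if first_token_at is None:
            first_token_at = time.perf_counter() - start
        pieces.append(token.get("text", ""))
    total = time.perf_counter() - start
    return "".join(pieces), first_token_at, total
```

Against a live endpoint, you could pass the response object returned by `urllib.request.urlopen` for a streaming request, since iterating over it yields raw lines as bytes. Time to first token is measured from when reading starts, so open the connection just before calling the function if you want the figure to include server-side queueing.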

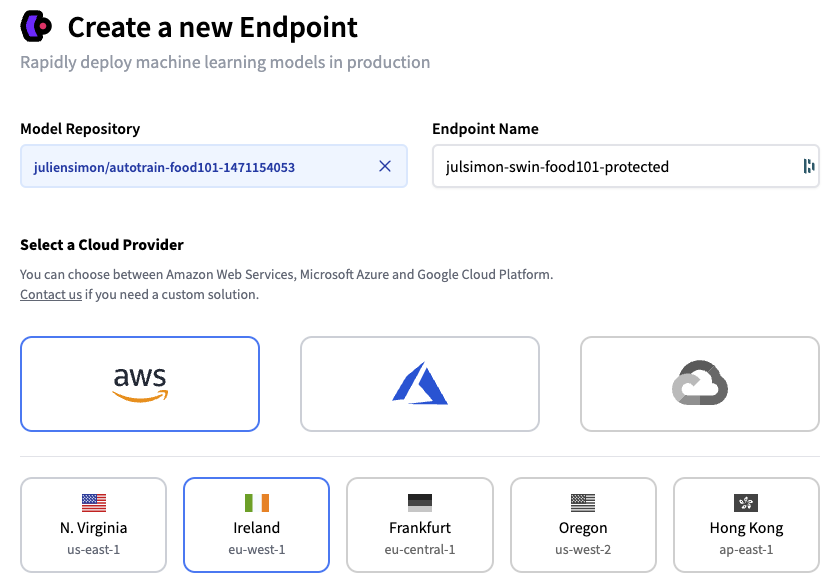

Getting Started With Hugging Face Inference Endpoints

In partnership with cloud providers such as AWS and Azure, Hugging Face created Inference Endpoints, a user-friendly solution for deploying and managing your model in the cloud, directly from… Deploying a model comes down to choosing your cloud provider and configuring the deployment settings so the model integrates cleanly with your application.

Hugging Face offers several deployment options, each suited to different use cases and requirements. Text Generation Inference (TGI) is an open-source, purpose-built solution for deploying and serving large language models (LLMs).

In this article, we'll look at how to spin up your first Hugging Face Inference Endpoint. We'll set up a sample endpoint, show how to invoke it, and show how to monitor its performance. Note: this article assumes basic knowledge of Hugging Face Transformers and Python.
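
Choosing a cloud provider and deployment settings can also be done in code. The sketch below collects those choices in a small, hypothetical `EndpointSettings` dataclass whose field names mirror keyword arguments of `huggingface_hub.create_inference_endpoint`. The defaults follow a CPU example from the `huggingface_hub` documentation, but valid vendors, regions, and instance types depend on your account and change over time, so treat them as assumptions.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EndpointSettings:
    """Deployment choices for one endpoint (hypothetical helper).

    Field names mirror keyword arguments of
    huggingface_hub.create_inference_endpoint; values are examples only.
    """
    name: str
    repository: str
    framework: str = "pytorch"
    task: str = "text-generation"
    accelerator: str = "cpu"
    vendor: str = "aws"           # cloud provider
    region: str = "us-east-1"
    instance_size: str = "x2"
    instance_type: str = "intel-icl"
    type: str = "protected"       # public, protected, or private
    min_replica: int = 0
    max_replica: int = 1

    def __post_init__(self) -> None:
        # Catch obvious mistakes locally, before any API call is made.
        if not self.name or not self.repository:
            raise ValueError("name and repository are required")
        if not 0 <= self.min_replica <= self.max_replica:
            raise ValueError("expected 0 <= min_replica <= max_replica")
        if self.type not in ("public", "protected", "private"):
            raise ValueError(f"unknown endpoint type: {self.type!r}")

    def to_kwargs(self) -> dict:
        """Return the settings as keyword arguments."""
        return asdict(self)


if __name__ == "__main__":
    settings = EndpointSettings(name="my-first-endpoint", repository="gpt2")
    print(settings.to_kwargs())
    # With huggingface_hub installed and a token configured:
    # from huggingface_hub import create_inference_endpoint
    # endpoint = create_inference_endpoint(**settings.to_kwargs())
    # endpoint.wait()  # blocks until the endpoint is running
```

Keeping the settings in a frozen dataclass makes a deployment reproducible: the same object can be logged, reviewed, and passed to the creation call unchanged.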
